Содержание

- 2. Processes A process is the activity of executing a program on a CPU. Conceptually… Each process

- 3. Why Processes? Hardware-independent solutions Processes cooperate and compete correctly, regardless of the number of CPUs Structuring

- 4. How to define/create Processes? Need to: Define what each process does (the program) Create the processes

- 5. Specifying precedence relations A general approach: Process flow graphs Directed acyclic graphs (DAGs) Edges = processes

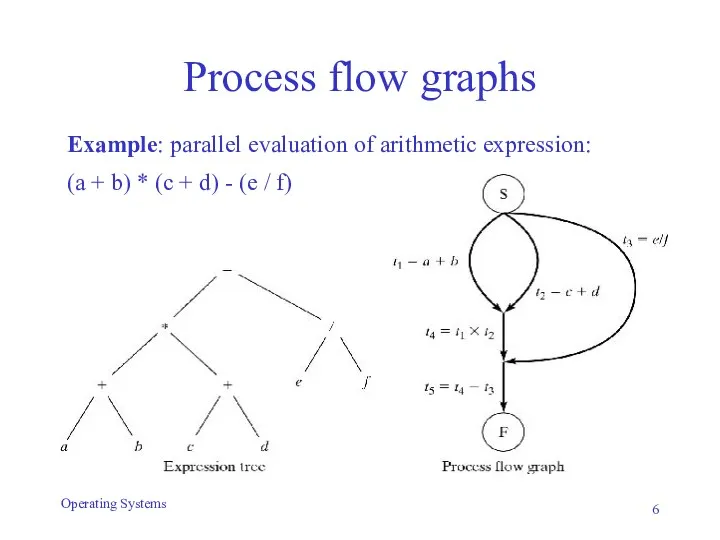

- 6. Process flow graphs Example: parallel evaluation of arithmetic expression: (a + b) * (c + d)

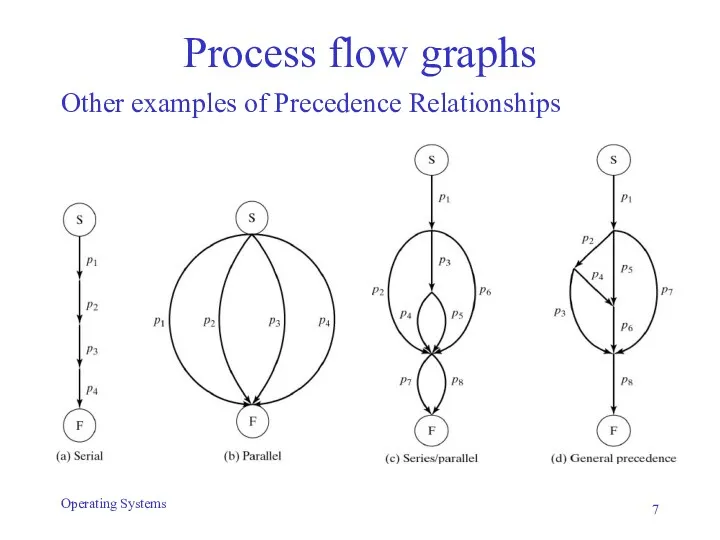

- 7. Other examples of Precedence Relationships Operating Systems Process flow graphs

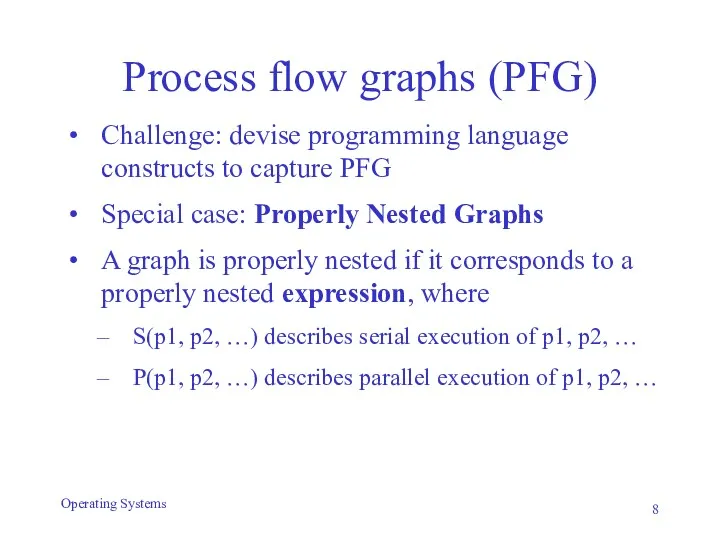

- 8. Process flow graphs (PFG) Challenge: devise programming language constructs to capture PFG Special case: Properly Nested

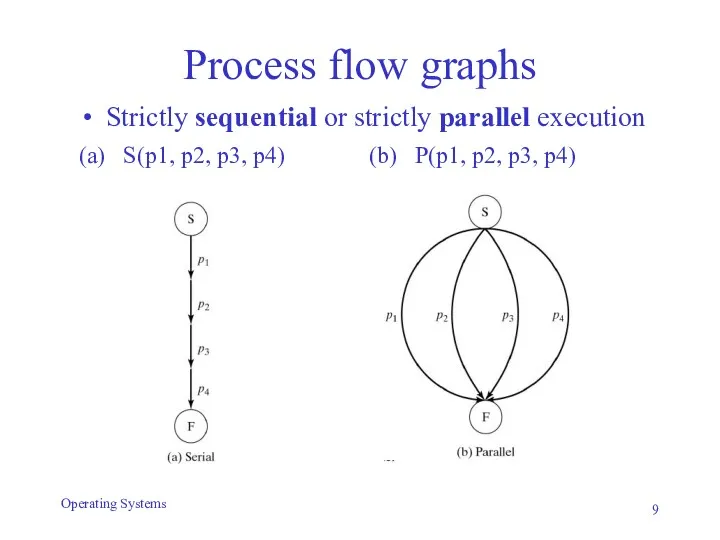

- 9. Process flow graphs Operating Systems (a) S(p1, p2, p3, p4) (b) P(p1, p2, p3, p4) Strictly

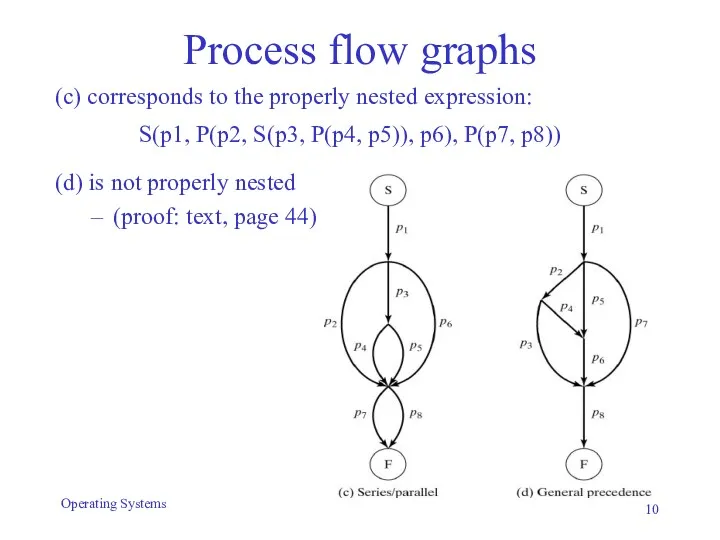

- 10. Process flow graphs (c) corresponds to the properly nested expression: S(p1, P(p2, S(p3, P(p4, p5)), p6),

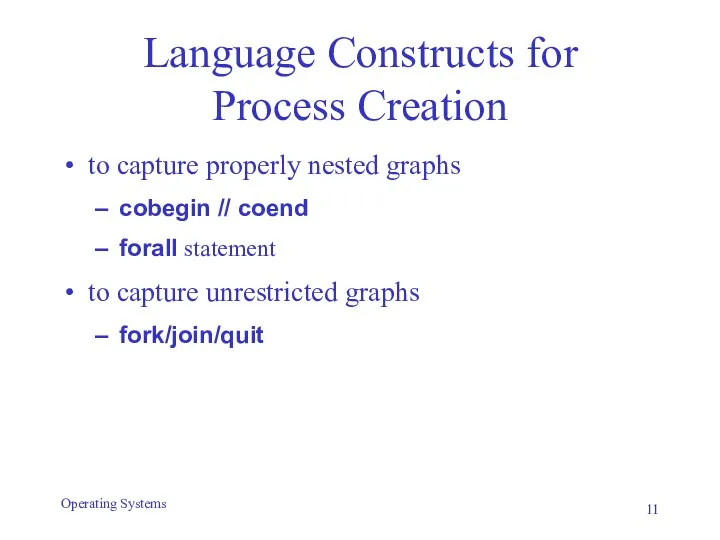

- 11. Language Constructs for Process Creation to capture properly nested graphs cobegin // coend forall statement to

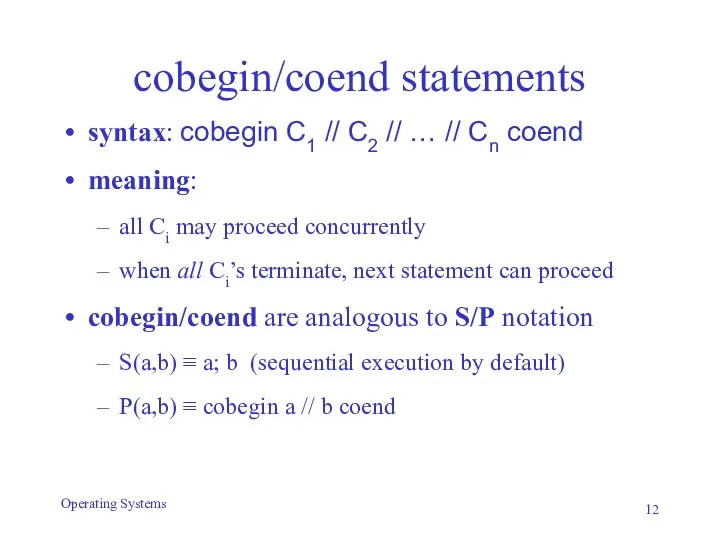

- 12. cobegin/coend statements syntax: cobegin C1 // C2 // … // Cn coend meaning: all Ci may

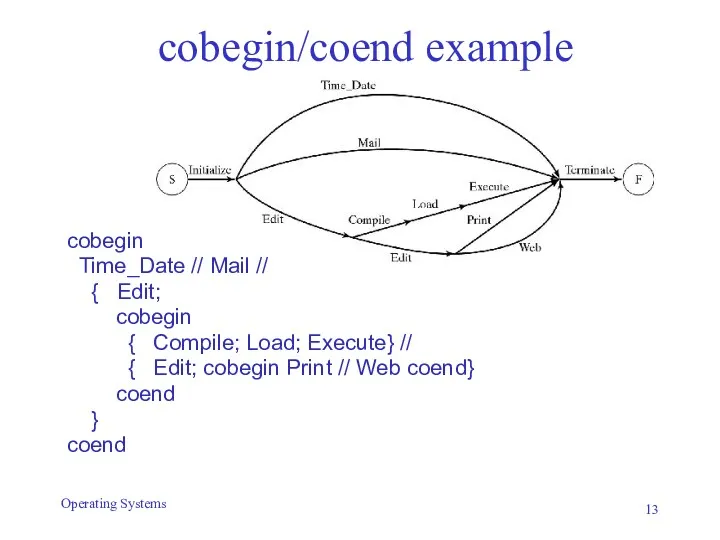

- 13. cobegin/coend example Operating Systems cobegin Time_Date // Mail // { Edit; cobegin { Compile; Load; Execute}

- 14. Data parallelism Same code is applied to different data The forall statement syntax: forall (parameters) statements

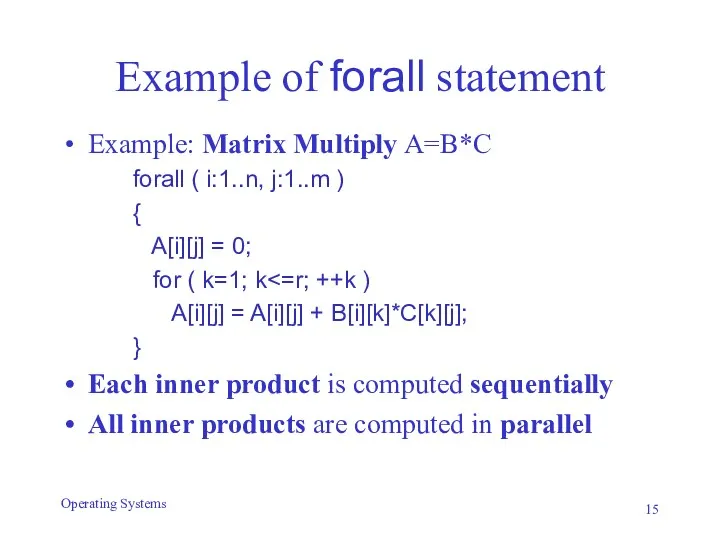

- 15. Example of forall statement Example: Matrix Multiply A=B*C forall ( i:1..n, j:1..m ) { A[i][j] =

- 16. fork/join/quit cobegin/coend limited to properly nested graphs forall limited to data parallelism fork/join/quit can express arbitrary

- 17. fork/join/quit Syntax: fork x Meaning: create new process that begins executing at label x Syntax: join

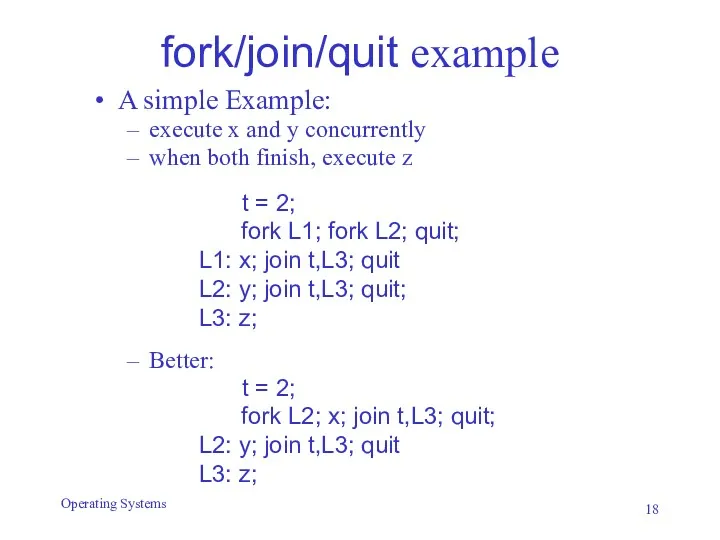

- 18. fork/join/quit example A simple Example: execute x and y concurrently when both finish, execute z t

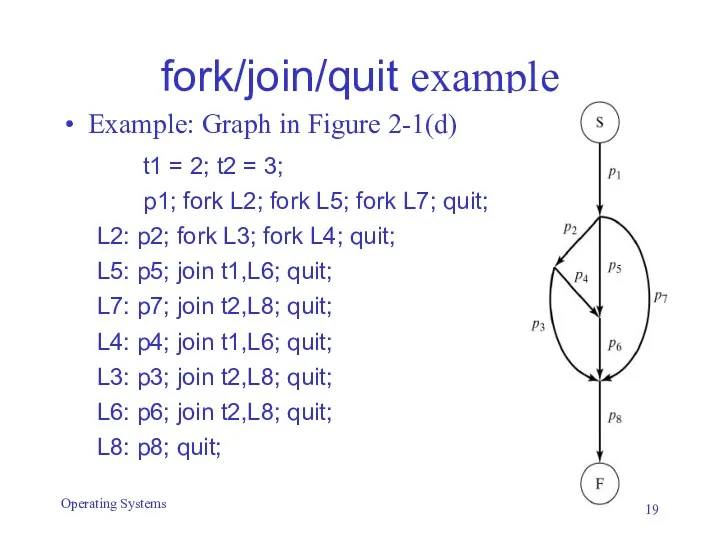

- 19. fork/join/quit example Example: Graph in Figure 2-1(d) t1 = 2; t2 = 3; p1; fork L2;

- 20. Example: the Unix fork statement procid = fork() Replicates calling process Parent and child are identical

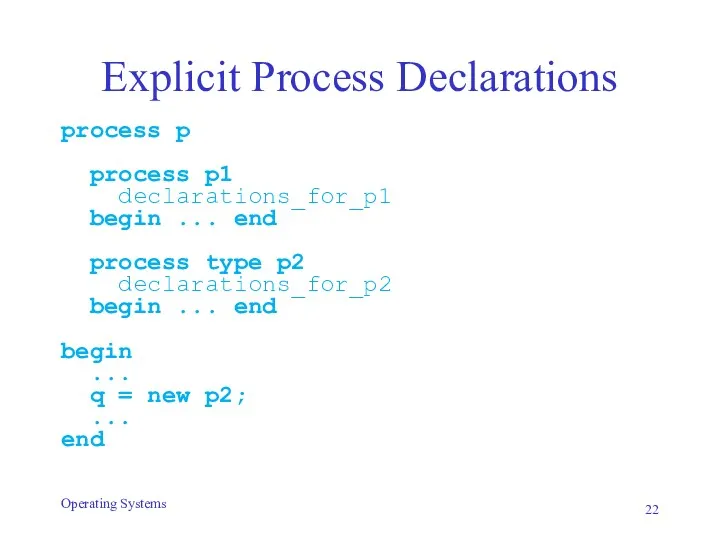

- 21. Explicit Process Declarations Designate piece of code as a unit of execution Facilitates program structuring Instantiate:

- 22. Explicit Process Declarations process p process p1 declarations_for_p1 begin ... end process type p2 declarations_for_p2 begin

- 23. Process Interactions Competition Two processes both want to access the same resource Example: write the same

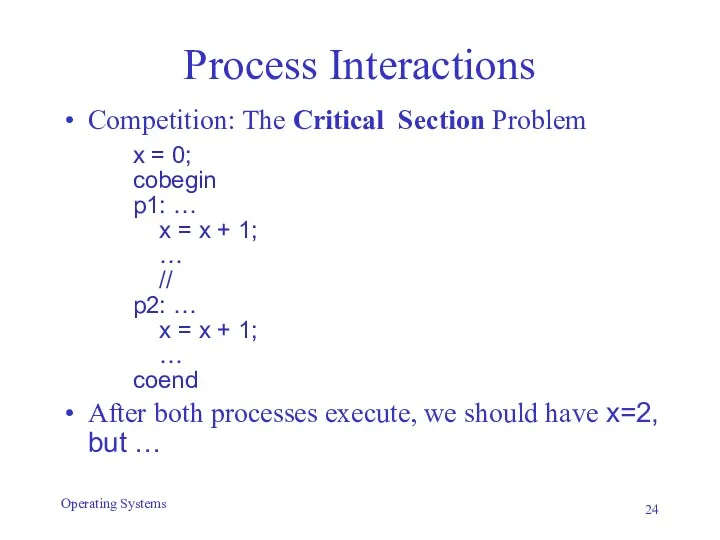

- 24. Process Interactions Competition: The Critical Section Problem x = 0; cobegin p1: … x = x

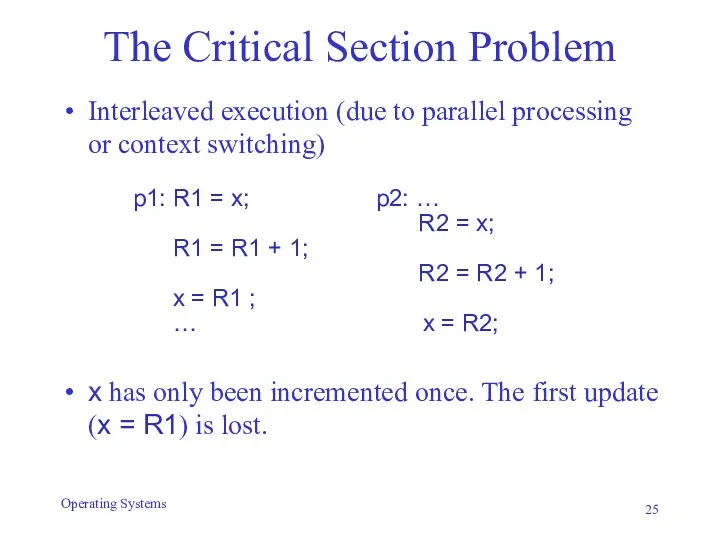

- 25. The Critical Section Problem Interleaved execution (due to parallel processing or context switching) p1: R1 =

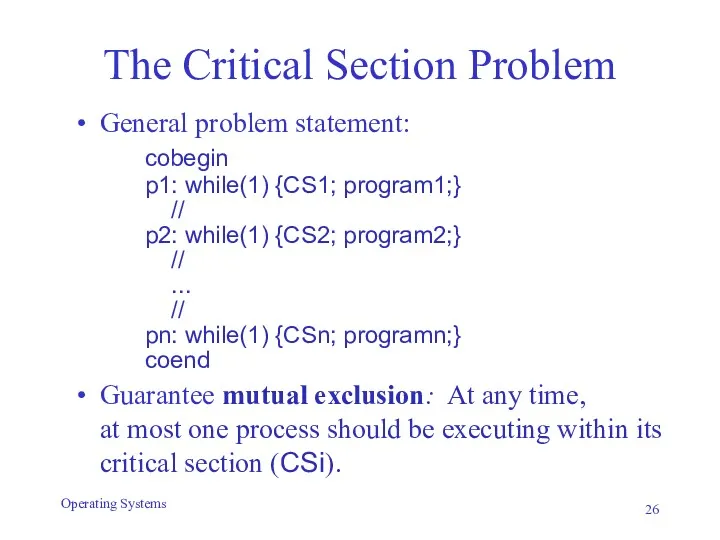

- 26. The Critical Section Problem General problem statement: cobegin p1: while(1) {CS1; program1;} // p2: while(1) {CS2;

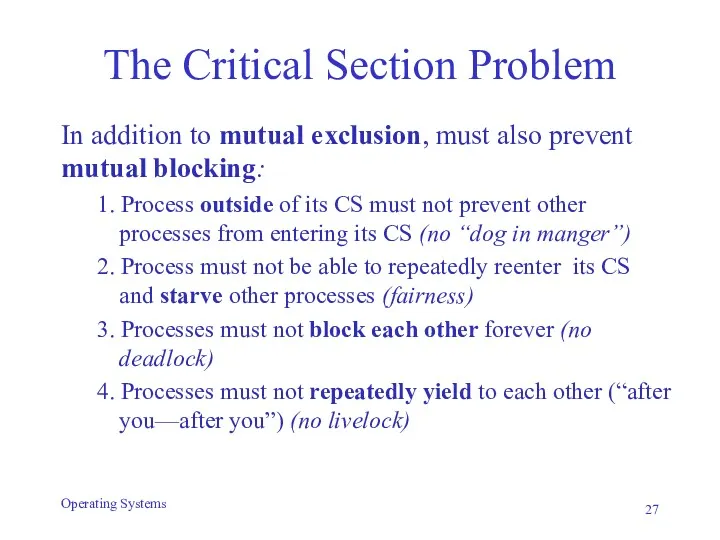

- 27. The Critical Section Problem In addition to mutual exclusion, must also prevent mutual blocking: 1. Process

- 28. The Critical Section Problem Solving the problem is subtle We will examine a few incorrect solutions

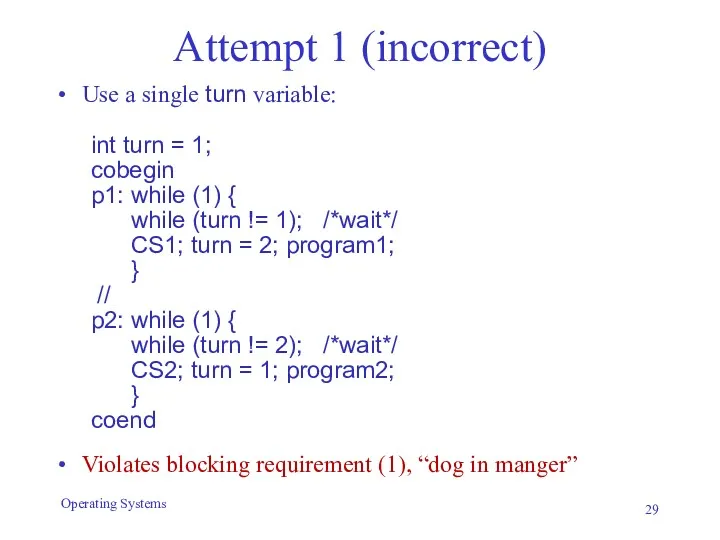

- 29. Attempt 1 (incorrect) Use a single turn variable: int turn = 1; cobegin p1: while (1)

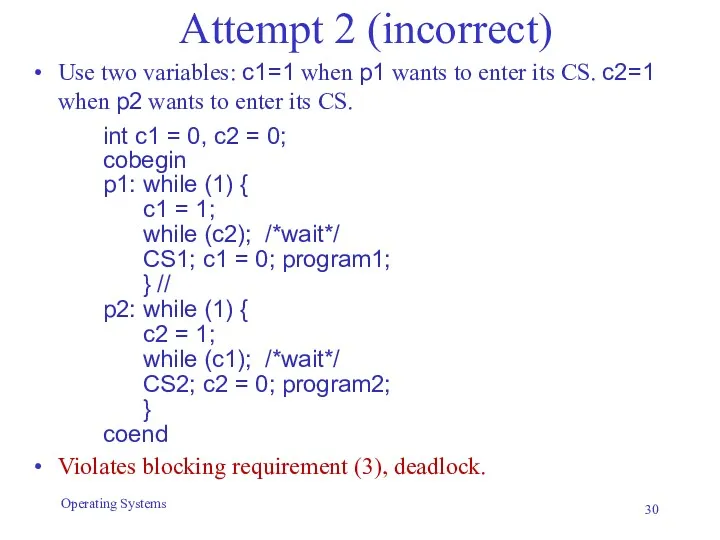

- 30. Attempt 2 (incorrect) Use two variables: c1=1 when p1 wants to enter its CS. c2=1 when

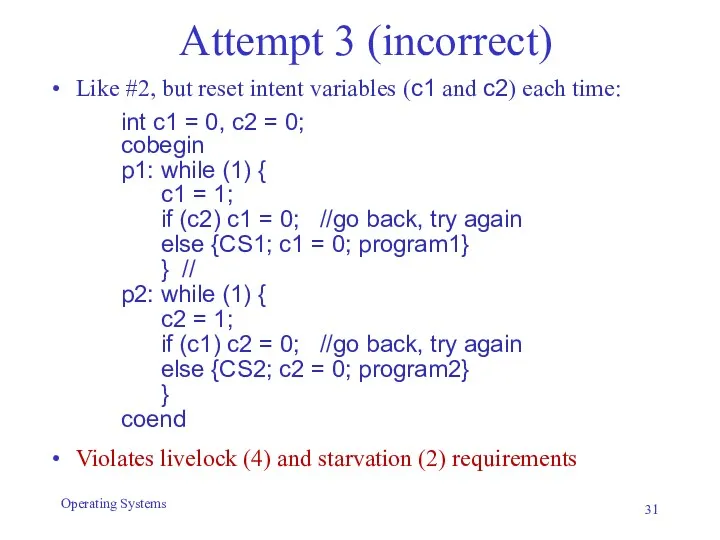

- 31. Attempt 3 (incorrect) Like #2, but reset intent variables (c1 and c2) each time: int c1

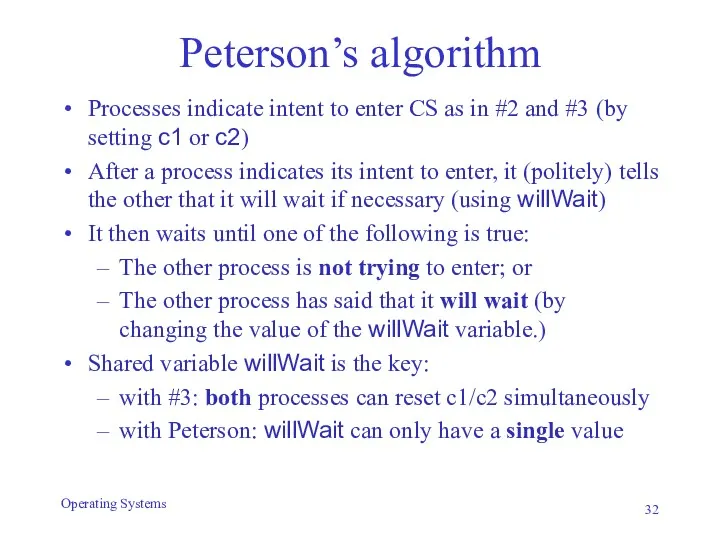

- 32. Peterson’s algorithm Processes indicate intent to enter CS as in #2 and #3 (by setting c1

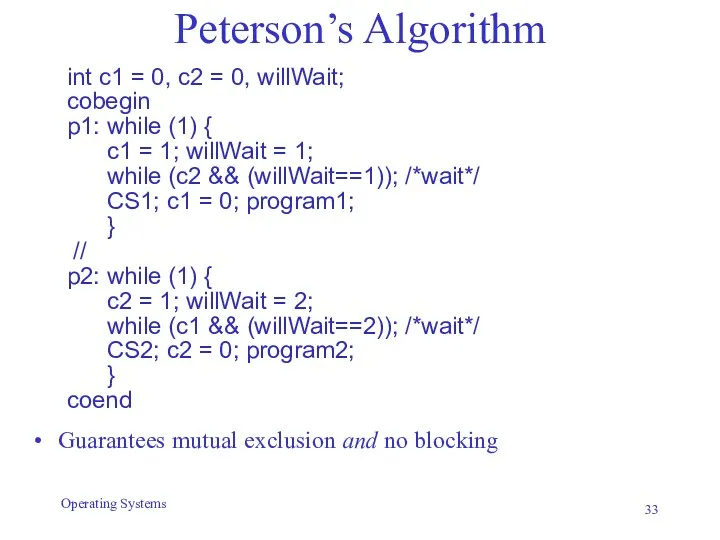

- 33. Peterson’s Algorithm int c1 = 0, c2 = 0, willWait; cobegin p1: while (1) { c1

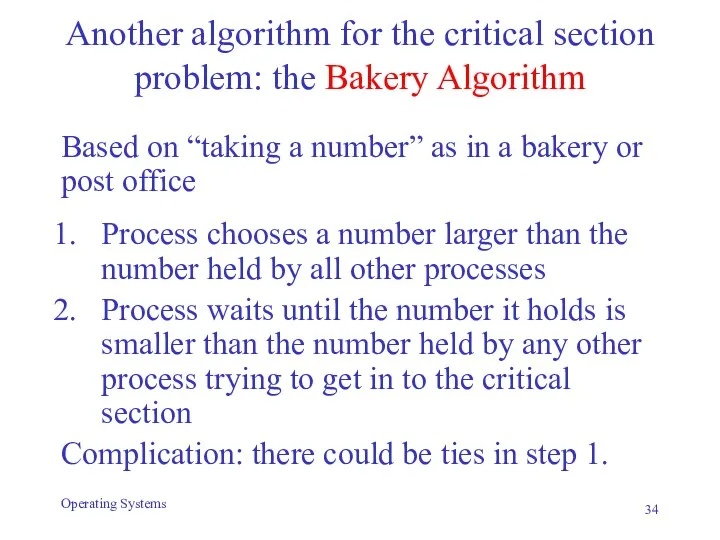

- 34. Another algorithm for the critical section problem: the Bakery Algorithm Based on “taking a number” as

- 35. Code for Bakery Algorithm int number[n]; //shared array. All entries initially set to 0 //Code for

- 36. Software solutions to CS problem Drawbacks Difficult to program and to verify Processes loop while waiting

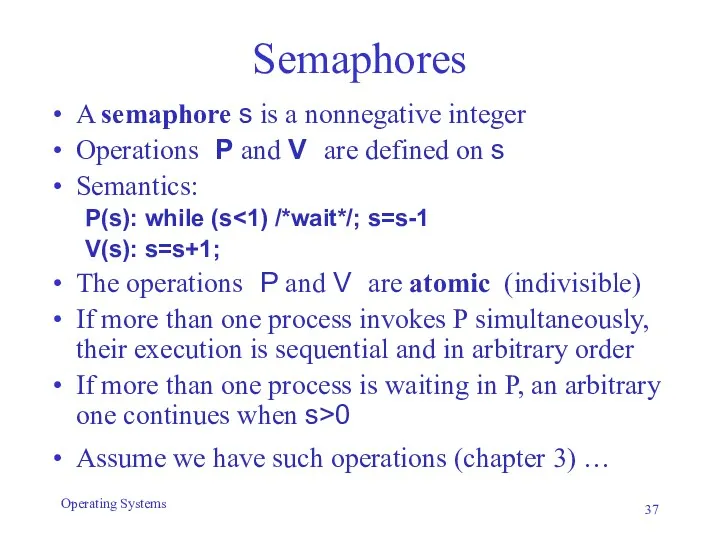

- 37. Semaphores A semaphore s is a nonnegative integer Operations P and V are defined on s

- 38. Notes on semaphores Developed by Edsger Dijkstra http://en.wikipedia.org/wiki/Edsger_W._Dijkstra Etymology: P(s): “P” from “passaren” (“pass” in Dutch)

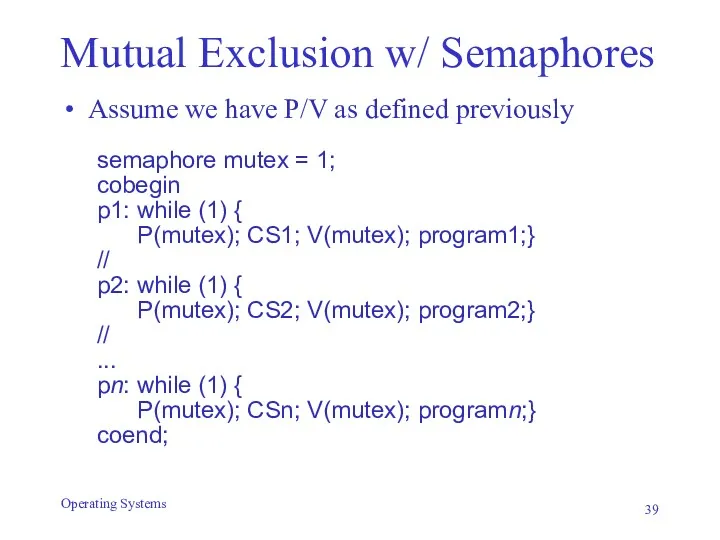

- 39. Mutual Exclusion w/ Semaphores Assume we have P/V as defined previously semaphore mutex = 1; cobegin

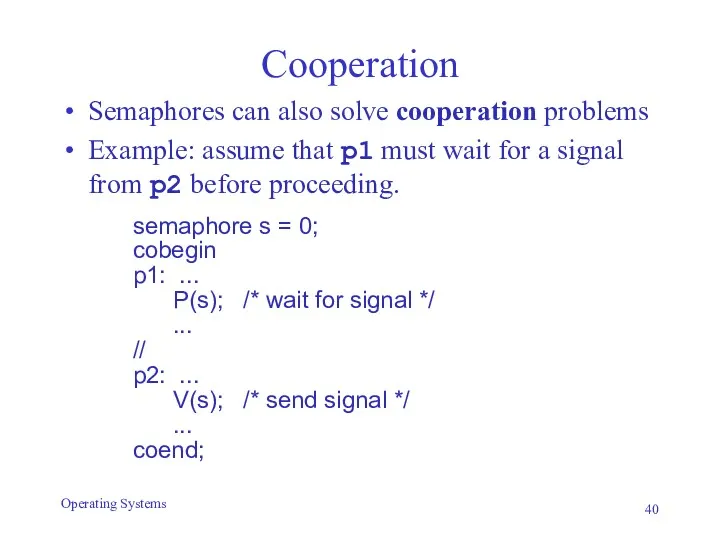

- 40. Cooperation Semaphores can also solve cooperation problems Example: assume that p1 must wait for a signal

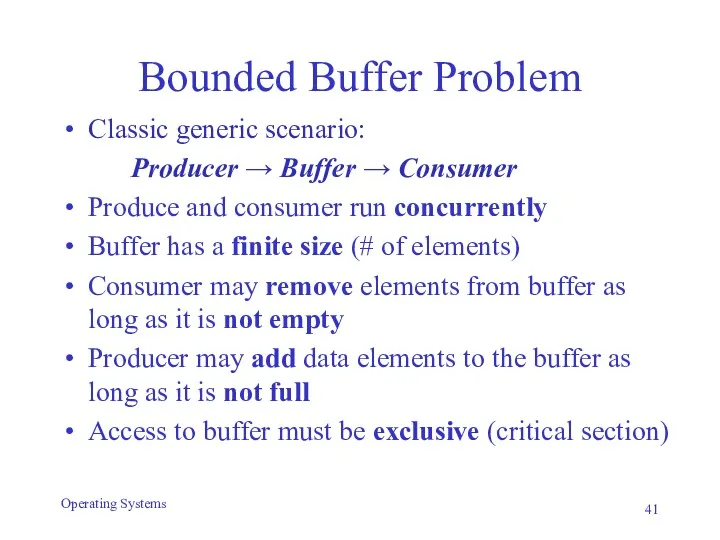

- 41. Bounded Buffer Problem Classic generic scenario: Producer → Buffer → Consumer Produce and consumer run concurrently

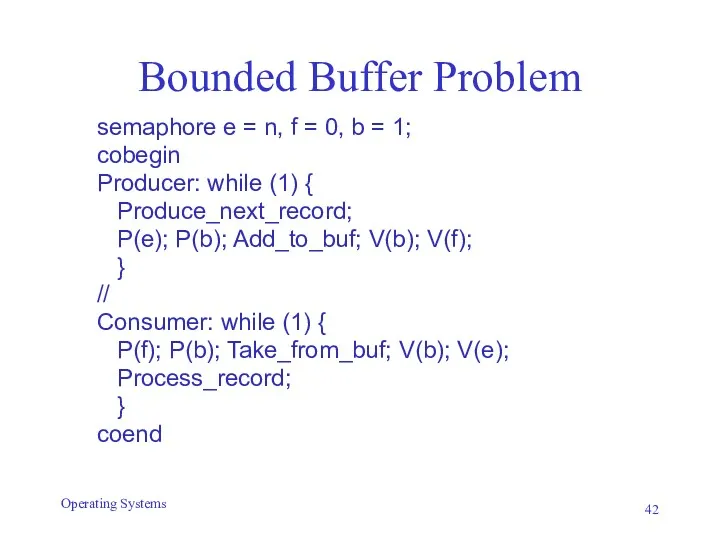

- 42. Bounded Buffer Problem semaphore e = n, f = 0, b = 1; cobegin Producer: while

- 43. Events An event designates a change in the system state that is of interest to a

- 44. Synchronous Events Process explicitly waits for occurrence of a specific event or set of events generated

- 45. Asynchronous Events Must also be defined, posted Process does not explicitly wait Process provides event handlers

- 46. Event synchronization in UNIX Processes can signal conditions using asynchronous events: kill(pid, signal) Possible signals: SIGHUP,

- 48. Скачать презентацию

![Code for Bakery Algorithm int number[n]; //shared array. All entries](/_ipx/f_webp&q_80&fit_contain&s_1440x1080/imagesDir/jpg/252255/slide-34.jpg)

Презентация Имя Д.И.Менделеева в геграфических названиях

Презентация Имя Д.И.Менделеева в геграфических названиях Мамин праздник

Мамин праздник Проектно-исследовательская работа Этапы битвы за Сталинград

Проектно-исследовательская работа Этапы битвы за Сталинград Краудфандинг

Краудфандинг Опытная эксплуатация смешанной топливной загрузки активной зоны ТВСА совместно с ТВС-WR на ЮУ АЭС

Опытная эксплуатация смешанной топливной загрузки активной зоны ТВСА совместно с ТВС-WR на ЮУ АЭС Invata sa te comporti civilizat

Invata sa te comporti civilizat First Conditional. Условное предложение 1 типа. Тест

First Conditional. Условное предложение 1 типа. Тест Стресс в профессиональной деятельности: причины и методы преодоления

Стресс в профессиональной деятельности: причины и методы преодоления Мұнай бергіштікті арттыру үшін қабатқа ыстық бу айдау

Мұнай бергіштікті арттыру үшін қабатқа ыстық бу айдау Презентация к устному журналу Это страшное слово - БЛОКАДА

Презентация к устному журналу Это страшное слово - БЛОКАДА Традиции, обряды, нормы буддизма

Традиции, обряды, нормы буддизма Пермский край-лесной край.

Пермский край-лесной край. 9 класс. Западно-Сибирский район

9 класс. Западно-Сибирский район Материалы и оборудование для капиллярного контроля сварных соединений. Капиллярный метод неразрушающего контроля

Материалы и оборудование для капиллярного контроля сварных соединений. Капиллярный метод неразрушающего контроля Предметно-развивающая среда ДОУ Особенности организации предметно-развивающей среды в разных возрастных группах

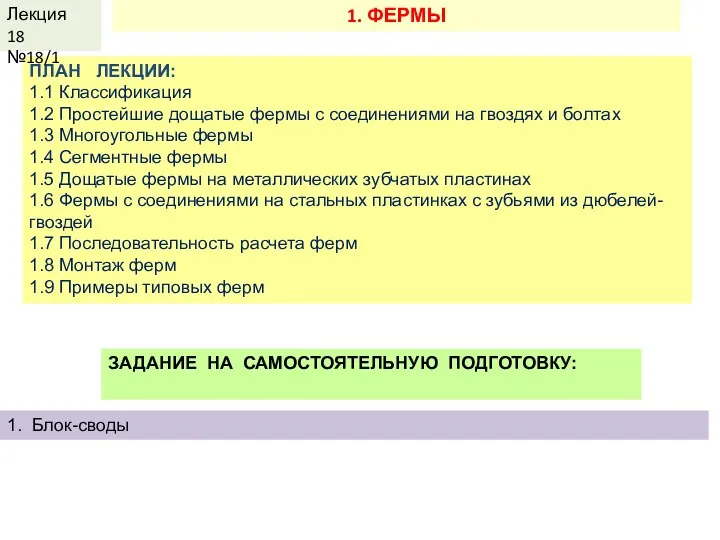

Предметно-развивающая среда ДОУ Особенности организации предметно-развивающей среды в разных возрастных группах Фермы. Классификация

Фермы. Классификация Государственный строй и устройство стран мира.

Государственный строй и устройство стран мира. урок технологии Лесные жители

урок технологии Лесные жители SecurityBand Kvirin KPDA

SecurityBand Kvirin KPDA Chiny – najludniejszy kraj świata

Chiny – najludniejszy kraj świata Общие сведения о каналах утечки информации, обрабатываемой ТСПИ, через ПЭМИН

Общие сведения о каналах утечки информации, обрабатываемой ТСПИ, через ПЭМИН Праздничные концерты для любимых женщин. Фотоальбом

Праздничные концерты для любимых женщин. Фотоальбом По пути Христа

По пути Христа ПРЕЗЕНТАЦИЯ Самообразование педагогов в ДОУ

ПРЕЗЕНТАЦИЯ Самообразование педагогов в ДОУ Оценка и аттестация персонала. Высвобождение персонала. Тема 5

Оценка и аттестация персонала. Высвобождение персонала. Тема 5 20231227_apk

20231227_apk Развитие коммуникативных умений и навыков у учащихся

Развитие коммуникативных умений и навыков у учащихся Процессы и аппараты процессов нефтегазопереработки и нефтехимии

Процессы и аппараты процессов нефтегазопереработки и нефтехимии