Содержание

- 2. Migrating from Streams to GoldenGate12c Tech15.UKOUG Birmingham 7th of December,2015 Zbigniew Baranowski, CERN IT-DB

- 3. About Zbigniew Joined CERN in 2009 Developer Database Administrator & Service Manager Responsible for Engineering &

- 4. Outline Database replication@CERN - overview Why GoldenGate? Preparation for the migration Migration Summary

- 5. About CERN CERN - European Laboratory for Particle Physics Founded in 1954 by 12 countries for

- 6. LHC is the world’s largest particle accelerator LHC = Large Hadron Collider 27km ring of superconducting

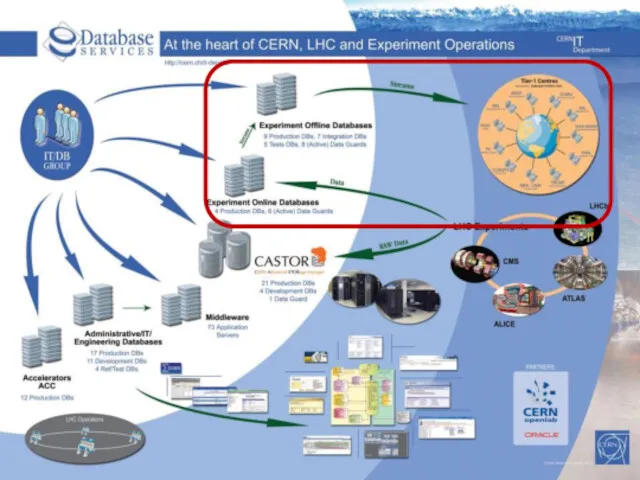

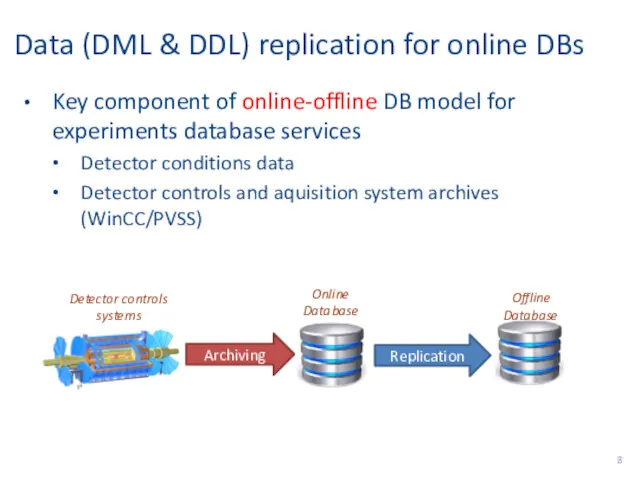

- 8. Data (DML & DDL) replication for online DBs Key component of online-offline DB model for experiments

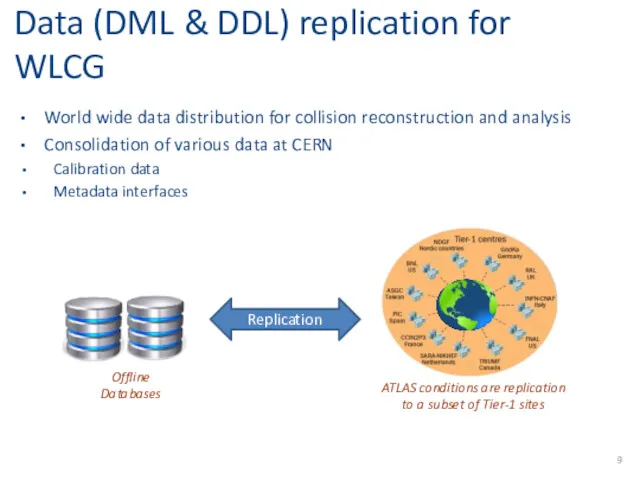

- 9. Data (DML & DDL) replication for WLCG World wide data distribution for collision reconstruction and analysis

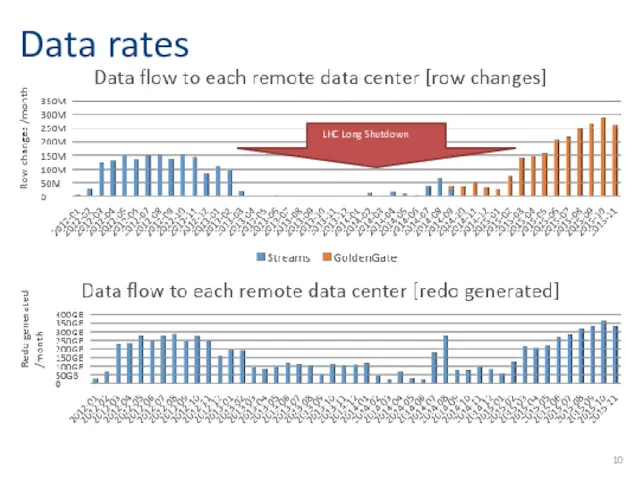

- 10. Data rates LHC Long Shutdown

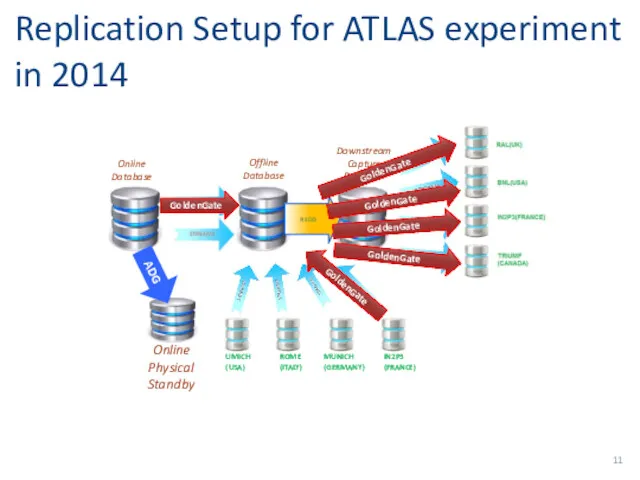

- 11. Replication Setup for ATLAS experiment in 2014 REDO UMICH (USA) ROME (ITALY) MUNICH (GERMANY) IN2P3 (FRANCE)

- 12. Why GoldenGate?

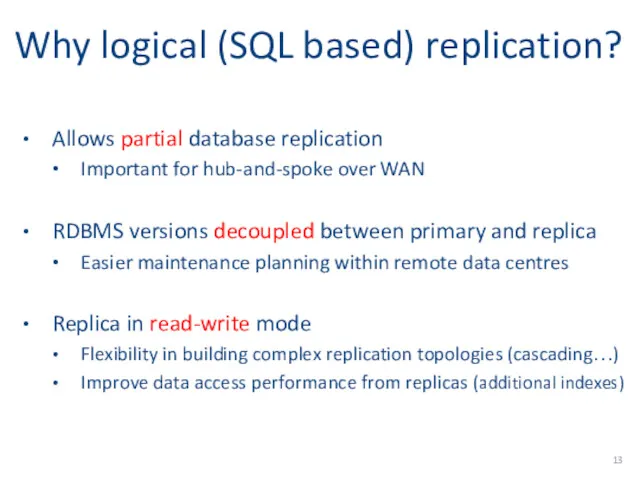

- 13. Why logical (SQL based) replication? Allows partial database replication Important for hub-and-spoke over WAN RDBMS versions

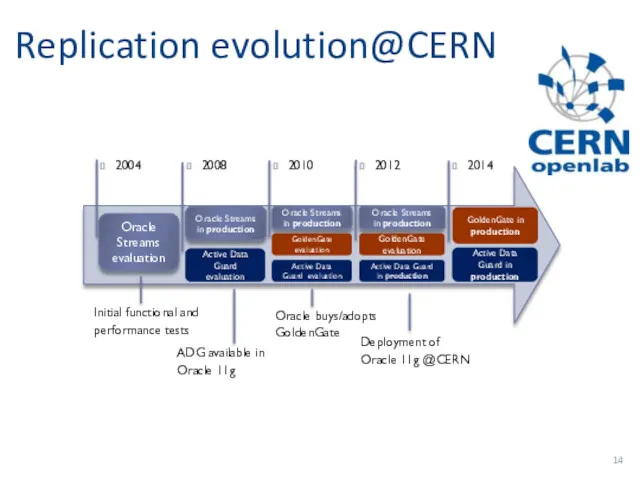

- 14. Replication evolution@CERN Oracle Streams in production Active Data Guard evaluation Oracle Streams evaluation Oracle Streams in

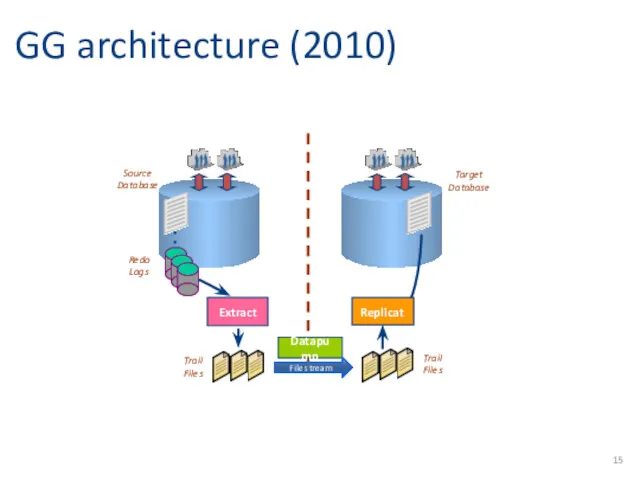

- 15. GG architecture (2010) Redo Logs Datapump Target Database Source Database Extract Replicat Trail Files Trail Files

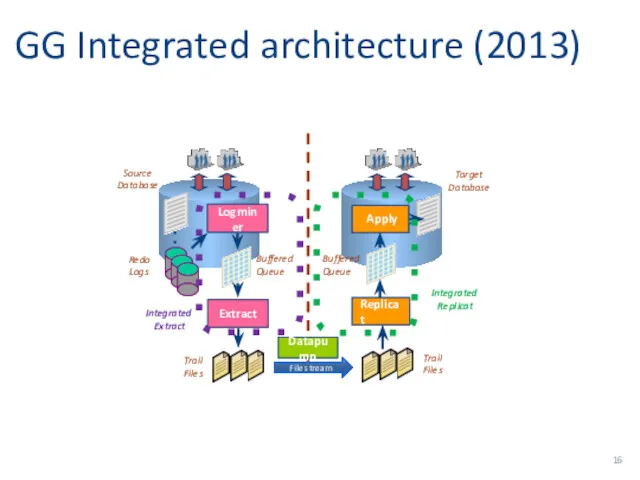

- 16. GG Integrated architecture (2013) Redo Logs Datapump Target Database Source Database Extract Replicat Trail Files Logminer

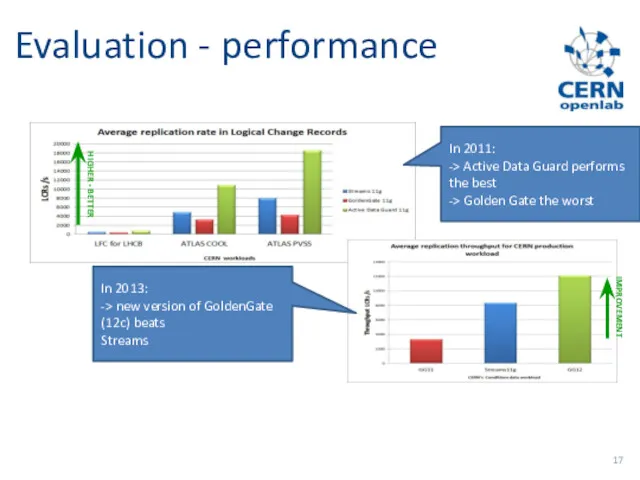

- 17. Evaluation - performance In 2011: -> Active Data Guard performs the best -> Golden Gate the

- 18. Streams vs GoldenGate Streams in 11g are mature and reliable but will not be enhanced! Oracle

- 19. Testing and validation Performance tests on synthetic data Easy to establish Are good for benchmarking Not

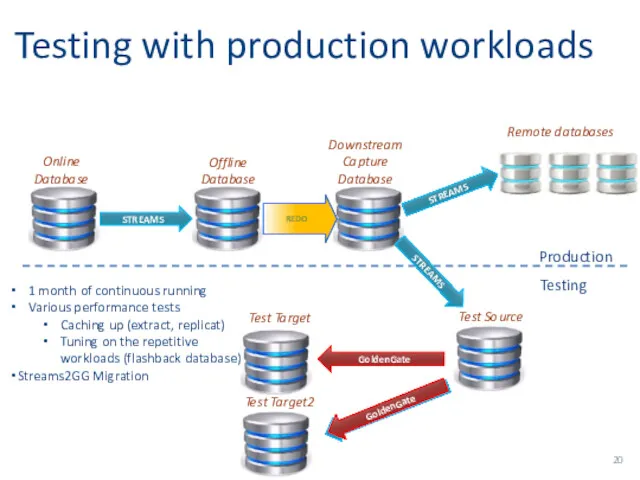

- 20. Testing with production workloads REDO Online Database Offline Database Downstream Capture Database Test Target STREAMS STREAMS

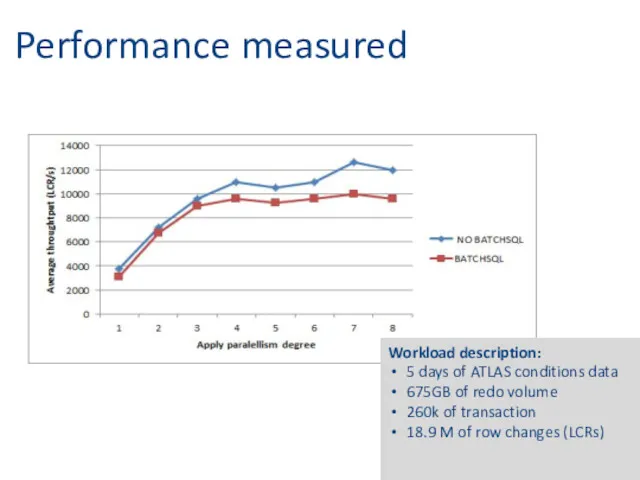

- 21. Performance measured Workload description: 5 days of ATLAS conditions data 675GB of redo volume 260k of

- 22. Ok, Lets migrate…

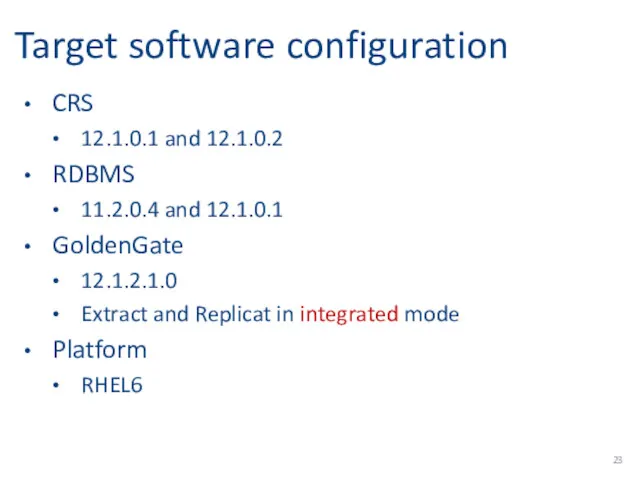

- 23. Target software configuration CRS 12.1.0.1 and 12.1.0.2 RDBMS 11.2.0.4 and 12.1.0.1 GoldenGate 12.1.2.1.0 Extract and Replicat

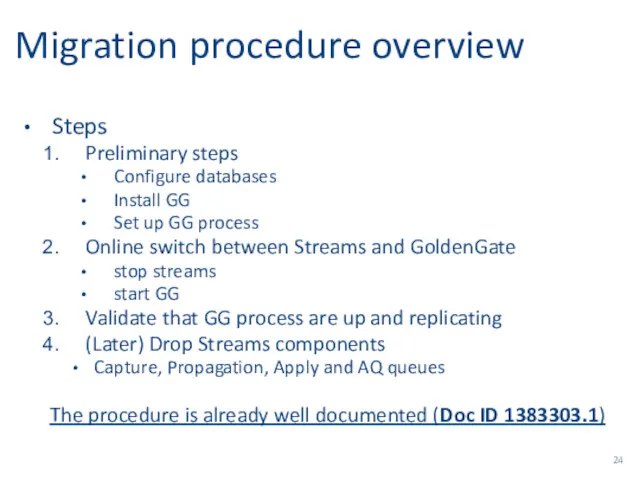

- 24. Migration procedure overview Steps Preliminary steps Configure databases Install GG Set up GG process Online switch

- 25. Preparation

- 26. Preliminary steps Database preparation Install Golden Gate Allocate the storage for GG homes and trails Get

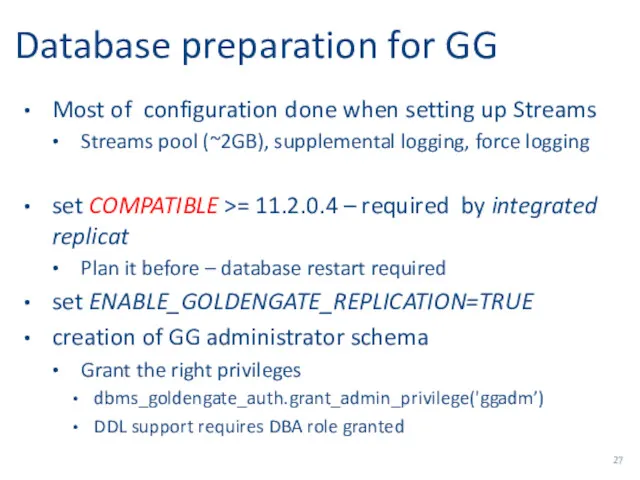

- 27. Database preparation for GG Most of configuration done when setting up Streams Streams pool (~2GB), supplemental

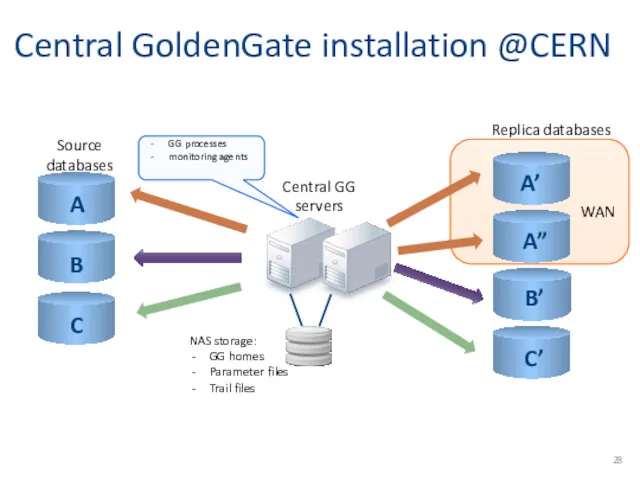

- 28. WAN Central GoldenGate installation @CERN A B C A’ A” B’ C’ Central GG servers Source

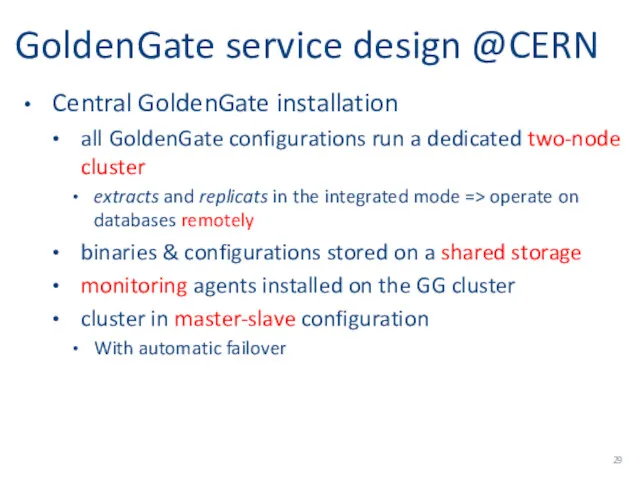

- 29. GoldenGate service design @CERN Central GoldenGate installation all GoldenGate configurations run a dedicated two-node cluster extracts

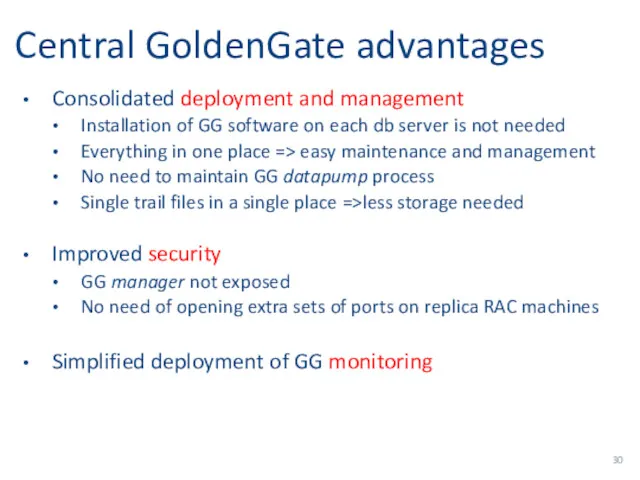

- 30. Central GoldenGate advantages Consolidated deployment and management Installation of GG software on each db server is

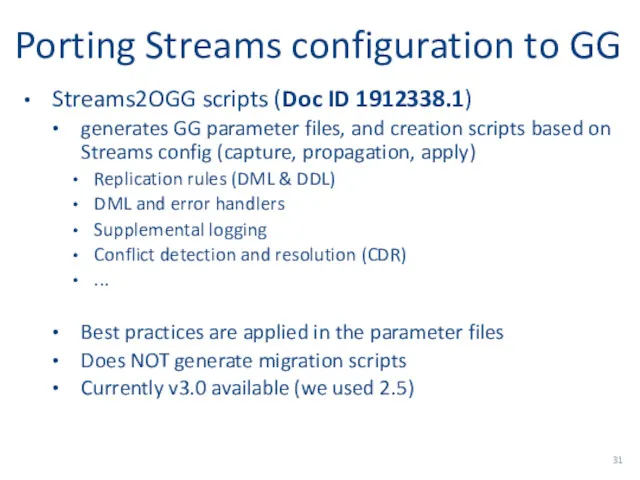

- 31. Porting Streams configuration to GG Streams2OGG scripts (Doc ID 1912338.1) generates GG parameter files, and creation

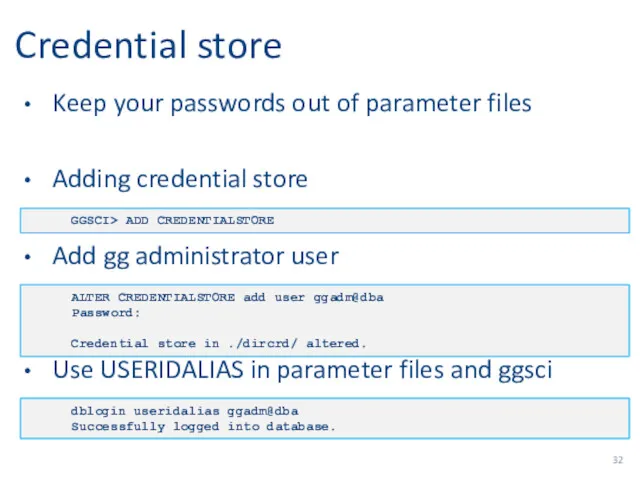

- 32. Credential store Keep your passwords out of parameter files Adding credential store Add gg administrator user

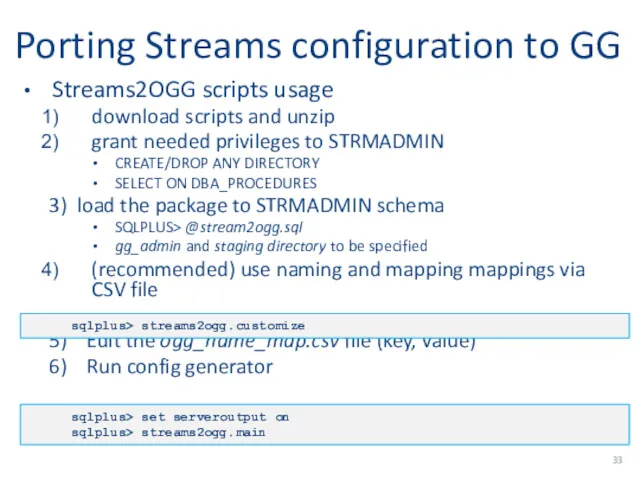

- 33. Porting Streams configuration to GG Streams2OGG scripts usage download scripts and unzip grant needed privileges to

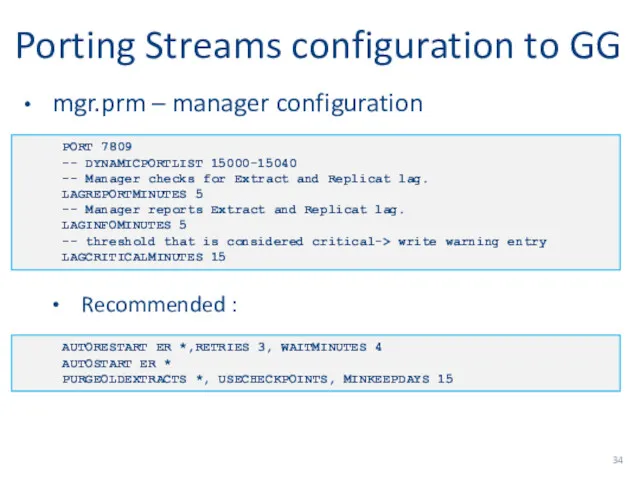

- 34. Porting Streams configuration to GG mgr.prm – manager configuration Recommended : PORT 7809 -- DYNAMICPORTLIST 15000-15040

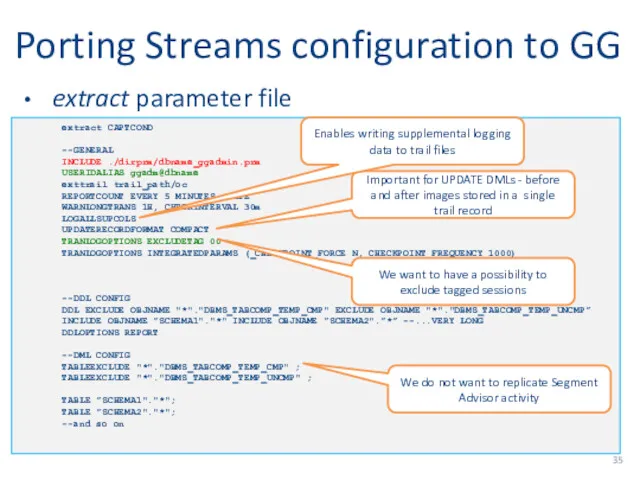

- 35. Porting Streams configuration to GG extract parameter file Worth adding to manager config: extract CAPTCOND --GENERAL

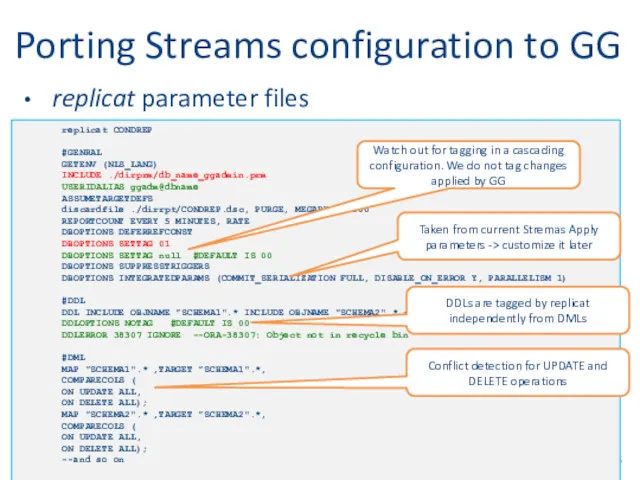

- 36. Porting Streams configuration to GG replicat parameter files replicat CONDREP #GENRAL GETENV (NLS_LANG) INCLUDE ./dirprm/db_name_ggadmin.prm USERIDALIAS

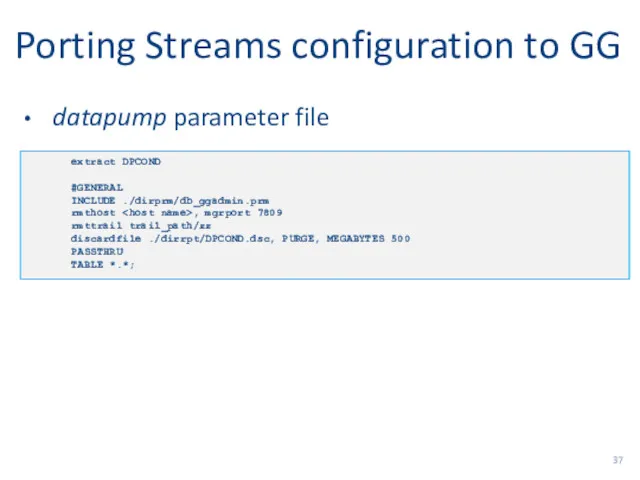

- 37. Porting Streams configuration to GG datapump parameter file extract DPCOND #GENERAL INCLUDE ./dirprm/db_ggadmin.prm rmthost , mgrport

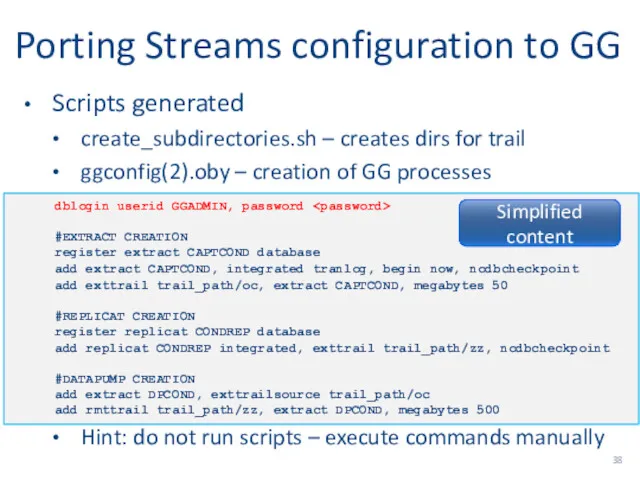

- 38. Porting Streams configuration to GG Scripts generated create_subdirectories.sh – creates dirs for trail ggconfig(2).oby – creation

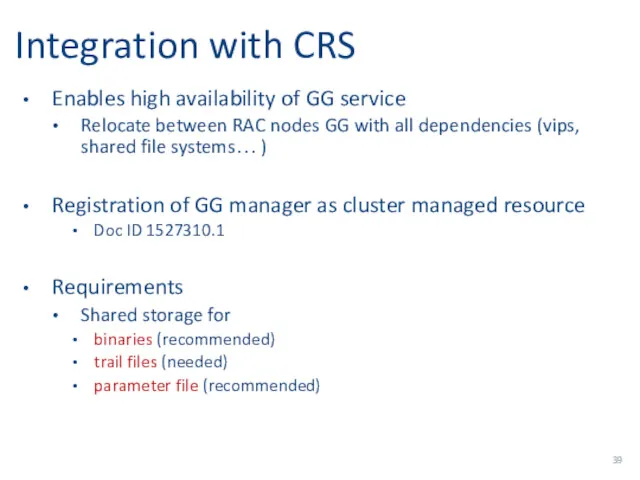

- 39. Integration with CRS Enables high availability of GG service Relocate between RAC nodes GG with all

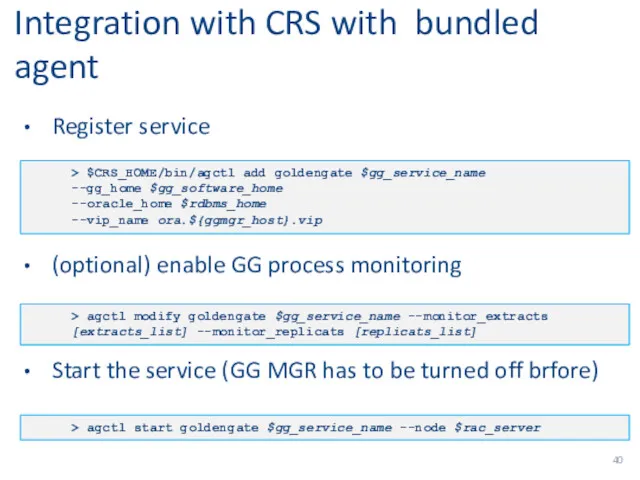

- 40. Integration with CRS with bundled agent Register service (optional) enable GG process monitoring Start the service

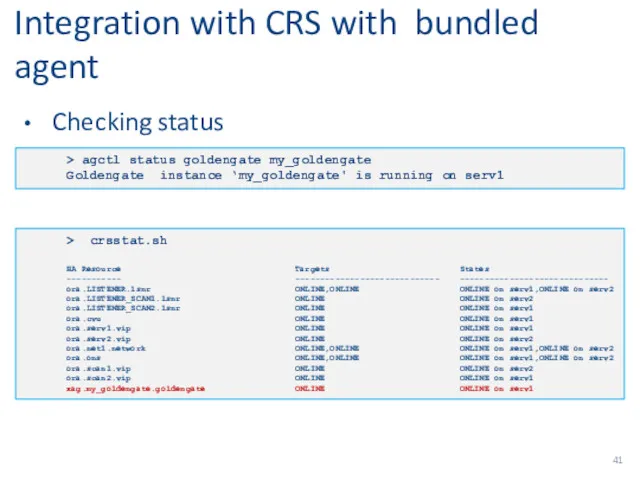

- 41. Integration with CRS with bundled agent Checking status > agctl status goldengate my_goldengate Goldengate instance ‘my_goldengate'

- 42. Switching from Streams to Goldenagte

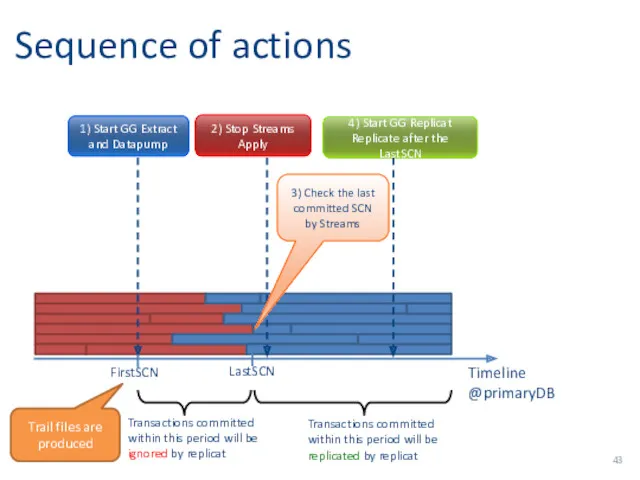

- 43. Sequence of actions 1) Start GG Extract and Datapump Timeline @primaryDB 2) Stop Streams Apply 4)

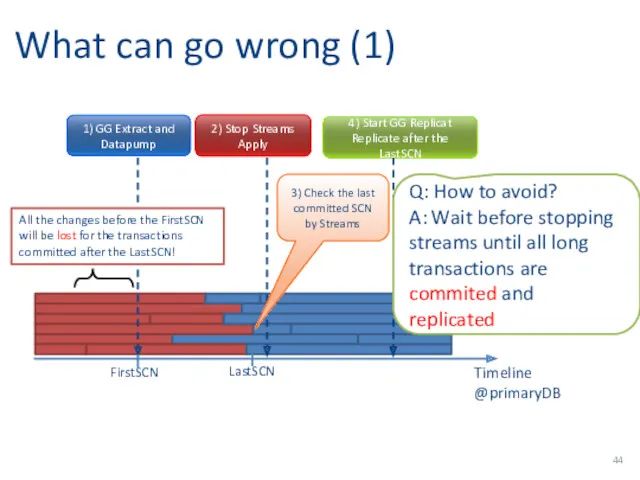

- 44. What can go wrong (1) 1) GG Extract and Datapump 2) Stop Streams Apply 4) Start

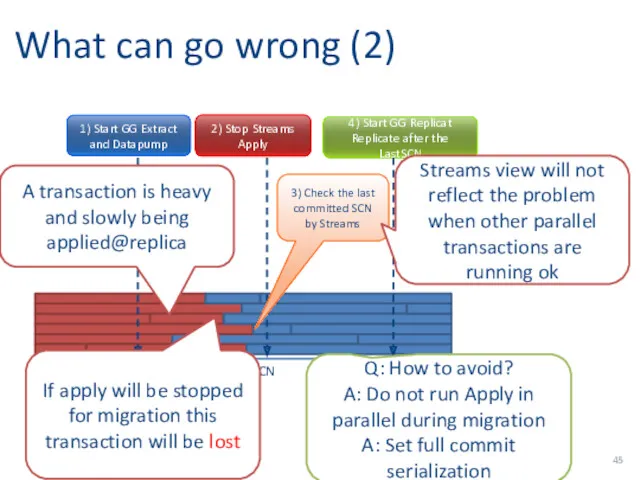

- 45. What can go wrong (2) 2) Stop Streams Apply 4) Start GG Replicat Replicate after the

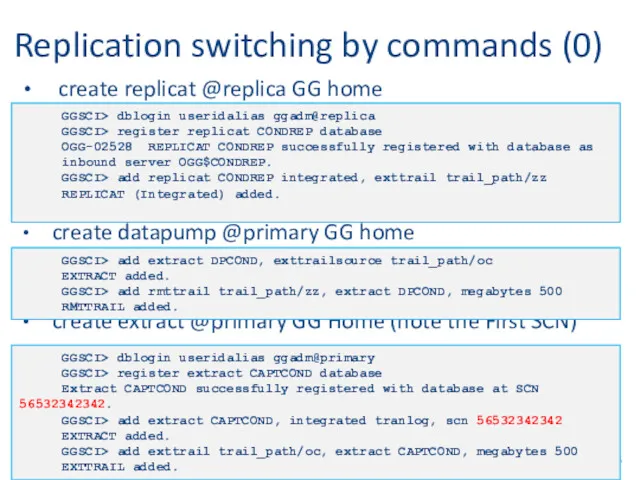

- 46. Replication switching by commands (0) create replicat @replica GG home create datapump @primary GG home create

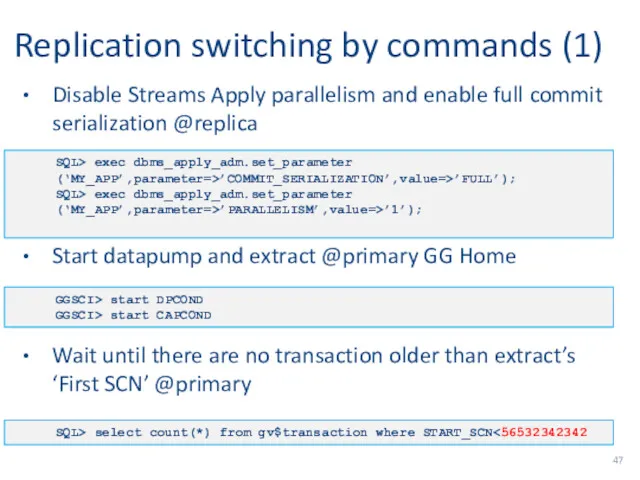

- 47. Replication switching by commands (1) Disable Streams Apply parallelism and enable full commit serialization @replica Start

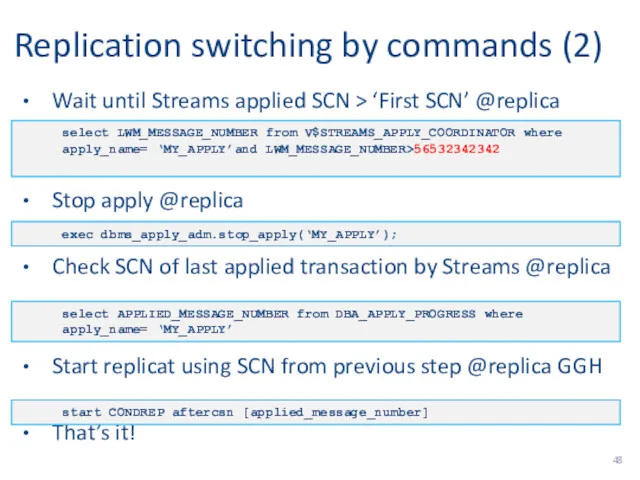

- 48. Replication switching by commands (2) Wait until Streams applied SCN > ‘First SCN’ @replica Stop apply

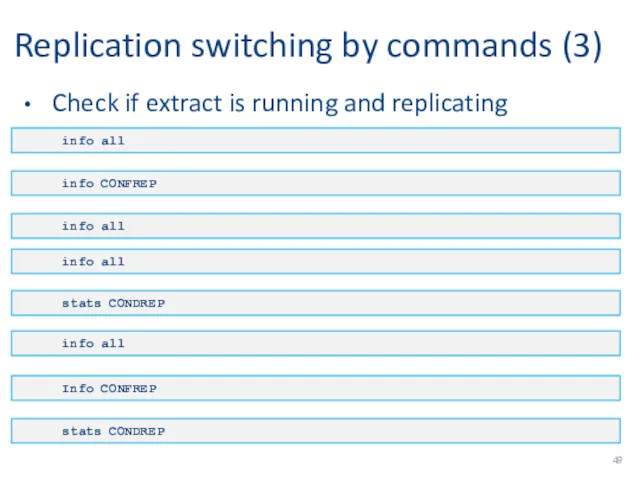

- 49. Replication switching by commands (3) Check if extract is running and replicating info all info CONFREP

- 50. Data consistency validation What are the options select…minus…select@source…? If one small table Compare and converge…? If

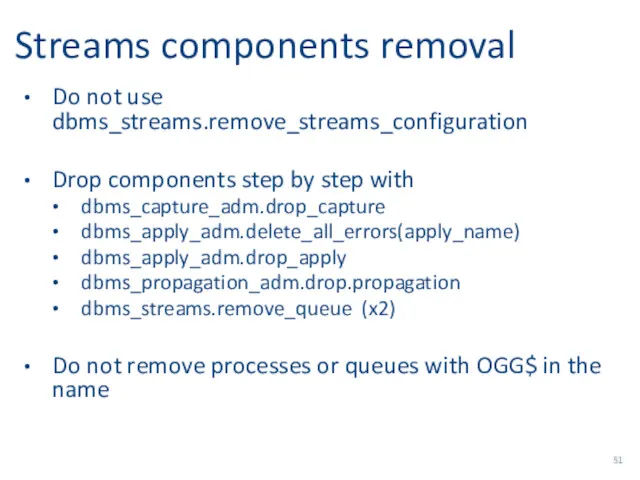

- 51. Streams components removal Do not use dbms_streams.remove_streams_configuration Drop components step by step with dbms_capture_adm.drop_capture dbms_apply_adm.delete_all_errors(apply_name) dbms_apply_adm.drop_apply

- 52. After the migration

- 53. How do we monitor GG Director for the central GG cluster Lag and status of Extracts

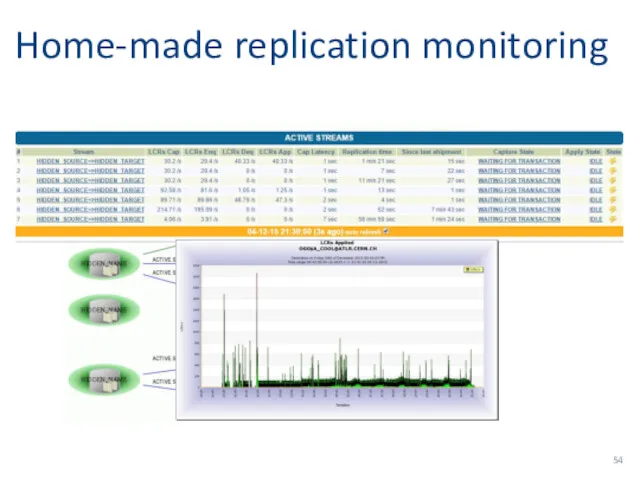

- 54. Home-made replication monitoring

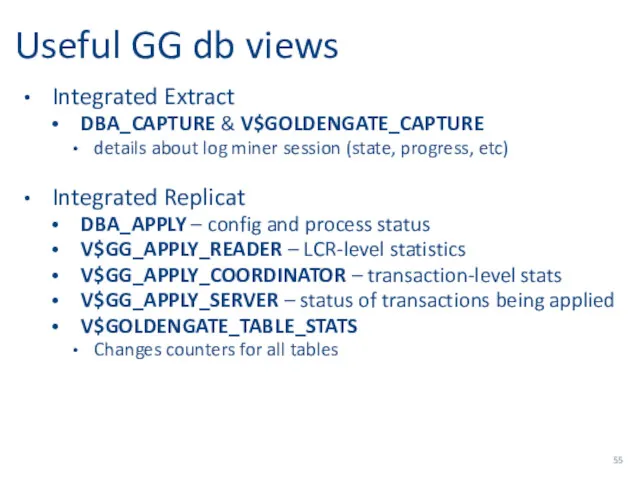

- 55. Useful GG db views Integrated Extract DBA_CAPTURE & V$GOLDENGATE_CAPTURE details about log miner session (state, progress,

- 56. Problems with integrated GG (so far) No major issues so far! Network glitches are not well

- 57. Some best practices http://www.oracle.com/technetwork/database/availability/maa-gg-performance-1969630.pdf Use heartbeat table to validate replication Do not use system generated names

- 58. Future plans Move GG monitoring to EM (12.1.0.3.0) Automatic hang detections and handling Upgrade to GG

- 59. Summary Replication technology evolution at CERN: Oracle Streams (initial solution) was replaced by Golden Gate12c and

- 60. Acknowledgments CERN IT-DB group Especially: Lorena Lobato Pardavila, Eva Dafonte Perez Oracle (via the Openlab partnership)

- 62. Скачать презентацию

Источники света. Распространение света

Источники света. Распространение света Electromagnetic Waves Scale. Uses of Electromagnetic Waves

Electromagnetic Waves Scale. Uses of Electromagnetic Waves Уравнение состояния реального газа Ван-Дер-Ваальса. (Лекция 11)

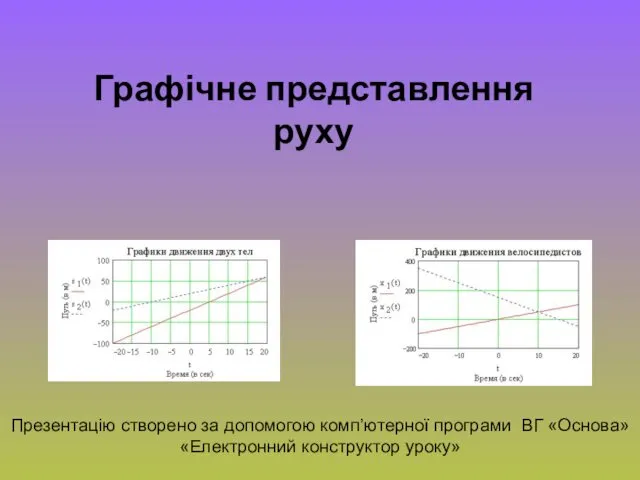

Уравнение состояния реального газа Ван-Дер-Ваальса. (Лекция 11) Графічне представлення руху

Графічне представлення руху Из истории радиоэлектроники

Из истории радиоэлектроники Сила Лоренца

Сила Лоренца Физика ЕГЭ. Задание 14

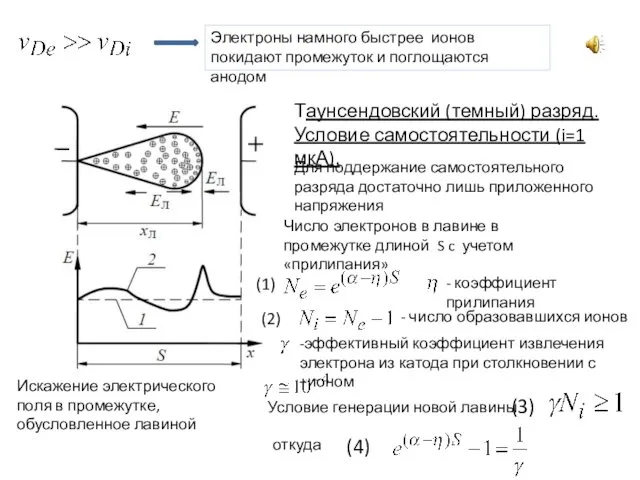

Физика ЕГЭ. Задание 14 Пробой диэлектриков. (Лекция 2)

Пробой диэлектриков. (Лекция 2) ”Графическое представление движения”

”Графическое представление движения” Термоядерная реакция

Термоядерная реакция Повітря і його функції

Повітря і його функції Цели и задачи технической диагностики. Основные понятия, термины и определения диагностики

Цели и задачи технической диагностики. Основные понятия, термины и определения диагностики Пневматический привод в строительных машинах

Пневматический привод в строительных машинах Энергия. Работа. Мощность. Законы сохранения

Энергия. Работа. Мощность. Законы сохранения Напряженность электрического поля. Урок физики в 10 классе

Напряженность электрического поля. Урок физики в 10 классе Брэйн-ринг по физике

Брэйн-ринг по физике Расчет давления жидкости на дно и стенки сосуда

Расчет давления жидкости на дно и стенки сосуда Тест. Устройство автомобилей

Тест. Устройство автомобилей Тестовые задачи для инженера-прочниста

Тестовые задачи для инженера-прочниста Первый и второй законы Ньютона

Первый и второй законы Ньютона Диагностика пневмоколесных машин

Диагностика пневмоколесных машин Быстрое преобразование Фурье. (Лекция 12)

Быстрое преобразование Фурье. (Лекция 12) Оптическая модель упругого рассеяния

Оптическая модель упругого рассеяния Ионосфера - газ молекулаларының ионизациясы жоғары

Ионосфера - газ молекулаларының ионизациясы жоғары Природа света. Законы освещенности

Природа света. Законы освещенности Тепловыделение в ядерных реакторах

Тепловыделение в ядерных реакторах История советского радио

История советского радио Laser material processing

Laser material processing