WHY ARE BIG DATA SYSTEMS DIFFERENT?

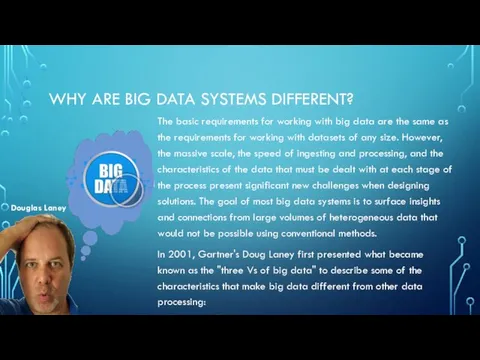

The basic requirements for working with big data

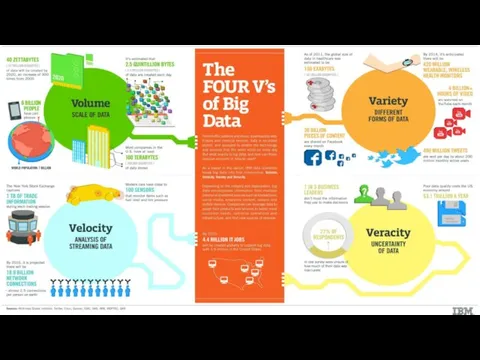

are the same as the requirements for working with datasets of any size. However, the massive scale, the speed of ingesting and processing, and the characteristics of the data that must be dealt with at each stage of the process present significant new challenges when designing solutions. The goal of most big data systems is to surface insights and connections from large volumes of heterogeneous data that would not be possible using conventional methods.

In 2001, Doug Laney, then at META Group (later acquired by Gartner), first presented what became known as the "three Vs of big data" (volume, velocity, and variety) to describe some of the characteristics that make big data different from other data processing.

Douglas Laney

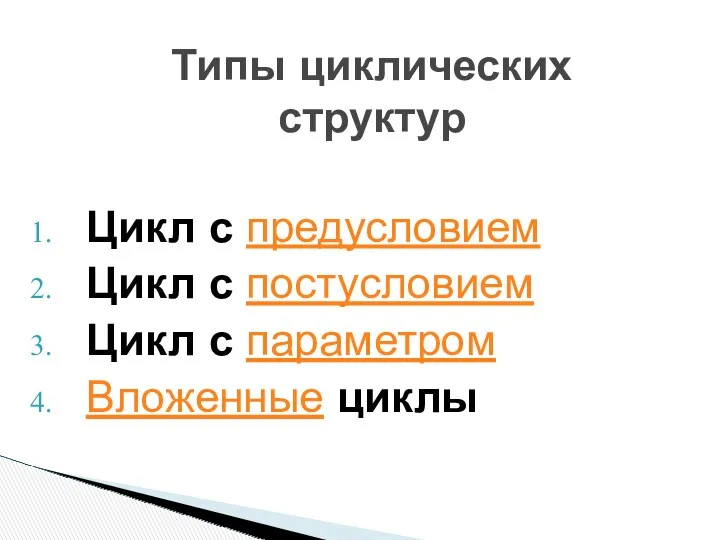
