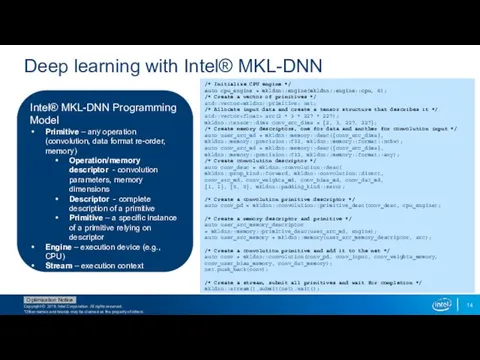

Deep learning with Intel® MKL-DNN

Intel® MKL-DNN Programming Model

Primitive – any operation (convolution, data format reorder, memory)

Operation/memory descriptor - convolution parameters, memory dimensions

Primitive descriptor – complete description of a primitive (an operation descriptor bound to an engine)

Primitive – a specific instance of an operation, created from its primitive descriptor

Engine – execution device (e.g., CPU)

Stream – execution context

/* Initialize CPU engine */

auto cpu_engine = mkldnn::engine(mkldnn::engine::cpu, 0);

/* Create a vector of primitives */

std::vector<mkldnn::primitive> net;

/* Allocate input data and create a tensor structure that describes it */

std::vector<float> src(2 * 3 * 227 * 227);

mkldnn::tensor::dims conv_src_dims = {2, 3, 227, 227};

/* Create memory descriptors, one for data and another for convolution input */

auto user_src_md = mkldnn::memory::desc({conv_src_dims},
        mkldnn::memory::precision::f32, mkldnn::memory::format::nchw);

auto conv_src_md = mkldnn::memory::desc({conv_src_dims},
        mkldnn::memory::precision::f32, mkldnn::memory::format::any);

/* Create convolution descriptor */

auto conv_desc = mkldnn::convolution::desc(
        mkldnn::prop_kind::forward, mkldnn::convolution::direct,
        conv_src_md, conv_weights_md, conv_bias_md, conv_dst_md,
        {1, 1}, {0, 0}, mkldnn::padding_kind::zero);

/* Create a convolution primitive descriptor */

auto conv_pd = mkldnn::convolution::primitive_desc(conv_desc, cpu_engine);

/* Create a memory primitive descriptor and a memory primitive */

auto user_src_memory_descriptor
        = mkldnn::memory::primitive_desc(user_src_md, cpu_engine);

auto user_src_memory = mkldnn::memory(user_src_memory_descriptor, src.data());

/* Create a convolution primitive and add it to the net */

auto conv = mkldnn::convolution(conv_pd, conv_input, conv_weights_memory,
        conv_user_bias_memory, conv_dst_memory);

net.push_back(conv);

/* Create a stream, submit all primitives and wait for completion */

mkldnn::stream().submit(net).wait();
