Contents

- 2. CHAPTER 14: Assessing and Comparing Classification Algorithms

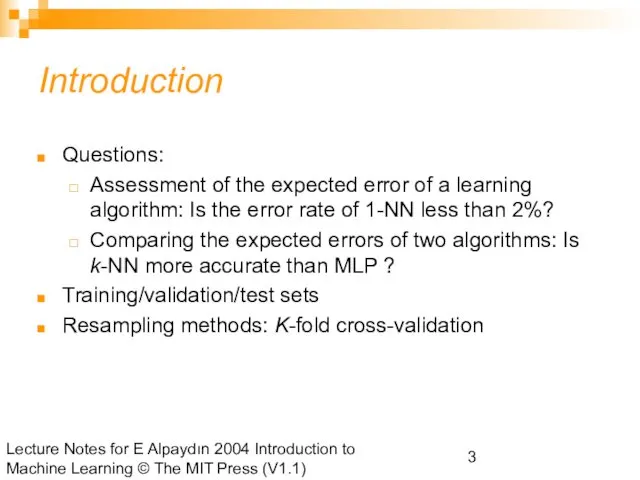

- 3. Lecture Notes for E Alpaydın 2004 Introduction to Machine Learning © The MIT Press (V1.1) Introduction

- 4. Algorithm

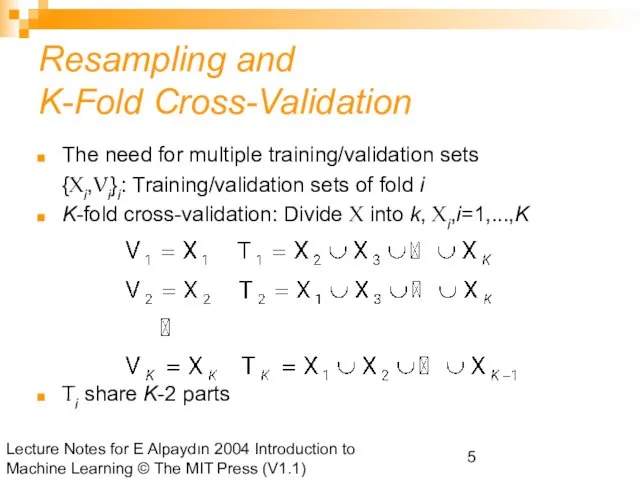

- 5. Resampling

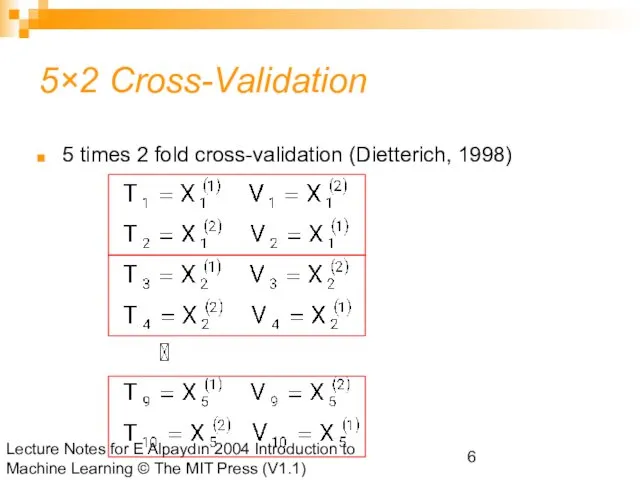

- 6. 5×2

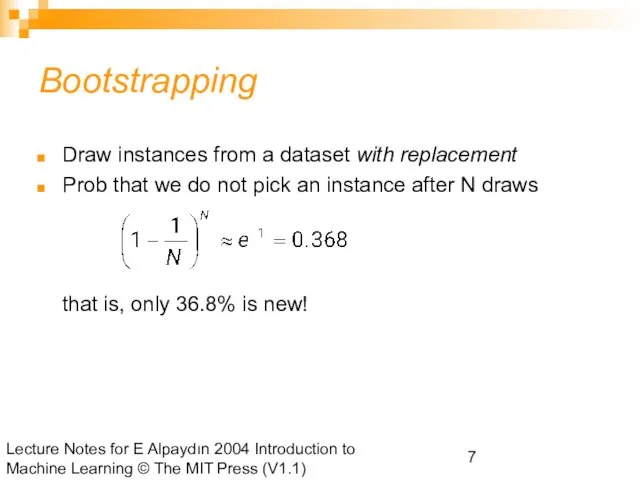

- 7. Bootstrapping

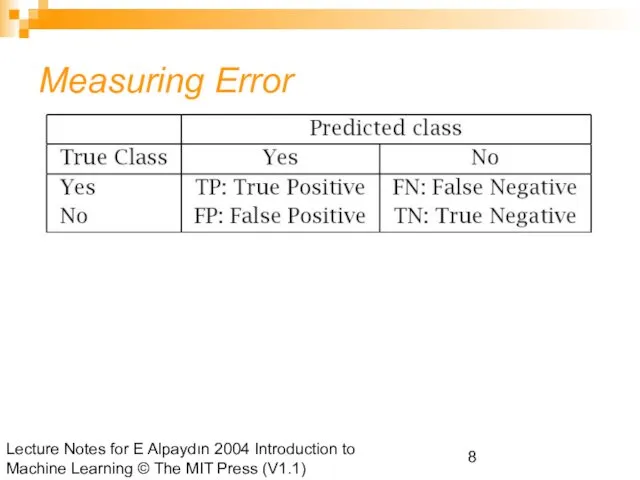

- 8. Measuring

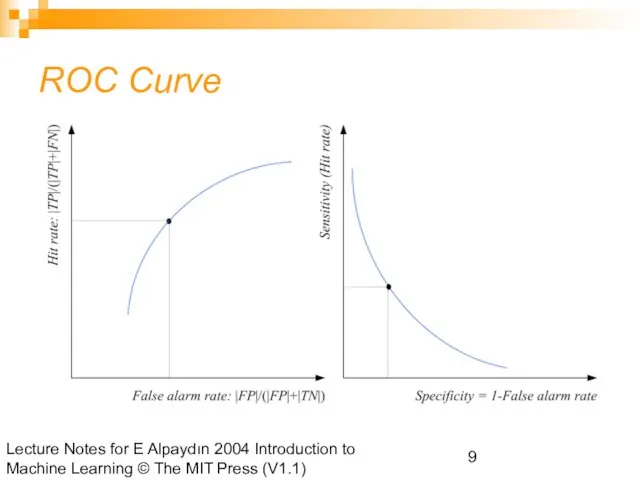

- 9. ROC

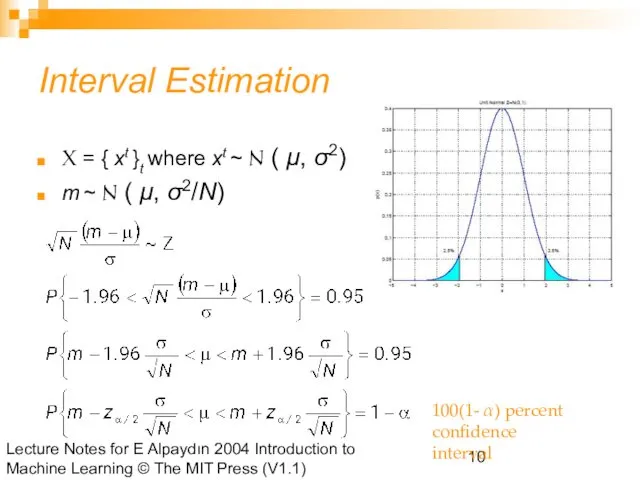

- 10. Interval

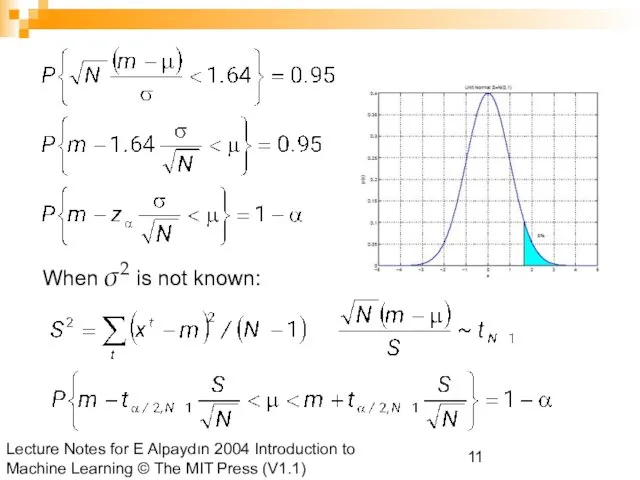

- 11. When

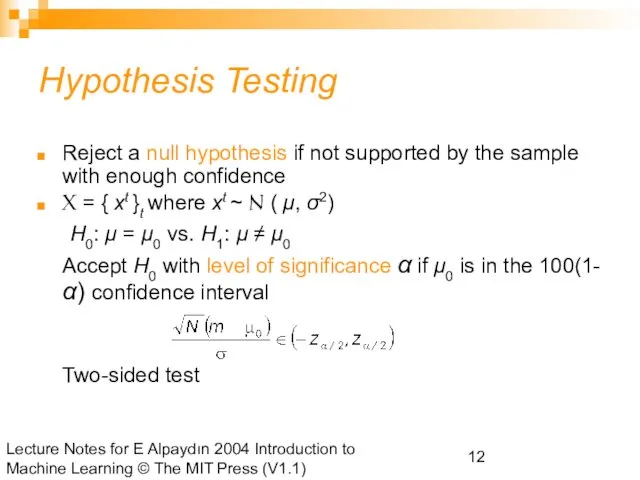

- 12. Hypothesis

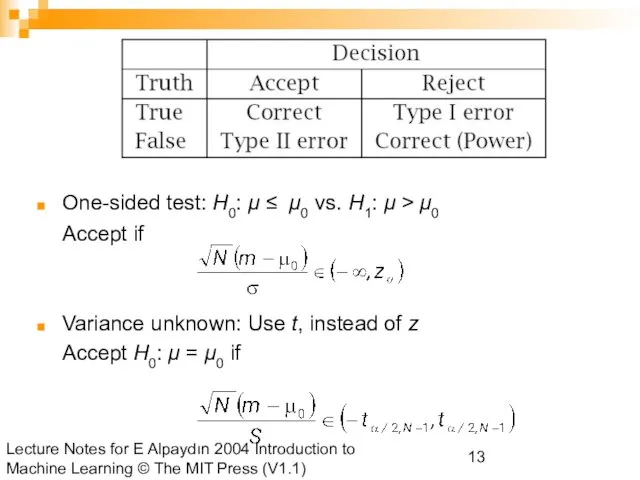

- 13. One-sided

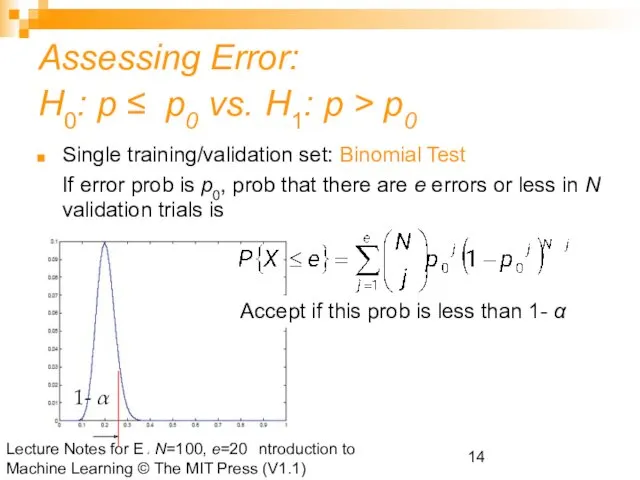

- 14. Assessing

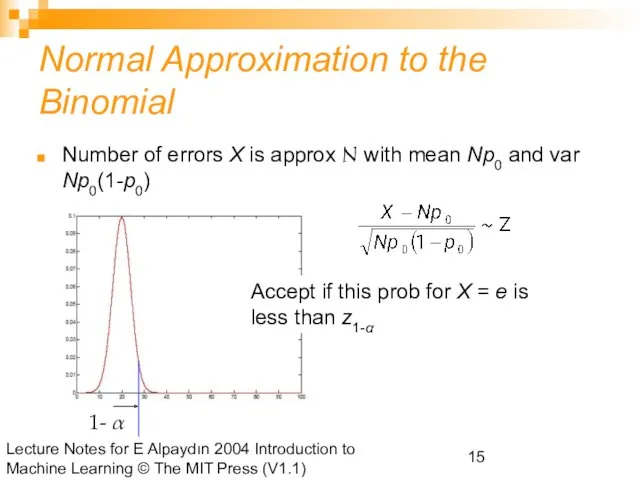

- 15. Normal

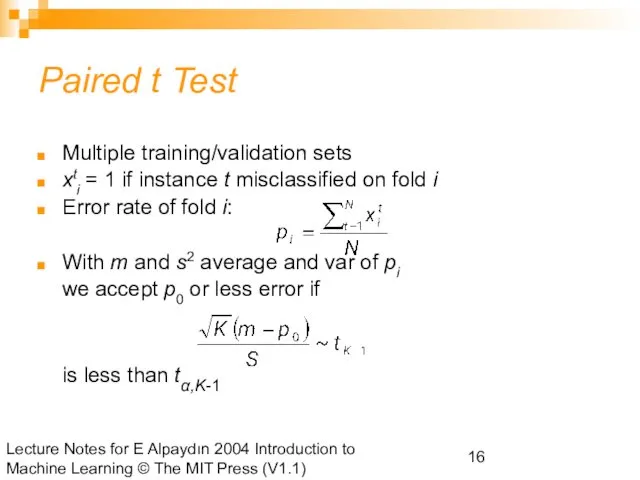

- 16. Paired

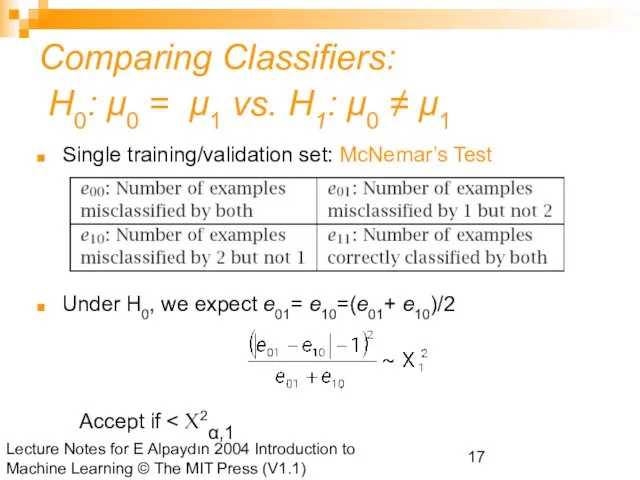

- 17. Comparing

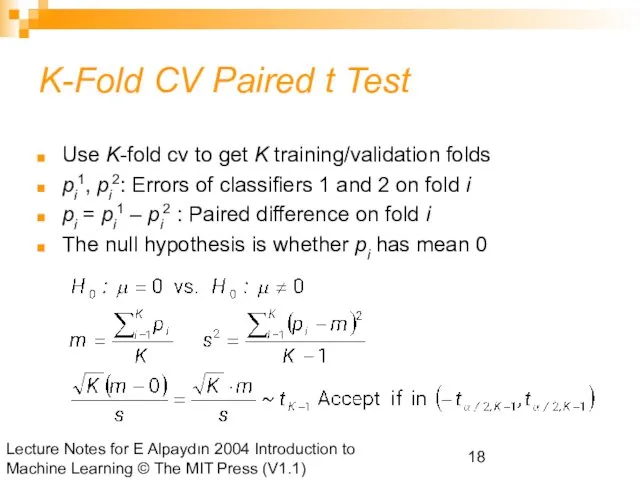

- 18. K-Fold

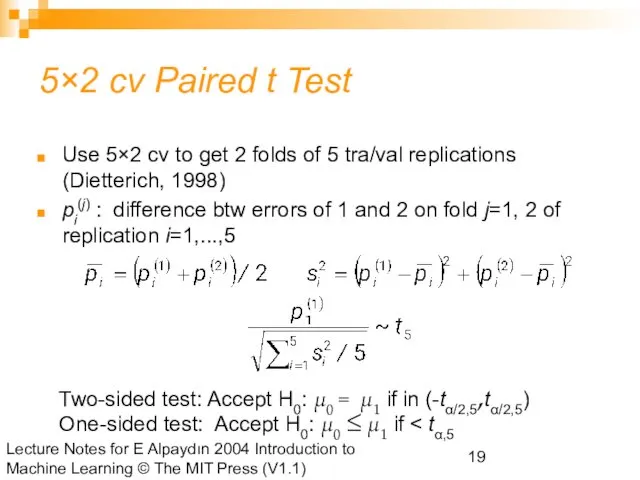

- 19. 5×2

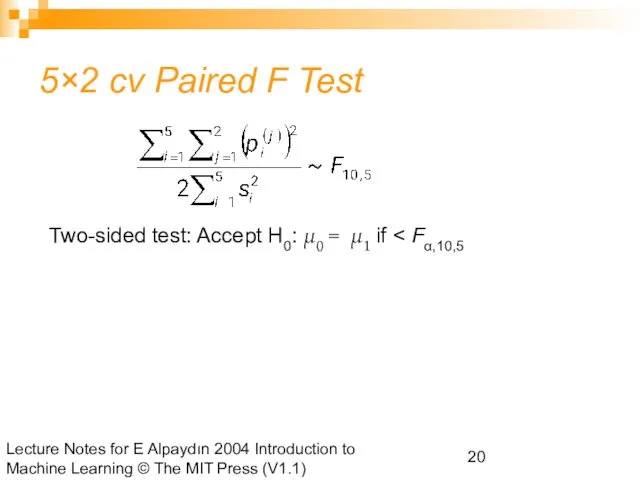

- 20. 5×2

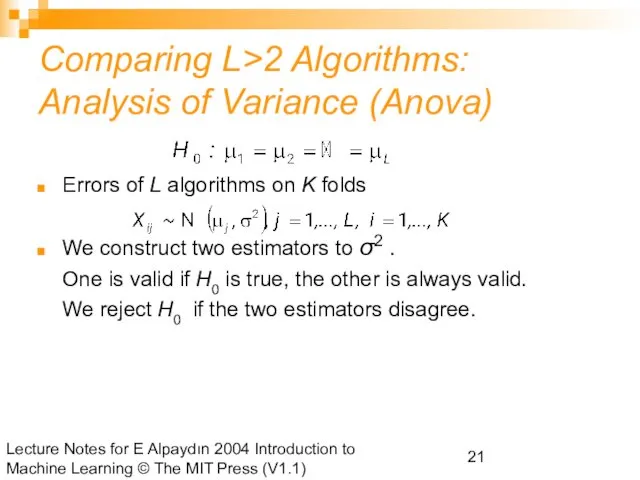

- 21. Comparing

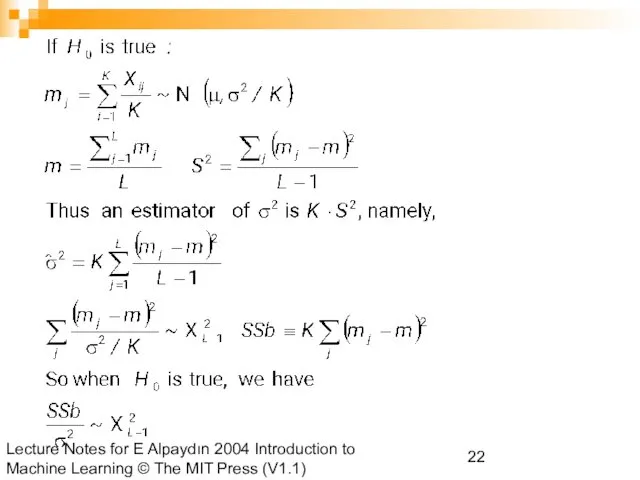

- 22.

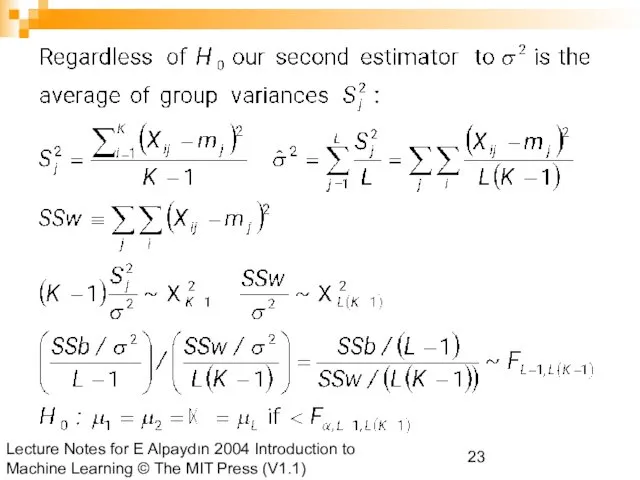

- 23.

- 25. Скачать презентацию

Сложение и вычитание смешанных чисел

Сложение и вычитание смешанных чисел Нахождение дроби от числа и числа по его дроби

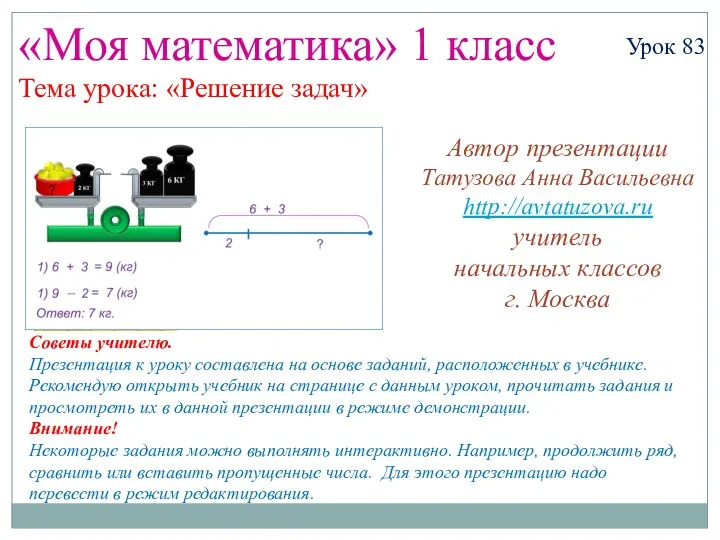

Нахождение дроби от числа и числа по его дроби Математика. 1 класс. Урок 83. Решение задач - Презентация

Математика. 1 класс. Урок 83. Решение задач - Презентация математический турнир

математический турнир Арифметический корень

Арифметический корень Случайная изменчивость (примеры). Урок 16. 7 класс

Случайная изменчивость (примеры). Урок 16. 7 класс Пространственные фигуры. Площадь, объем

Пространственные фигуры. Площадь, объем Круг. Площадь круга. Урок 88

Круг. Площадь круга. Урок 88 Числовые ряды. Общие определения и свойства. Сходимость рядов. Признаки сходимости. (Семинар 25)

Числовые ряды. Общие определения и свойства. Сходимость рядов. Признаки сходимости. (Семинар 25) Четыре замечательные точки треугольника

Четыре замечательные точки треугольника grafy1

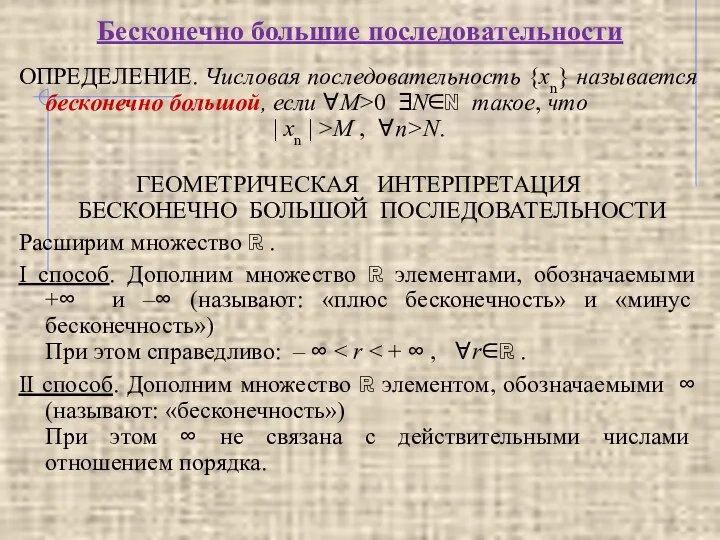

grafy1 Бесконечно большие последовательности

Бесконечно большие последовательности Урок математики по теме Узоры и орнаменты на посуде

Урок математики по теме Узоры и орнаменты на посуде Знаменитые задачи древности. 11 класс

Знаменитые задачи древности. 11 класс Формулы алгебры высказываний

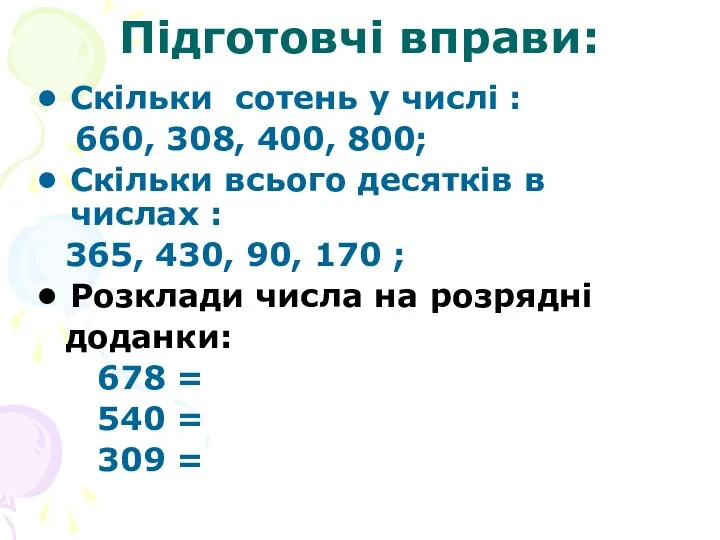

Формулы алгебры высказываний Скільки сотень у числі? Підготовчі вправи

Скільки сотень у числі? Підготовчі вправи Веселая таблица (тренажёр таблицы умножения и деления)

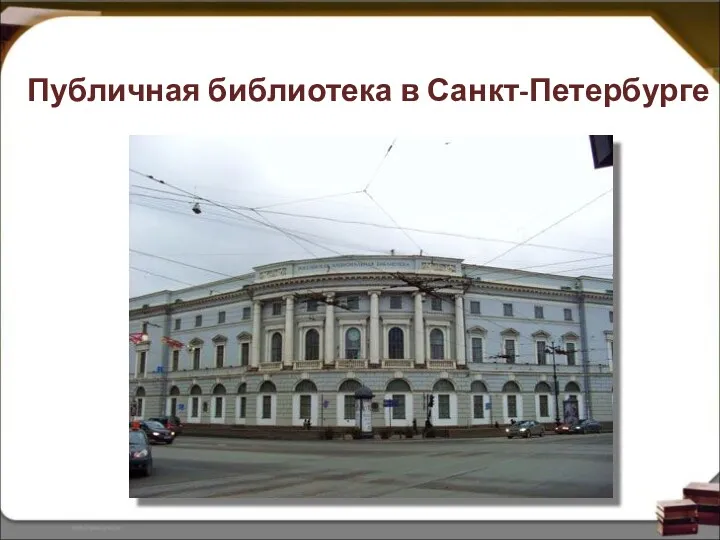

Веселая таблица (тренажёр таблицы умножения и деления) Невский проспект Санкт-Петербурга в цифрах. Публичная библиотека в Санкт-Петербурге (часть 6)

Невский проспект Санкт-Петербурга в цифрах. Публичная библиотека в Санкт-Петербурге (часть 6) Прибавление и вычитание числа 2

Прибавление и вычитание числа 2 Столбчатые диаграммы и графики

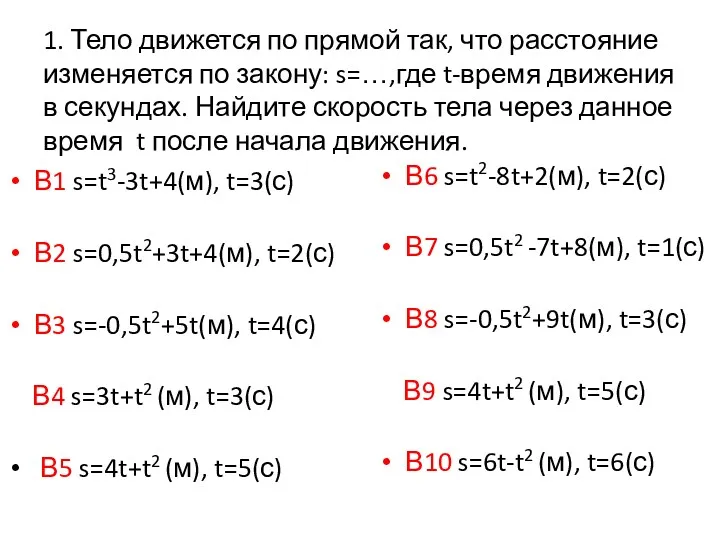

Столбчатые диаграммы и графики Начала математического анализа

Начала математического анализа Кто хочет стать отличником?

Кто хочет стать отличником? Геометрик фигуралар

Геометрик фигуралар Сложение и вычитание десятичных дробей

Сложение и вычитание десятичных дробей Радианная мера угла

Радианная мера угла Расстояние от точки до прямой. Расстояние между параллельными прямыми

Расстояние от точки до прямой. Расстояние между параллельными прямыми Презентация урока математики 2 класс. УМК Гармония.Тема урока: Решение задач.Сложение двузначных чисел.

Презентация урока математики 2 класс. УМК Гармония.Тема урока: Решение задач.Сложение двузначных чисел. Единицы длины. Дециметр

Единицы длины. Дециметр