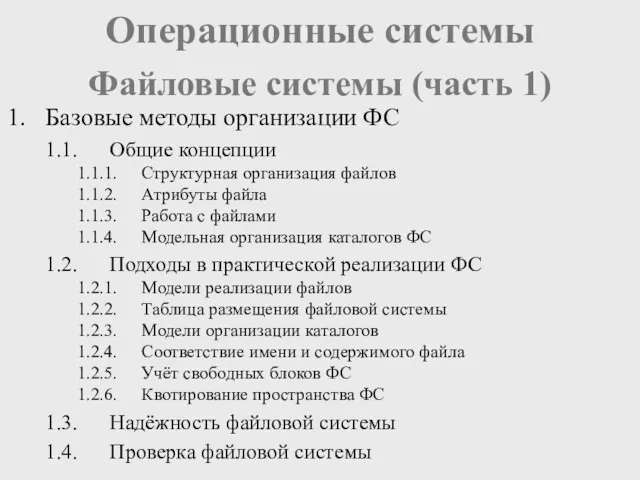

Contents

- 2. CryENGINE 3: reaching the speed of light. Anton Kaplanyan, Lead researcher at Crytek

- 3. Agenda: Texture compression improvements; Several minor improvements; Deferred shading improvements

- 4. TEXTURES Advances in Real-Time Rendering Course Siggraph 2010, Los Angeles, CA

- 5. Agenda: Texture compression improvements; Color textures; Authoring precision; Best color space; Improvements to the DXT block

- 6. Color textures: What is a color texture? Image? Albedo! What color depth is enough for a texture? 8

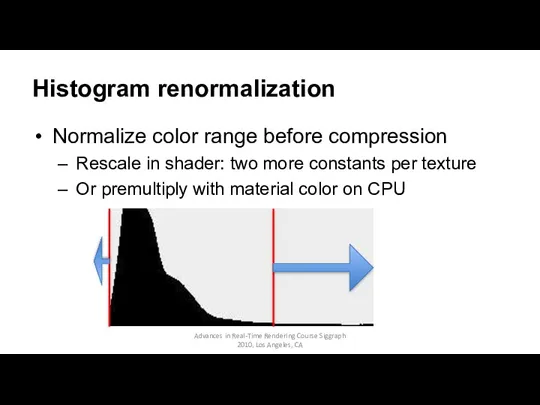

- 7. Histogram renormalization: Normalize color range before compression; Rescale in shader: two more constants per texture; Or
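The renormalization idea on this slide can be illustrated with a minimal sketch. It assumes a single channel, a plain min/max stretch, and a 32-level quantizer as a crude stand-in for the block compressor; the function names and the sample data are hypothetical and not taken from the talk.

```python
def renormalize(channel):
    """Stretch one channel to the full [0, 1] range before compression.

    Returns the stretched values plus the (scale, offset) pair that the
    shader needs to undo the stretch.
    """
    lo, hi = min(channel), max(channel)
    scale = hi - lo
    if scale == 0.0:
        # Flat channel: store zeros and restore everything from the offset.
        return [0.0 for _ in channel], (0.0, lo)
    return [(v - lo) / scale for v in channel], (scale, lo)


def quantize(values, levels=32):
    """Stand-in for the compressor: snap to `levels` evenly spaced steps."""
    return [round(v * (levels - 1)) / (levels - 1) for v in values]


def restore(stored, scale, offset):
    """Shader-side rescale: one multiply-add with two per-texture constants."""
    return [v * scale + offset for v in stored]


# A dark, low-contrast albedo channel that uses only a narrow part of [0, 1].
albedo = [0.20, 0.21, 0.23, 0.26, 0.30]

plain = quantize(albedo)
stretched, (scale, offset) = renormalize(albedo)
renormalized = restore(quantize(stretched), scale, offset)

err_plain = max(abs(a - b) for a, b in zip(albedo, plain))
err_renorm = max(abs(a - b) for a, b in zip(albedo, renormalized))
print(err_plain, err_renorm)
```

Because the quantization steps are spent only on the range the texture actually uses, the restored values land closer to the source than the directly quantized ones.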

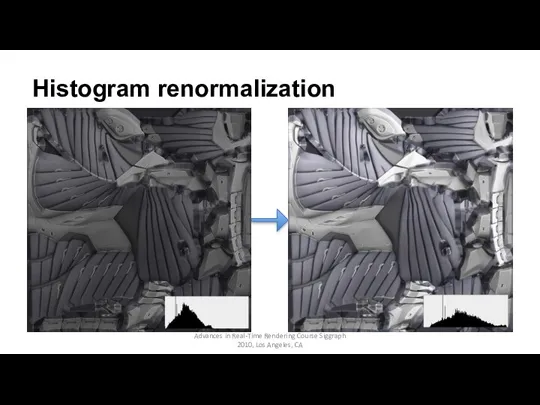

- 8. Histogram renormalization

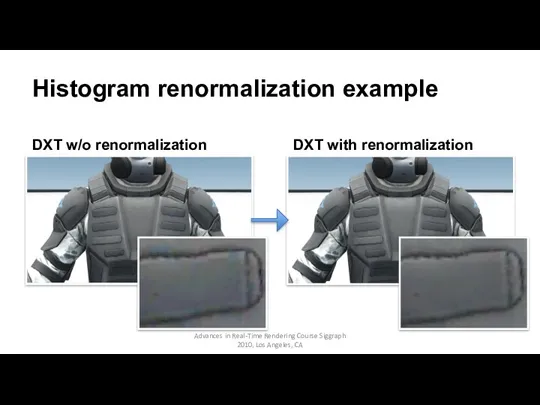

- 9. Histogram renormalization example: DXT w/o renormalization

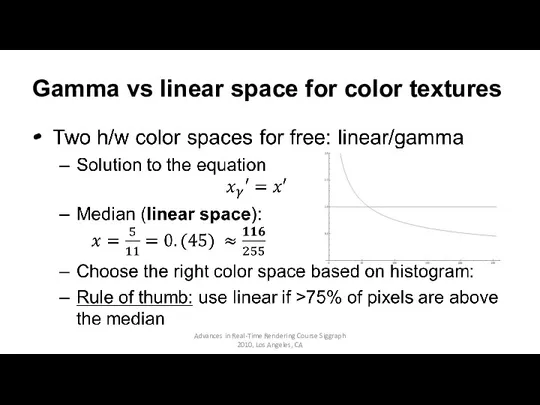

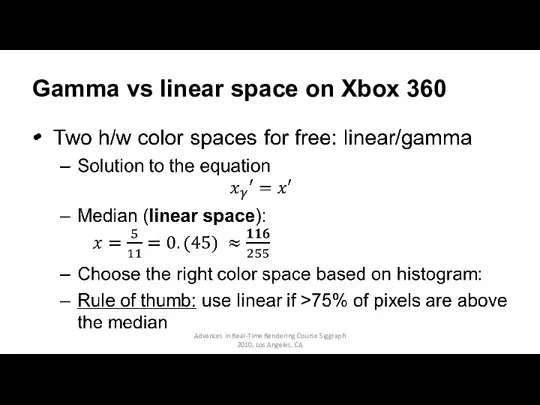

- 10. Gamma vs linear space for color textures

- 11. Gamma vs linear space on Xbox 360

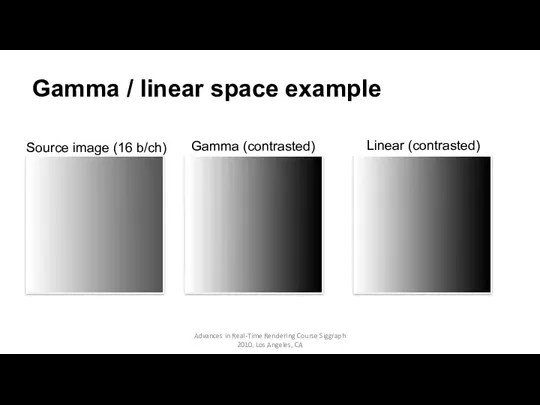

- 12. Gamma / linear space example: Source image (16 b/ch); Gamma (contrasted); Linear (contrasted)

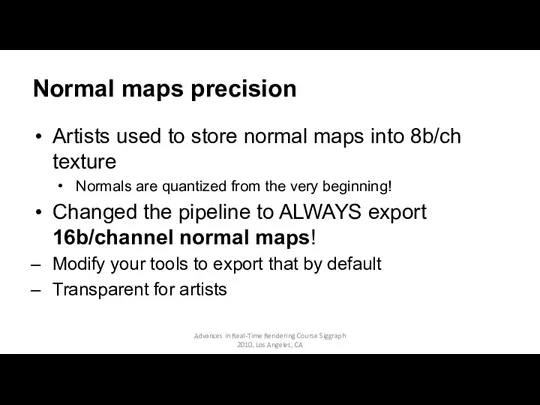

- 13. Normal maps precision: Artists used to store normal maps in 8 b/ch textures; Normals are quantized from

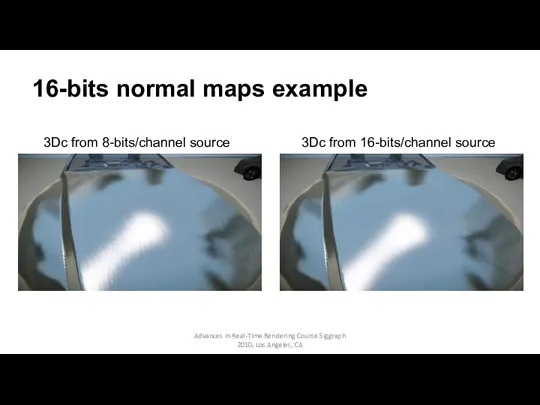

- 14. 16-bit normal maps example: 3Dc from 8-bits/channel source; 3Dc from 16-bits/channel source

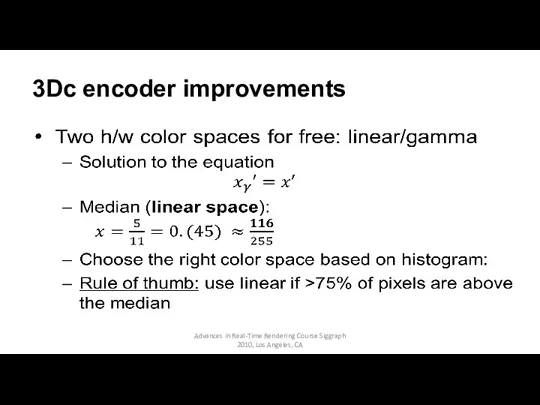

- 15. 3Dc encoder improvements

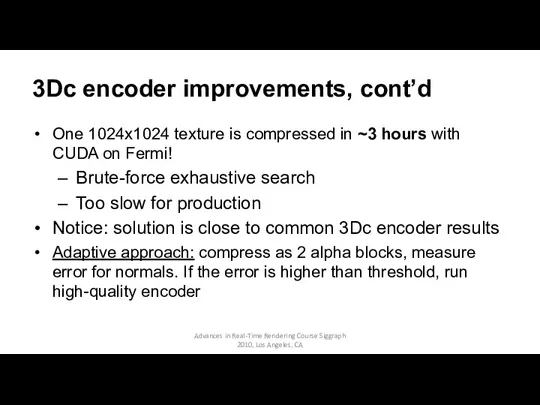

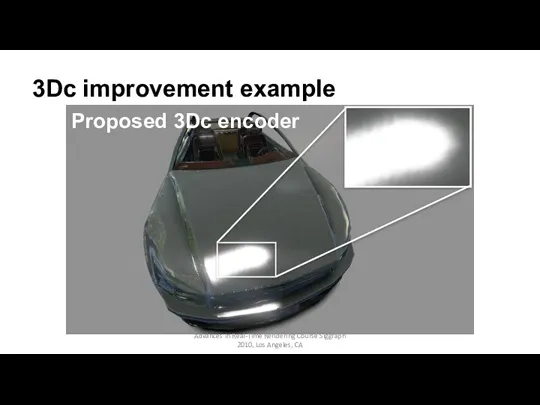

- 16. 3Dc encoder improvements, cont’d: One 1024x1024 texture is compressed in ~3 hours with CUDA on Fermi!

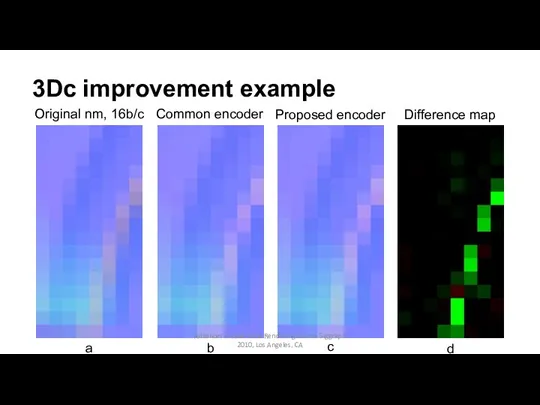

- 17. 3Dc improvement example: Original nm, 16b/c; Common encoder; Proposed encoder; Difference map

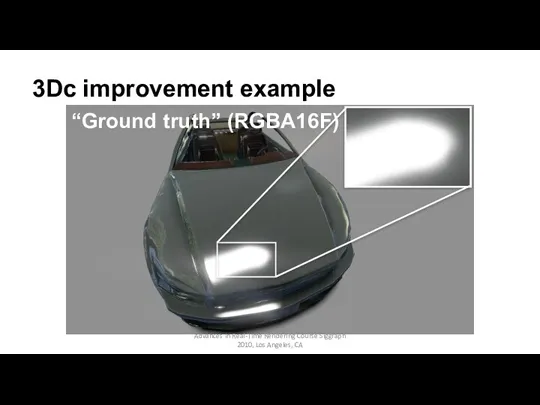

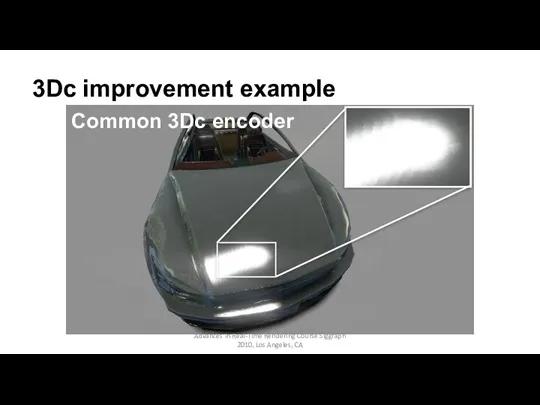

- 18. 3Dc improvement example: “Ground truth” (RGBA16F)

- 19. 3Dc improvement example: Common 3Dc encoder

- 20. 3Dc improvement example: Proposed 3Dc encoder

- 21. DIFFERENT IMPROVEMENTS

- 22. Occlusion culling: Use software z-buffer (aka coverage buffer); Downscale previous frame’s z buffer on consoles; Use
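As a rough illustration of downscaling a depth buffer into a coverage buffer, the sketch below keeps the farthest depth of each block so that the reduced buffer stays conservative. It assumes larger values mean farther away; the function names, the block-max rule, and the box test are assumptions for illustration, not details given on the slide.

```python
def downscale_depth(depth, factor):
    """Reduce a 2D depth buffer by `factor`, keeping the farthest depth per block."""
    h, w = len(depth), len(depth[0])
    return [[max(depth[y][x]
                 for y in range(by, min(by + factor, h))
                 for x in range(bx, min(bx + factor, w)))
             for bx in range(0, w, factor)]
            for by in range(0, h, factor)]


def is_occluded(coverage, x0, y0, x1, y1, nearest_depth):
    """A screen-space box is hidden only if it lies behind every texel it covers."""
    return all(nearest_depth > coverage[y][x]
               for y in range(y0, y1 + 1)
               for x in range(x0, x1 + 1))


# Hypothetical 4x4 depth buffer from the previous frame.
depth = [
    [0.2, 0.2, 0.9, 0.9],
    [0.2, 0.3, 0.9, 0.9],
    [0.5, 0.5, 0.5, 0.5],
    [0.5, 0.5, 0.5, 0.6],
]
coverage = downscale_depth(depth, 2)
print(coverage)
print(is_occluded(coverage, 0, 0, 0, 0, 0.4))  # box behind the near wall
print(is_occluded(coverage, 0, 0, 1, 0, 0.4))  # box also overlaps the far region
```

Taking the maximum per block can only make the test report fewer occluded objects, never more, which is the safe direction for culling.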

- 23. SSAO improvements: Encode depth as a 2-channel 16-bit value in [0;1]; Linear depth as a rational: depth=x+y/255
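The formula depth=x+y/255 can be read as a coarse channel plus a fine channel. The sketch below assumes two 8-bit channels (16 bits in total) and shows one way to split and rebuild a linear depth value; the helper names and the rounding choices are assumptions, not the engine's code.

```python
def encode_depth(depth):
    """Split linear depth in [0, 1] into two 8-bit channel values (x, y)."""
    scaled = depth * 255.0
    x = int(scaled)            # coarse part: whole 1/255 steps
    frac = scaled - x          # remainder inside one coarse step, in [0, 1)
    y = round(frac * 255.0)    # fine part, quantized to 8 bits
    return x, y


def decode_depth(x, y):
    """Rebuild depth as x + y/255, with both channels read back as [0, 1] values."""
    xn = x / 255.0
    yn = y / 255.0
    return xn + yn / 255.0


for d in (0.0, 0.123456, 0.5, 0.999, 1.0):
    x, y = encode_depth(d)
    print(d, (x, y), decode_depth(x, y))
```

With this split the reconstruction error stays within half of a fine step, that is 0.5 / (255 * 255).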

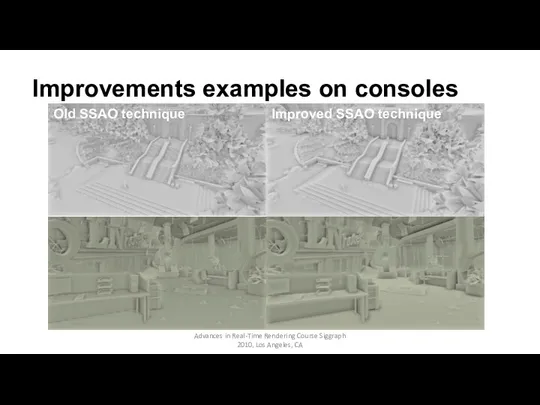

- 24. Improvements examples on consoles

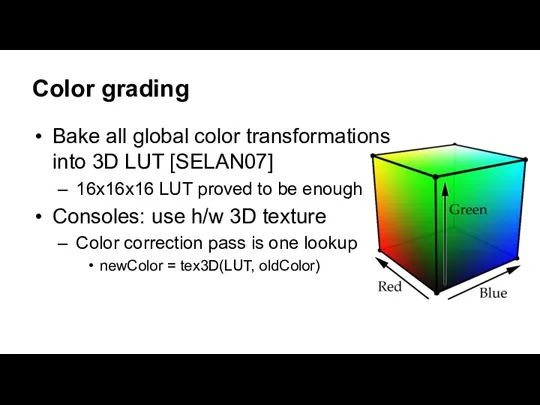

- 25. Color grading: Bake all global color transformations into 3D LUT [SELAN07]; 16x16x16 LUT proved to be
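To make the baking step concrete, here is a small sketch that evaluates a color transform on a 16x16x16 lattice and then applies it with a trilinear lookup, mimicking a filtered 3D texture fetch. The `grade` function is a hypothetical stand-in for whatever chain of global color operations is being baked; none of the names come from the talk.

```python
N = 16  # LUT resolution per axis, as on the slide


def bake_lut(transform, n=N):
    """Evaluate `transform` at every lattice point of an n x n x n RGB grid."""
    step = 1.0 / (n - 1)
    return [[[transform((r * step, g * step, b * step))
              for b in range(n)] for g in range(n)] for r in range(n)]


def lerp(a, b, t):
    """Component-wise linear interpolation between two colors."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def sample_lut(lut, color):
    """Trilinear lookup of `color` (components in [0, 1]) in the baked LUT."""
    n = len(lut)
    idx, frac = [], []
    for c in color:
        p = min(max(c, 0.0), 1.0) * (n - 1)
        i = min(int(p), n - 2)  # keep i + 1 inside the grid
        idx.append(i)
        frac.append(p - i)
    (r, g, b), (fr, fg, fb) = idx, frac
    # Interpolate along blue, then green, then red.
    c00 = lerp(lut[r][g][b], lut[r][g][b + 1], fb)
    c01 = lerp(lut[r][g + 1][b], lut[r][g + 1][b + 1], fb)
    c10 = lerp(lut[r + 1][g][b], lut[r + 1][g][b + 1], fb)
    c11 = lerp(lut[r + 1][g + 1][b], lut[r + 1][g + 1][b + 1], fb)
    return lerp(lerp(c00, c01, fg), lerp(c10, c11, fg), fr)


def grade(color):
    """Hypothetical global grade: brighten slightly, then desaturate a little."""
    r, g, b = (min(c * 1.1, 1.0) for c in color)
    luma = 0.2126 * r + 0.7152 * g + 0.0722 * b
    return tuple(c + (luma - c) * 0.2 for c in (r, g, b))


lut = bake_lut(grade)
pixel = (0.25, 0.5, 0.75)
print(sample_lut(lut, pixel), grade(pixel))
```

However many operations `grade` chains together, the per-pixel cost at run time is a single filtered fetch.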

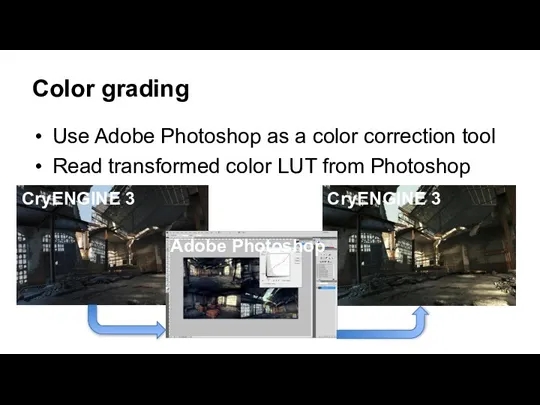

- 26. Color grading: Use Adobe Photoshop as a color correction tool; Read transformed color LUT from Photoshop

- 27. Color chart example for Photoshop

- 28. DEFERRED PIPELINE

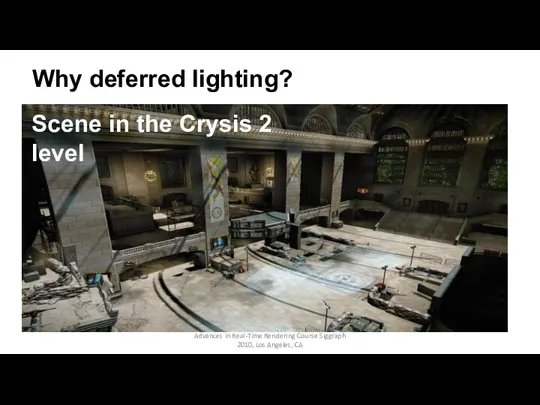

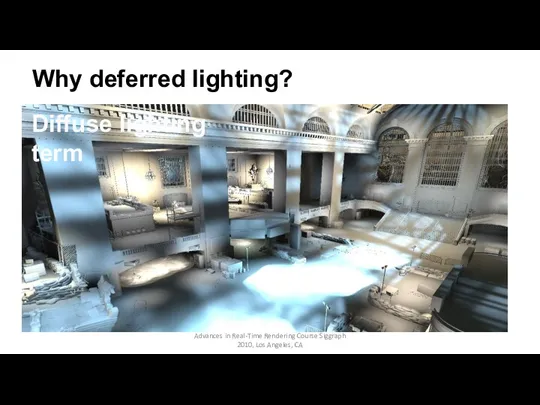

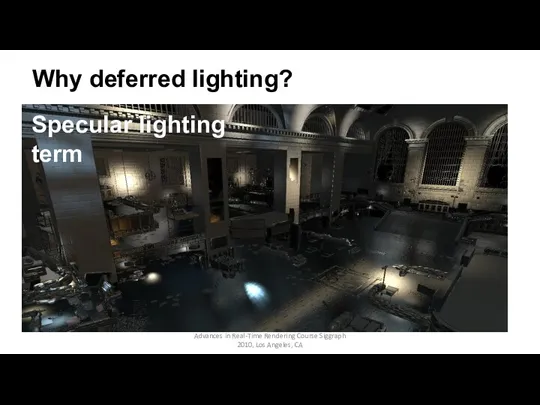

- 29. Why deferred lighting?

- 30. Why deferred lighting?

- 31. Why deferred lighting?

- 32. Introduction: Good decomposition of lighting; No lighting-geometry interdependency; Cons: Higher memory and bandwidth requirements

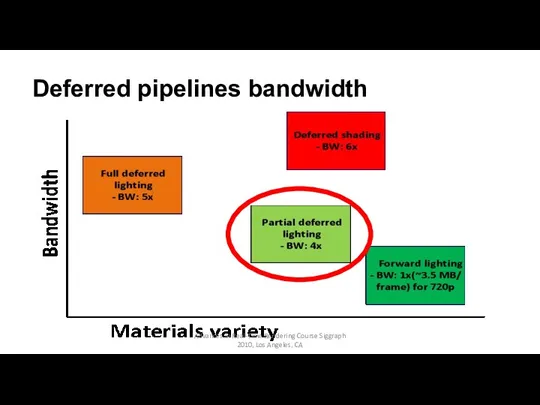

- 33. Deferred pipelines bandwidth

- 34. Major issues of deferred pipeline: No anti-aliasing; Existing multi-sampling techniques are too heavy for deferred pipeline

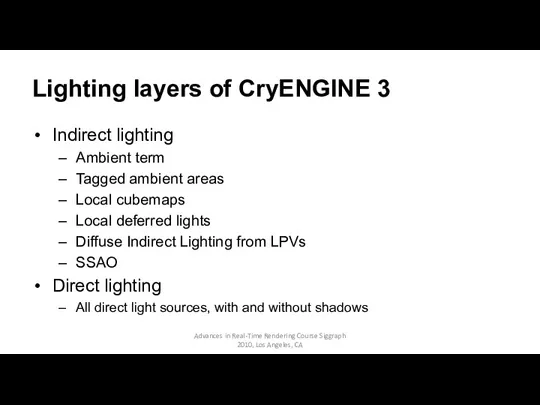

- 35. Lighting layers of CryENGINE 3: Indirect lighting; Ambient term; Tagged ambient areas; Local cubemaps; Local deferred

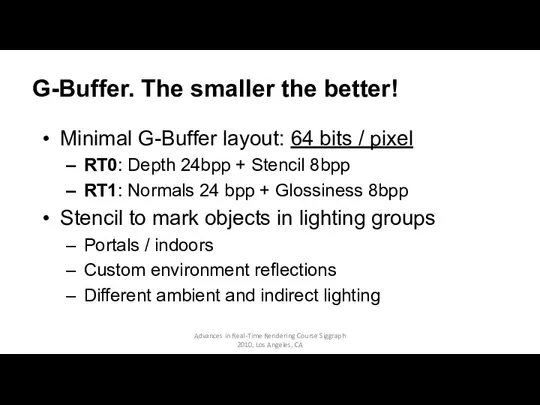

- 36. G-Buffer. The smaller the better! Minimal G-Buffer layout: 64 bits / pixel; RT0: Depth 24bpp +

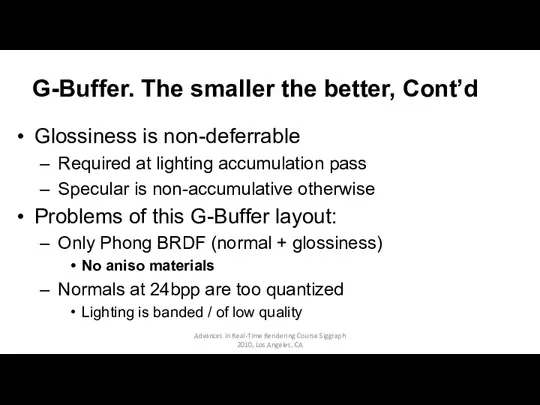

- 37. G-Buffer. The smaller the better, cont’d: Glossiness is non-deferrable; Required at lighting accumulation pass; Specular is

- 38. STORING NORMALS IN G-BUFFER

- 39. Normals precision for shading: Normals at 24bpp are too quantized, lighting is of a low quality

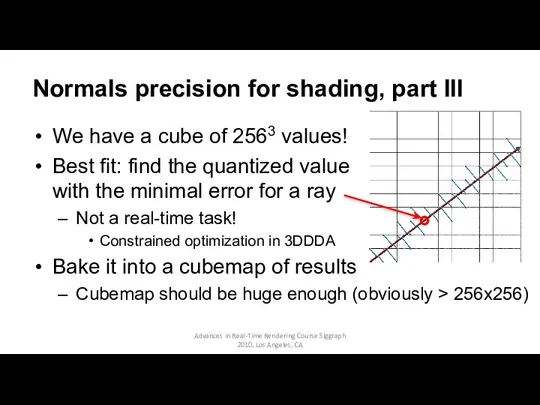

- 40. Normals precision for shading, part III: We have a cube of 256³ values! Best fit: find

- 41. Normals precision for shading, part III: Extract the most meaningful and unique part of this symmetric

- 42. Best fit for normals: Supports alpha blending; Best fit gets broken though. Usually not an issue
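The best-fit idea can be sketched as a brute-force search: instead of quantizing the unit-length normal, try many lengths along the same ray inside the 8-bit cube and keep the quantized point whose direction deviates least from the original. The search resolution, helper names, and the sample normal below are assumptions for illustration; the talk's own implementation uses a precomputed lookup rather than a per-normal search.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def to_bytes(v):
    """Map components from [-1, 1] to 8-bit values (scale and bias)."""
    return tuple(round((c * 0.5 + 0.5) * 255.0) for c in v)


def from_bytes(q):
    """Map 8-bit values back to [-1, 1] and renormalize, as a lighting pass would."""
    return normalize(tuple(b / 255.0 * 2.0 - 1.0 for b in q))


def angular_error(a, b):
    dot = max(-1.0, min(1.0, sum(x * y for x, y in zip(a, b))))
    return math.acos(dot)


def best_fit(normal, steps=256):
    """Search along the normal's ray for the length that quantizes best."""
    n = normalize(normal)
    longest = max(abs(c) for c in n)
    # Candidate lengths: the plain unit length, plus a sweep that ends where
    # the largest component reaches 1.0 (the surface of the cube).
    candidates = [1.0] + [(i / steps) / longest for i in range(1, steps + 1)]
    best_q, best_err = None, float("inf")
    for s in candidates:
        q = to_bytes(tuple(c * s for c in n))
        err = angular_error(n, from_bytes(q))
        if err < best_err:
            best_q, best_err = q, err
    return best_q, best_err


n = normalize((0.3, 0.5, 0.81))
plain_err = angular_error(n, from_bytes(to_bytes(n)))
q, fit_err = best_fit(n)
print(plain_err, fit_err, q)
```

Since the unit-length candidate is part of the search, the best-fit error can never exceed the error of plain normalized storage; decoding stays a simple renormalization.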

- 43. Storage techniques breakdown: Normalized normals: ~289880 cells out of 16777216, which is ~1.73%; Divided

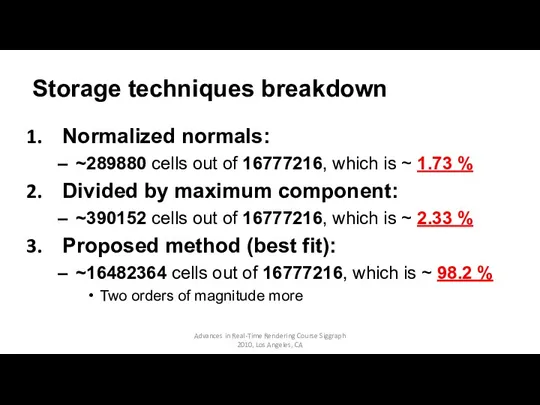

- 44. Normals precision in G-Buffer, example: Diffuse lighting with normalized normals in G-Buffer

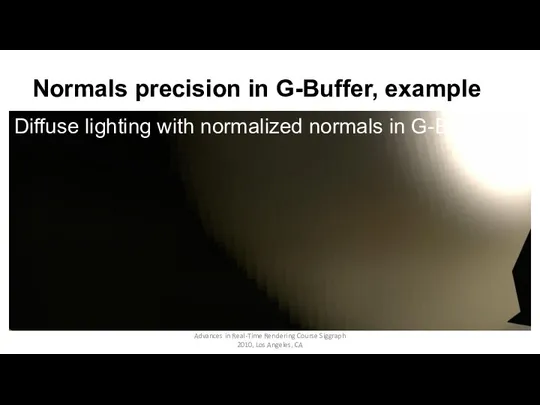

- 45. Normals precision in G-Buffer, example: Diffuse lighting with best-fit normals in G-Buffer

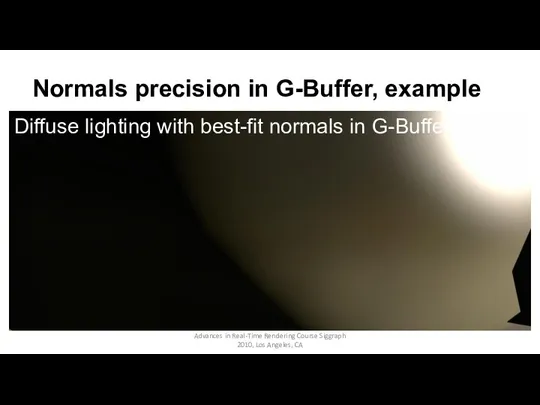

- 46. Normals precision in G-Buffer, example: Lighting with normalized normals in G-Buffer

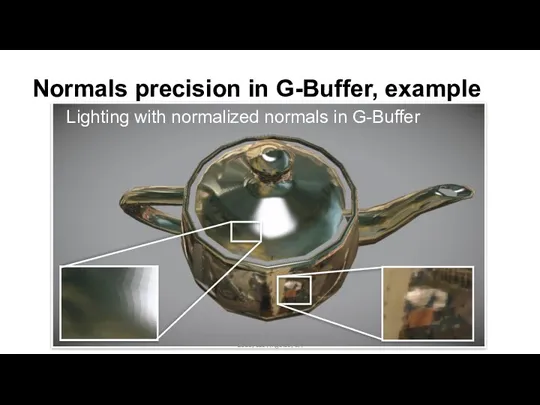

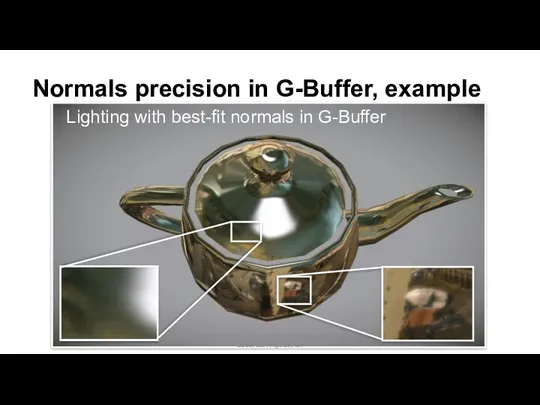

- 47. Normals precision in G-Buffer, example: Lighting with best-fit normals in G-Buffer

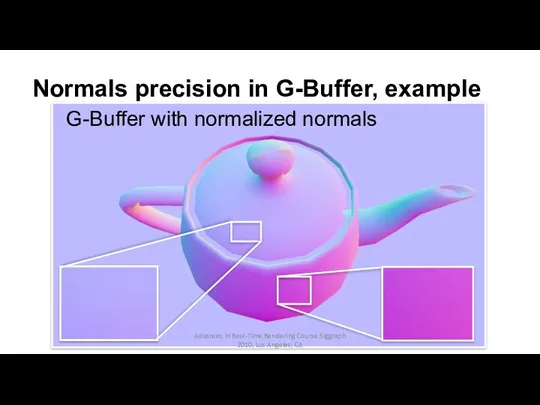

- 48. Normals precision in G-Buffer, example: G-Buffer with normalized normals

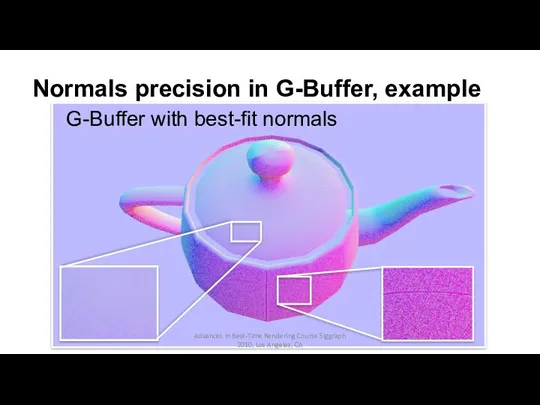

- 49. Normals precision in G-Buffer, example: G-Buffer with best-fit normals

- 50. PHYSICALLY-BASED BRDFS

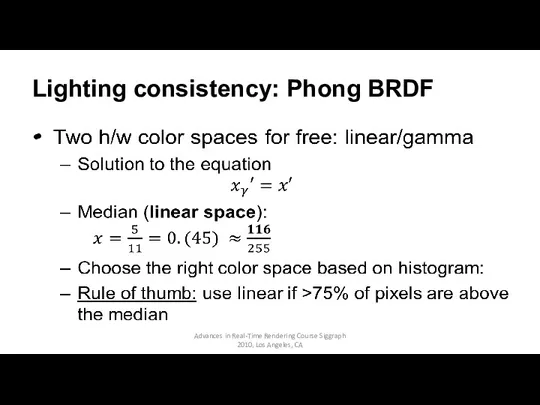

- 51. Lighting consistency: Phong BRDF

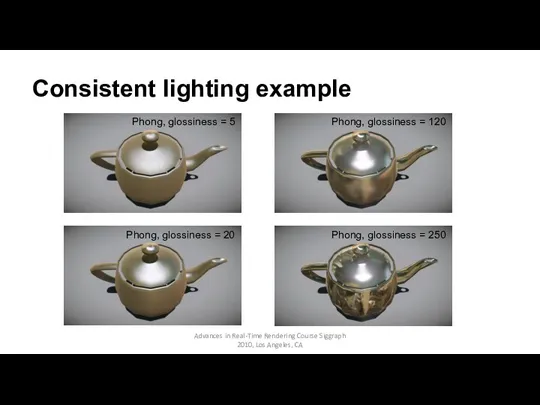

- 52. Consistent lighting example

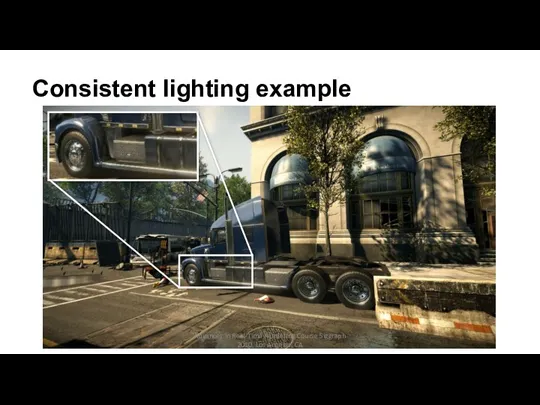

- 53. Consistent lighting example

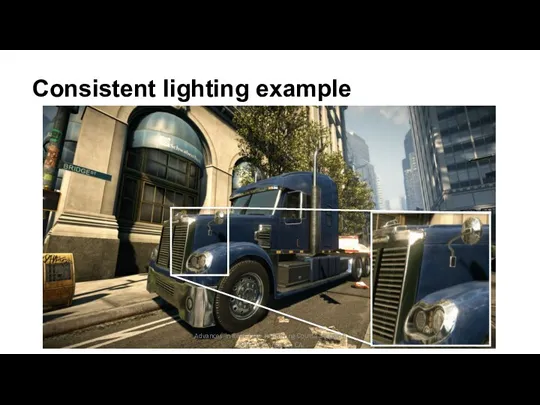

- 54. Consistent lighting example

- 55. HDR… VS BANDWIDTH VS PRECISION

- 56. HDR on consoles: Can we achieve bandwidth the same as for LDR? PS3: RGBK (aka RGBM)
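For readers unfamiliar with RGBM, the sketch below shows the general shape of the encoding: an HDR color is stored as an 8-bit RGB triple plus a shared 8-bit multiplier, so it fits the same 32 bits per pixel as an LDR target. The `MAX_RANGE` constant and the rounding choices are assumptions for illustration and may differ from what the engine uses.

```python
import math

MAX_RANGE = 8.0  # assumed HDR ceiling covered by the multiplier; a tunable choice


def encode_rgbm(rgb, max_range=MAX_RANGE):
    """Pack an HDR color into four 8-bit values: RGB plus a shared multiplier M."""
    r, g, b = (min(max(c, 0.0), max_range) for c in rgb)
    # Shared multiplier, rounded up to the next 8-bit step so RGB never exceeds 1.
    m = max(r, g, b, 1e-6) / max_range
    m_byte = min(255, max(1, math.ceil(m * 255.0)))
    m = m_byte / 255.0
    scale = 1.0 / (m * max_range)
    return tuple(round(min(c * scale, 1.0) * 255.0) for c in (r, g, b)) + (m_byte,)


def decode_rgbm(rgbm, max_range=MAX_RANGE):
    """Unpack: scale the stored RGB by the multiplier and the range."""
    r, g, b, m = (v / 255.0 for v in rgbm)
    return tuple(c * m * max_range for c in (r, g, b))


hdr = (4.0, 1.0, 0.25)
packed = encode_rgbm(hdr)
print(packed, decode_rgbm(packed))
```

The quantization step scales with the multiplier, so bright pixels get coarser steps than dark ones, which is the precision trade-off the following slides address with dynamic range scaling.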

- 57. HDR on consoles: dynamic range. Use dynamic range scaling to improve precision; Use average luminance to

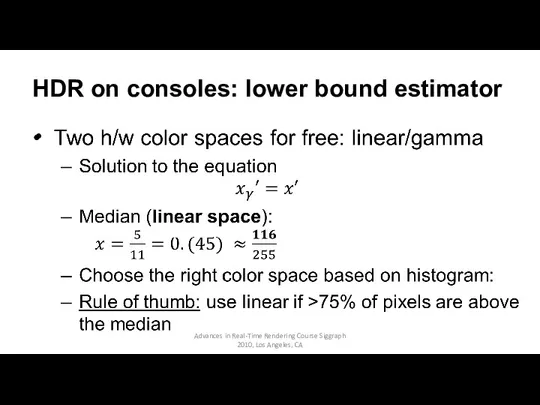

- 58. HDR on consoles: lower bound estimator

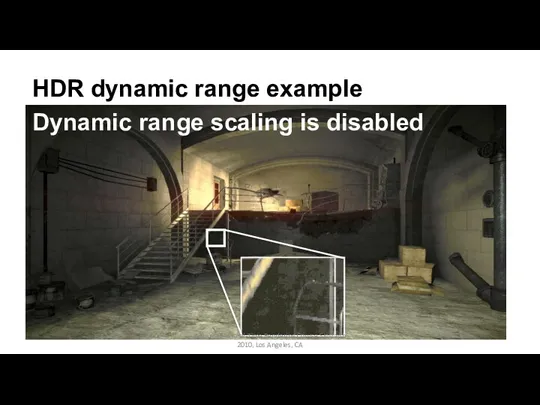

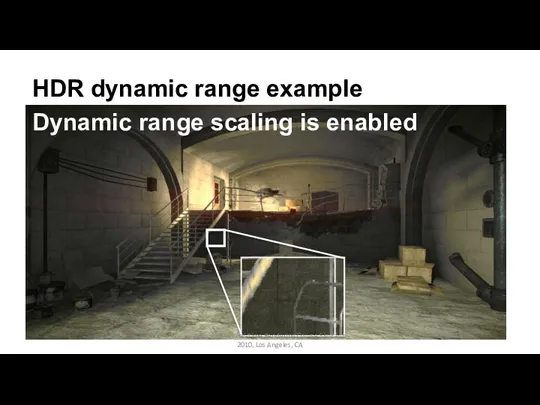

- 59. HDR dynamic range example: Dynamic range scaling is disabled

- 60. HDR dynamic range example: Dynamic range scaling is enabled

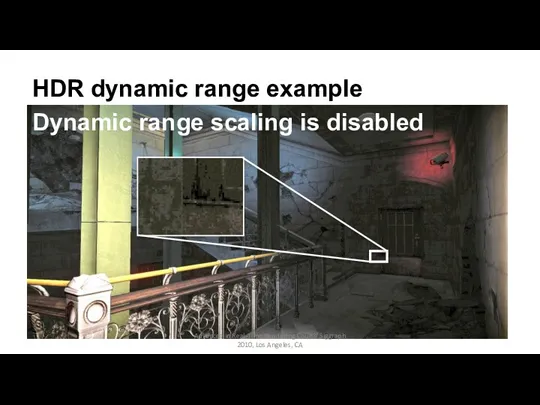

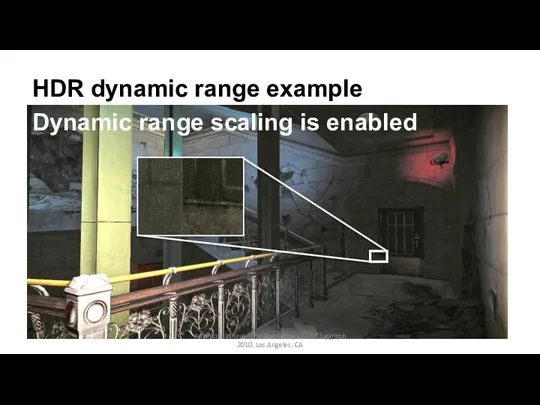

- 61. HDR dynamic range example: Dynamic range scaling is disabled

- 62. HDR dynamic range example: Dynamic range scaling is enabled

- 63. LIGHTING TOOLS: CLIP VOLUMES

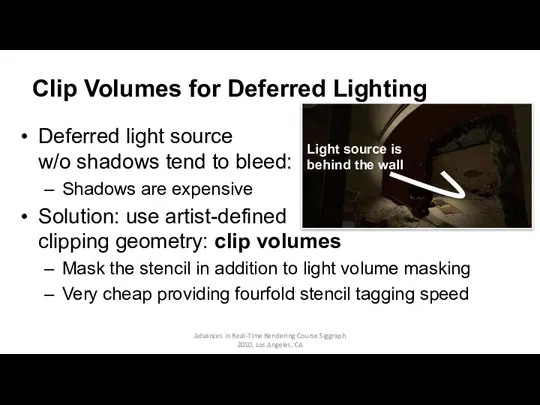

- 64. Clip Volumes for Deferred Lighting: Deferred light sources w/o shadows tend to bleed; Shadows are expensive

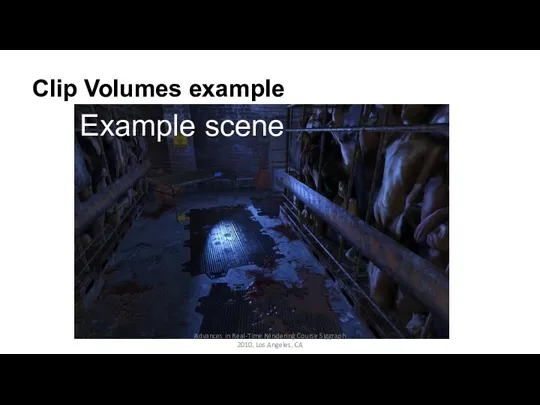

- 65. Clip Volumes example: Example scene

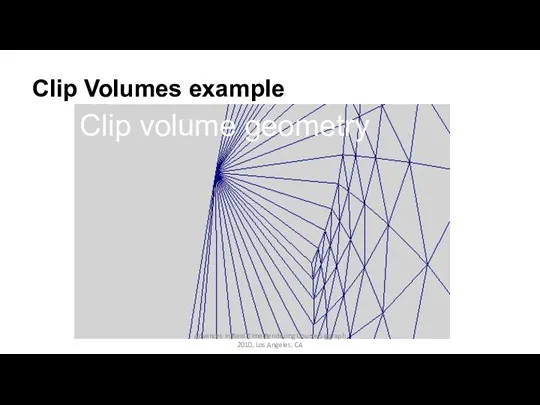

- 66. Clip Volumes example: Clip volume geometry

- 67. Clip Volumes example: Stencil tagging

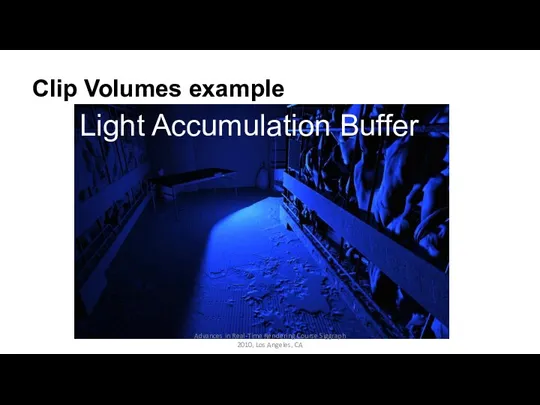

- 68. Clip Volumes example: Light Accumulation Buffer

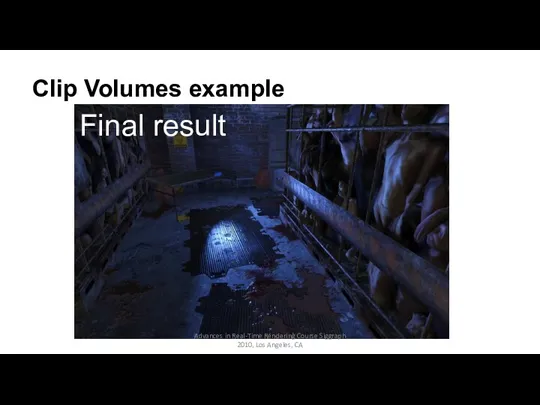

- 69. Clip Volumes example: Final result

- 70. DEFERRED LIGHTING AND ANISOTROPIC MATERIALS

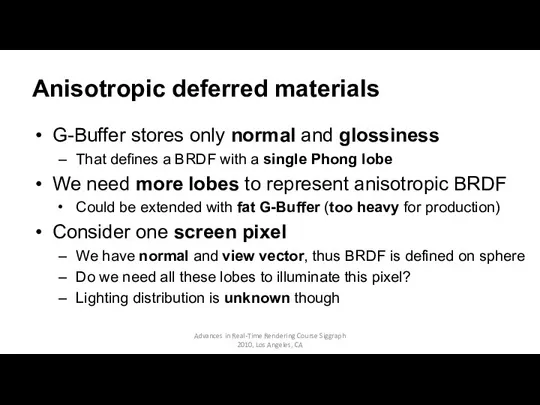

- 71. Anisotropic deferred materials: G-Buffer stores only normal and glossiness; That defines a BRDF with a single

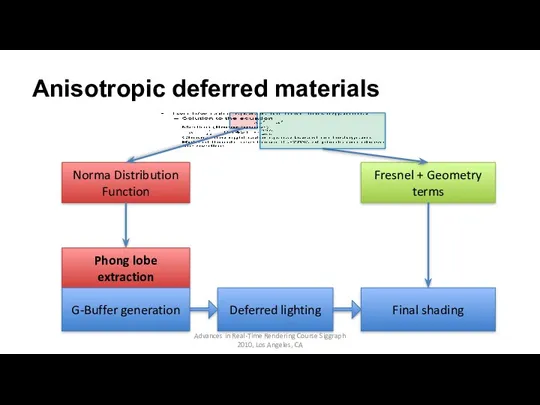

- 72. Anisotropic deferred materials, part I: Idea: Extract the major Phong lobe from NDF; Use microfacet BRDF

- 73. Anisotropic deferred materials, part II: Approximate lighting distribution with SG per object; Merge SG functions if

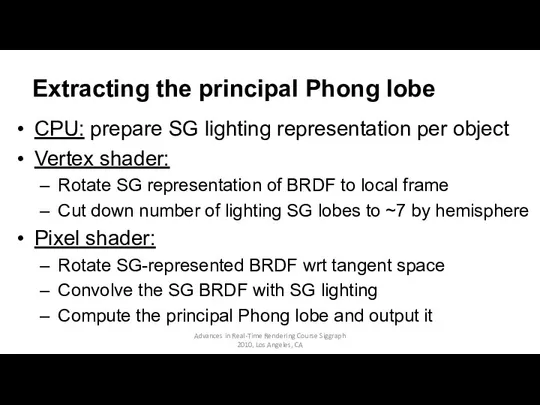

- 74. Extracting the principal Phong lobe: CPU: prepare SG lighting representation per object; Vertex shader: Rotate SG

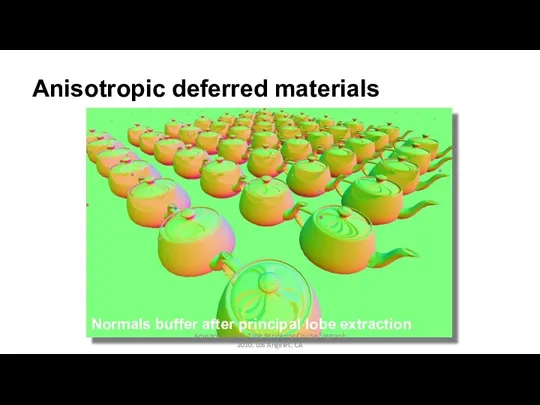

- 75. Anisotropic deferred materials: Normal Distribution Function; Fresnel + Geometry terms; Deferred lighting; Final shading; Phong lobe

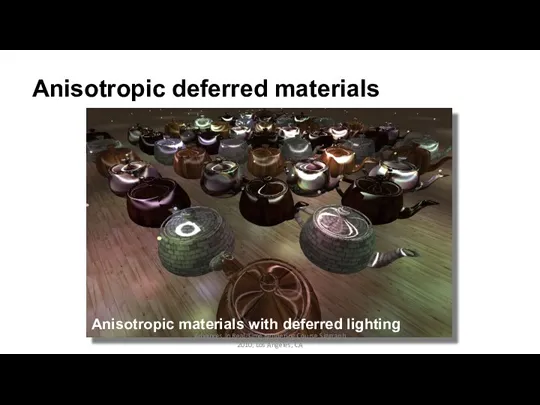

- 76. Anisotropic deferred materials: Anisotropic materials with

- 77. Anisotropic deferred materials: Normals buffer after

- 78. Anisotropic deferred materials: why? Cons: Imprecise lobe extraction and specular reflections; But: see [RTDKS10] for more

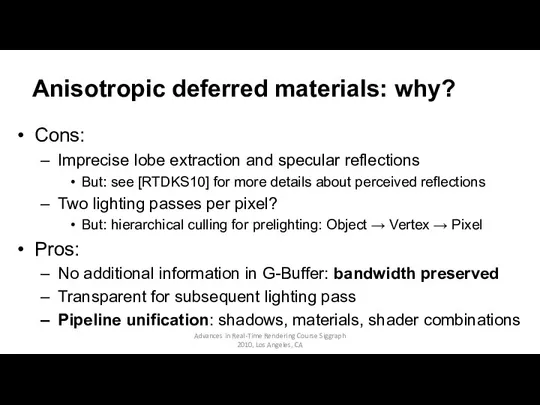

- 79. DEFERRED LIGHTING AND ANTI-ALIASING

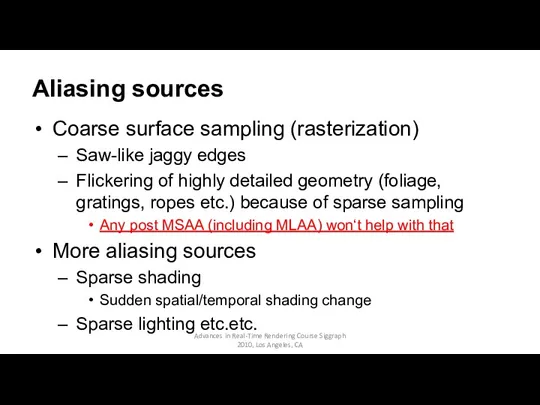

- 80. Aliasing sources: Coarse surface sampling (rasterization); Saw-like jaggy edges; Flickering of highly detailed geometry (foliage, gratings,

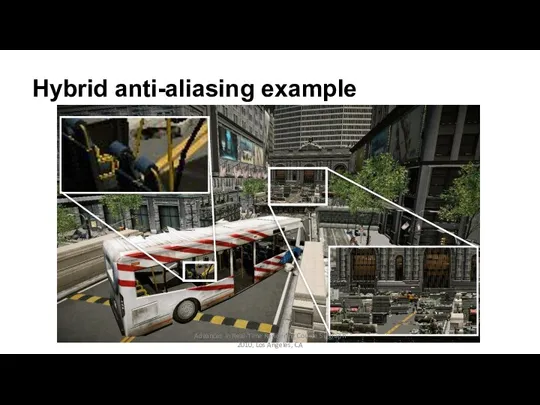

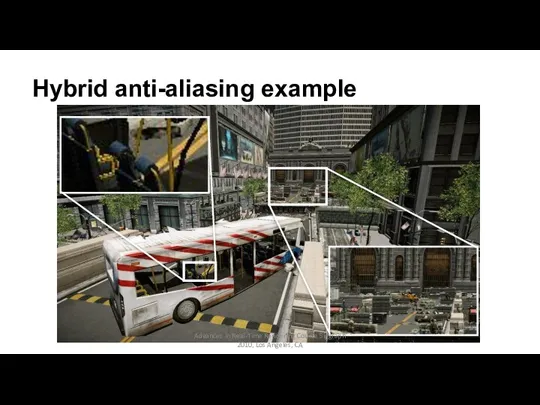

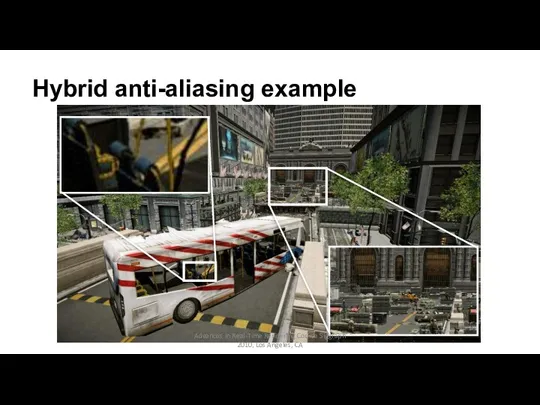

- 81. Hybrid anti-aliasing solution: Post-process AA for near objects; Doesn't supersample; Works on edges; Temporal AA for

- 82. Post-process Anti-Aliasing

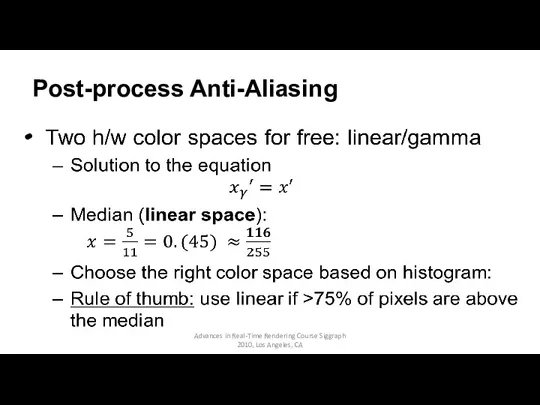

- 83. Temporal Anti-Aliasing: Use temporal reprojection with cache miss approach; Store previous frame and depth buffer; Reproject

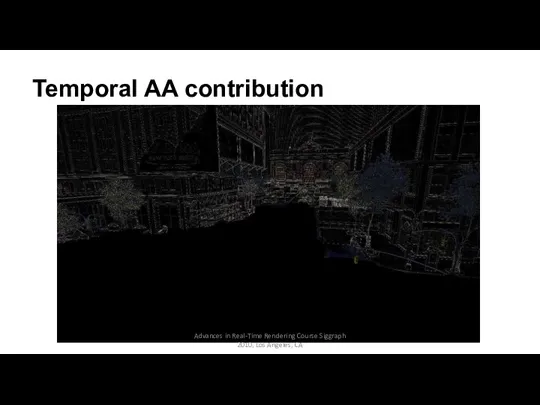

- 84. Hybrid anti-aliasing solution: Separation by distance guarantees small changes of view vector for distant objects; Reduces

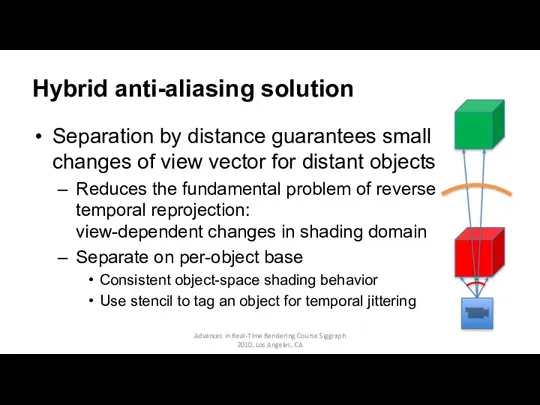

- 85. Hybrid anti-aliasing example

- 86. Hybrid anti-aliasing example

- 87. Hybrid anti-aliasing example

- 88. Temporal AA contribution

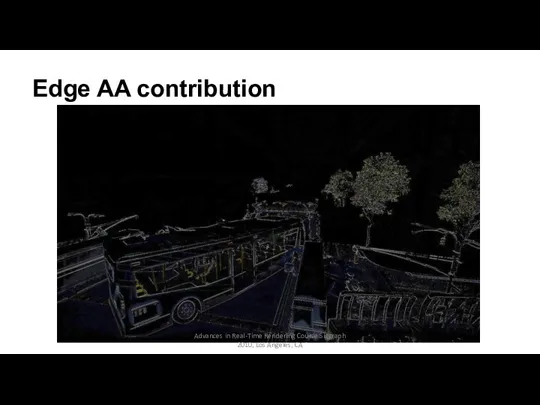

- 89. Edge AA contribution

- 90. Hybrid anti-aliasing video

- 91. Conclusion: Texture compression improvements for consoles; Deferred pipeline: some major issues successfully resolved; Bandwidth and precision

- 92. Acknowledgements: Vaclav Kyba from R&D for implementation of temporal AA; Tiago Sousa, Sergey Sokov and the

- 93. QUESTIONS? Thank you for your attention

- 94. APPENDIX A: BEST FIT FOR NORMALS

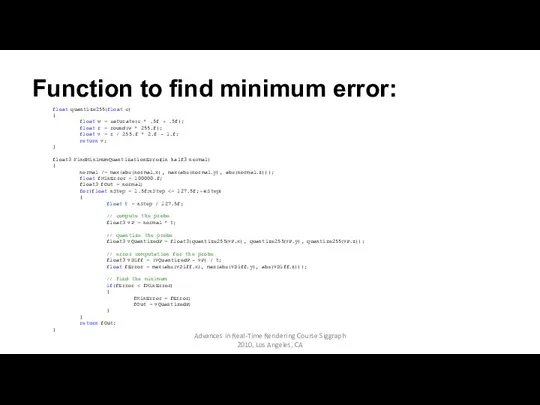

- 95. Function to find minimum error: float quantize255(float c) { float w = saturate(c * .5f +

- 96. Cubemap produced with this function

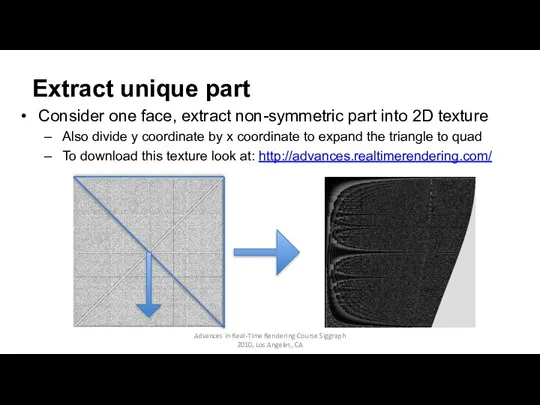

- 97. Consider one face, extract non-symmetric part into 2D texture; Also divide y coordinate by x coordinate

- 98. Function to fetch 2D texture at G-Buffer pass: void CompressUnsignedNormalToNormalsBuffer(inout half4 vNormal) { // renormalize (needed

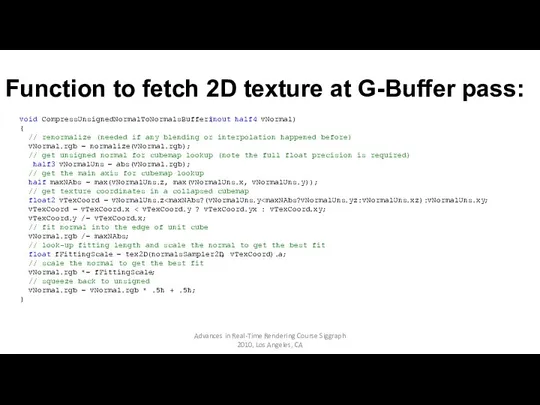

- 100. Скачать презентацию

![SSAO improvements Encode depth as 2 channel 16-bits value [0;1]](/_ipx/f_webp&q_80&fit_contain&s_1440x1080/imagesDir/jpg/203117/slide-22.jpg)

Объектно-ориентированное программирование на алгоритмическом языке С++

Объектно-ориентированное программирование на алгоритмическом языке С++ Представление числовой информации с помощью систем счисления

Представление числовой информации с помощью систем счисления Подготовка и проведение пресс-конференции

Подготовка и проведение пресс-конференции Планирование выполнения процессов в Linux

Планирование выполнения процессов в Linux Программа курса “Введение в тестирование ПО”. Динамическое тестирование

Программа курса “Введение в тестирование ПО”. Динамическое тестирование Электрондық құжат айналымы

Электрондық құжат айналымы Космічний. Комікси

Космічний. Комікси Реклама в интернете

Реклама в интернете Анализ многосвязных динамических систем

Анализ многосвязных динамических систем Программы для работы с видео

Программы для работы с видео Алгоритмы с ветвлениями. 6 класс

Алгоритмы с ветвлениями. 6 класс Сетевые модели OSI и IEEE Project 802

Сетевые модели OSI и IEEE Project 802 VR/Ar-технологии. Сейчас и перспективы

VR/Ar-технологии. Сейчас и перспективы СУБД MySQL PHP. Лекція №7

СУБД MySQL PHP. Лекція №7 Методические аспекты использования интерактивной системы голосования и тестирования Votum в дошкольных учреждениях

Методические аспекты использования интерактивной системы голосования и тестирования Votum в дошкольных учреждениях Тексты в компьютерной памяти

Тексты в компьютерной памяти Операционные системы. Файловые системы (часть 1)

Операционные системы. Файловые системы (часть 1) Photoshop. Лабораторная работа №1

Photoshop. Лабораторная работа №1 2D Pipe Junction. Introduction to ANSYS ICEM CFD

2D Pipe Junction. Introduction to ANSYS ICEM CFD Интерактивная компьютерная графика. Системы координат

Интерактивная компьютерная графика. Системы координат Киберспорт. Появление игр

Киберспорт. Появление игр Тема 1. Введение в теорию баз данных

Тема 1. Введение в теорию баз данных Работа со списками в Excel

Работа со списками в Excel История развития ЭВМ

История развития ЭВМ Программная инженерия. Часть 3

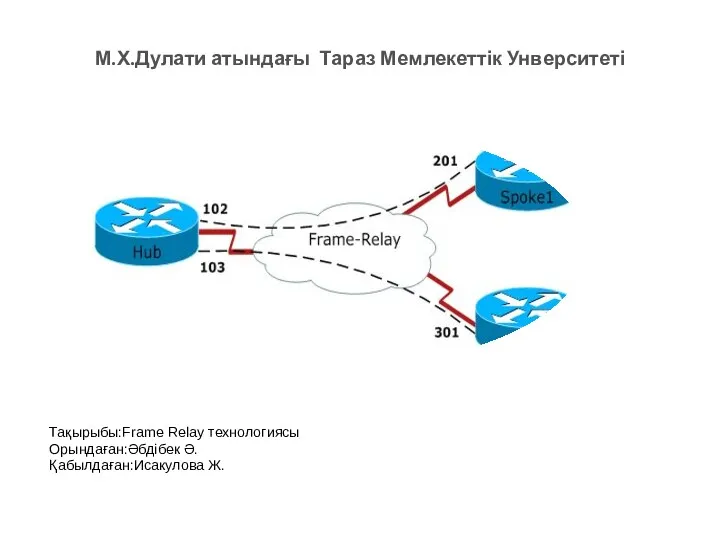

Программная инженерия. Часть 3 Frame Relay технологиясы

Frame Relay технологиясы Сортировка

Сортировка Обеспечение безопасности информации на некоторых уязвимых направлениях деятельности предприятия

Обеспечение безопасности информации на некоторых уязвимых направлениях деятельности предприятия