Contents

- 2. Performance and Energy Efficiency: Applications today are data-intensive

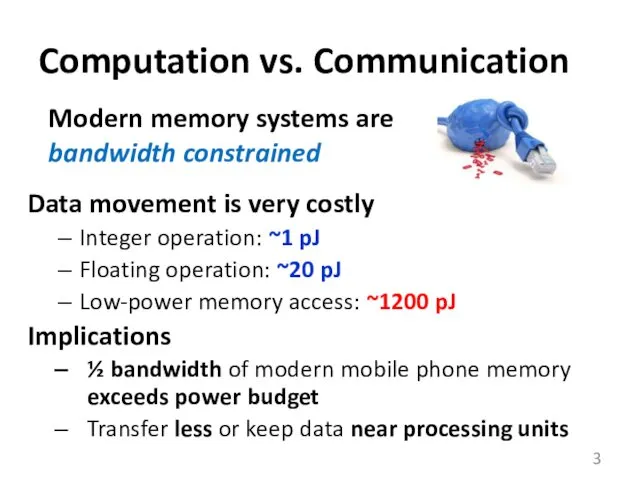

- 3. Computation vs. Communication: Data movement is very costly. Integer operation: ~1 pJ; floating-point operation: ~20 pJ

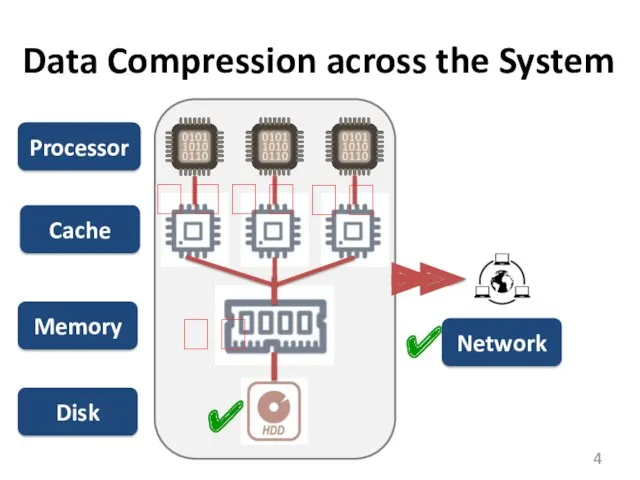

- 4. Data Compression across the System: Processor, Cache, Memory, Disk, Network (✔ ✔ ? ? ? ?)

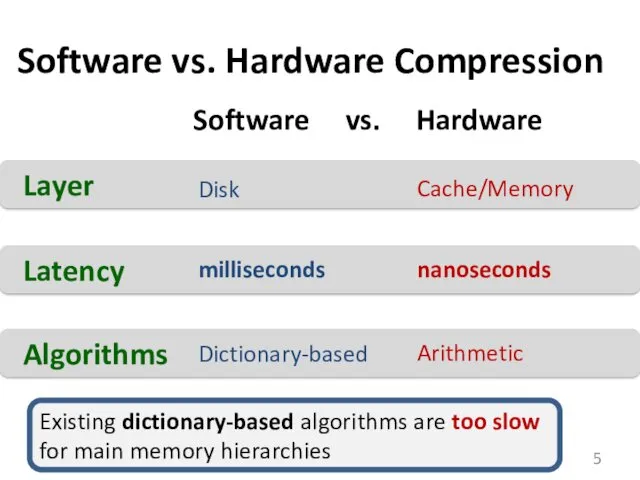

- 5. Software vs. Hardware Compression
  - Layer: Disk vs. Cache/Memory
  - Latency: milliseconds vs. nanoseconds
  - Software vs. Hardware
  - Algorithms: Dictionary-based vs. Arithmetic

- 6. Key Challenges for Compression in Memory Hierarchy: Fast Access Latency; Practical Implementation and Low Cost; High

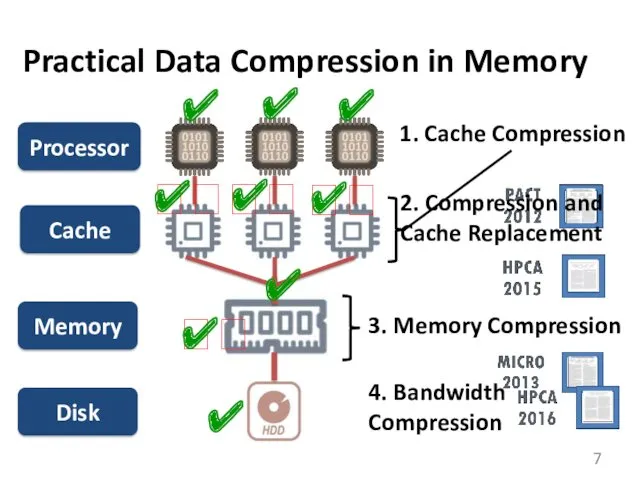

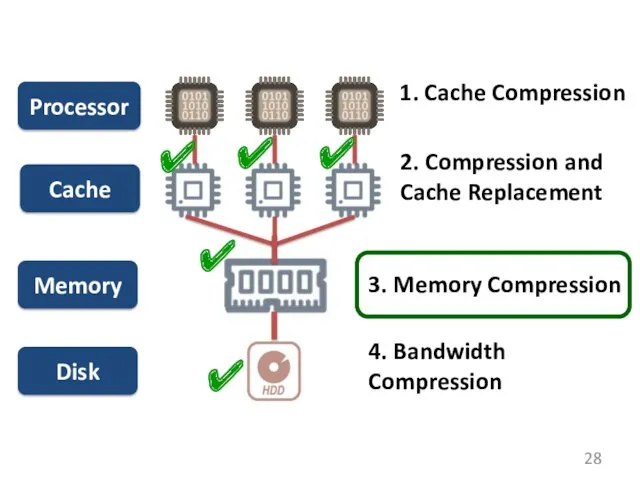

- 7. Practical Data Compression in Memory Processor Cache Memory Disk ✔ ? ? ? ? 1. Cache

- 8. 1. Cache Compression

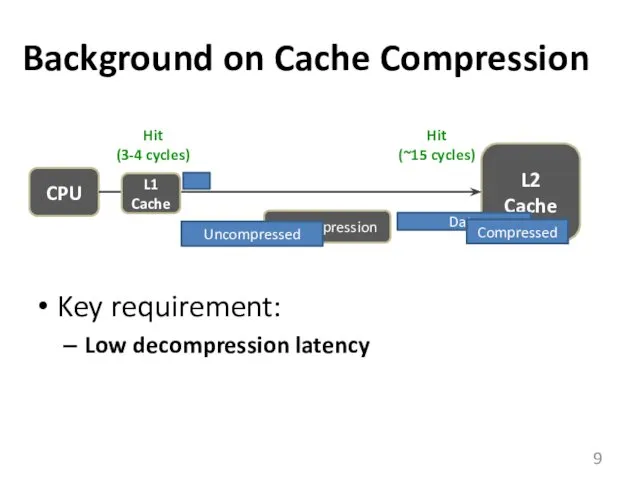

- 9. Background on Cache Compression. Key requirement: low decompression latency. (Diagram labels: CPU, L2 Cache, Data, Compressed, Decompression, Uncompressed)

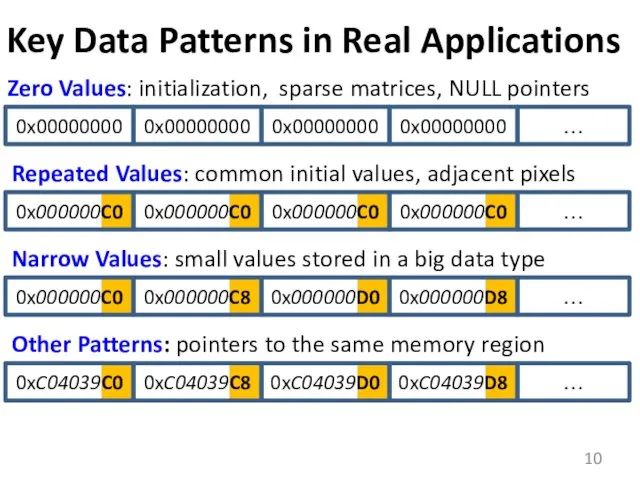

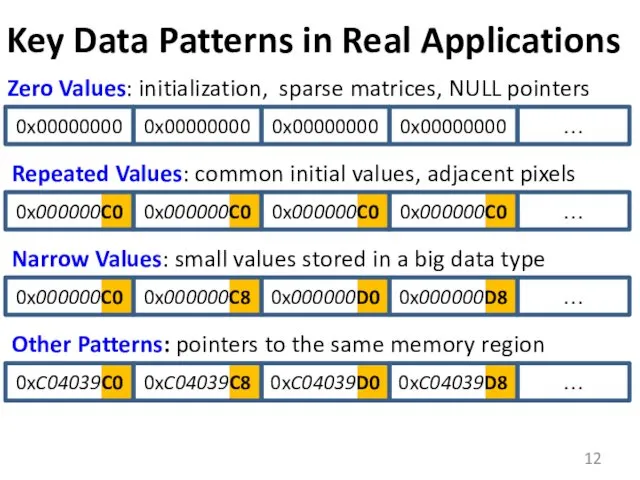

- 10. Key Data Patterns in Real Applications: `0x00000000 0x00000000 0x00000000 0x00000000 …`; `0x000000C0 0x000000C0 0x000000C0 0x000000C0 …`

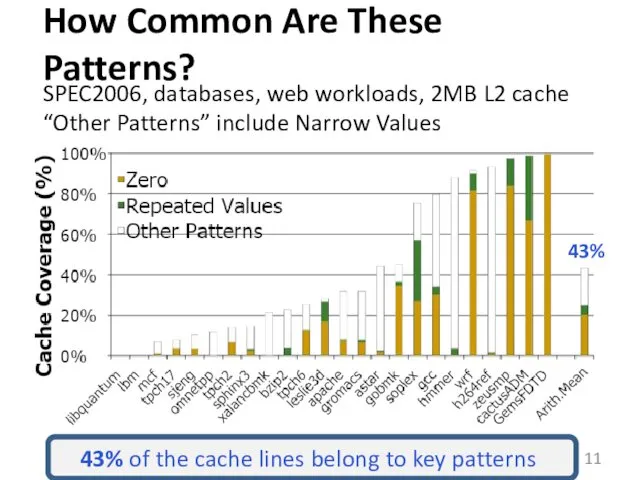
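The two patterns shown on this slide (all-zero words and one word repeated across the line) are cheap to detect. The sketch below is only an illustration: the function name `classify_line`, the 4-byte word size, and the 8-word (32-byte) line are assumptions made for the example, not details taken from the deck.

```python
import struct


def classify_line(line: bytes, word_size: int = 4) -> str:
    """Classify a cache line as 'zeros', 'repeated', or 'other'.

    Hypothetical helper: the word size and the labels are assumptions.
    """
    if len(line) % word_size != 0:
        raise ValueError("line length must be a multiple of word_size")
    words = [line[i:i + word_size] for i in range(0, len(line), word_size)]
    if all(w == bytes(word_size) for w in words):
        return "zeros"      # every word is 0x00000000
    if all(w == words[0] for w in words):
        return "repeated"   # every word equals the first word
    return "other"


# Eight little-endian 32-bit words per line (an assumed 32-byte line).
zero_line = struct.pack("<8I", *([0x00000000] * 8))
repeated_line = struct.pack("<8I", *([0x000000C0] * 8))
mixed_line = struct.pack("<8I", *range(8))

print(classify_line(zero_line), classify_line(repeated_line), classify_line(mixed_line))
```

A line in either of the first two classes can be represented by a single word plus a tag, which is why these patterns are attractive for compression.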

- 11. How Common Are These Patterns? SPEC2006, databases, web workloads, 2MB L2 cache “Other Patterns” include Narrow

- 12. Key Data Patterns in Real Applications: `0x00000000 0x00000000 0x00000000 0x00000000 …`; `0x000000C0 0x000000C0 0x000000C0 0x000000C0 …`

- 13. Key Data Patterns in Real Applications Low Dynamic Range: Differences between values are significantly smaller than

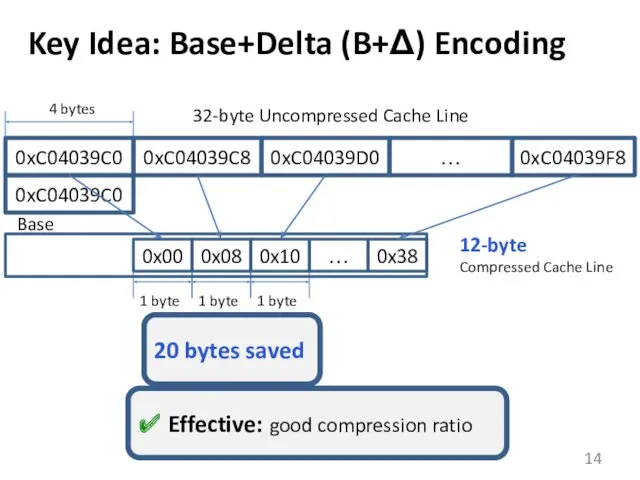

- 14. Key Idea: Base+Delta (B+Δ) Encoding. 32-byte uncompressed cache line: `0xC04039C0 0xC04039C8 0xC04039D0 … 0xC04039F8` (4 bytes)

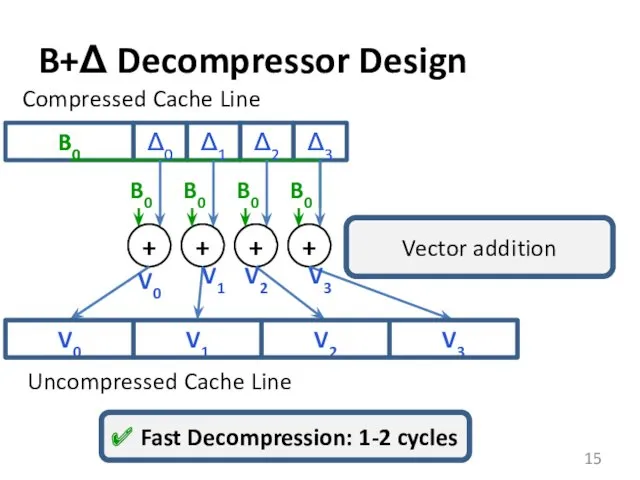
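The Base+Delta idea can be sketched in a few lines: keep the first value as the base and store every value as a small difference from it. This is a minimal illustration, not the deck's implementation. The name `bdelta_compress`, the 1-byte signed delta, and the stride of 8 used to fill in the values the slide elides are all assumptions.

```python
def bdelta_compress(values, delta_size=1):
    """Return (base, deltas) if every delta fits in delta_size signed bytes, else None.

    Hypothetical helper: the base is the first value in the line.
    """
    base = values[0]
    limit = 1 << (8 * delta_size - 1)       # 128 for a 1-byte signed delta
    deltas = [v - base for v in values]
    if all(-limit <= d < limit for d in deltas):
        return base, deltas
    return None                              # not compressible with this delta size


# Eight 4-byte values; the stride of 8 between them is assumed.
line = [0xC04039C0 + 8 * i for i in range(8)]
compressed = bdelta_compress(line)

# Size under these assumptions: one 4-byte base plus eight 1-byte deltas.
compressed_size = 4 + len(line) * 1
print(compressed, compressed_size)
```

Under these assumptions the 32-byte line shrinks to 12 bytes, because the values differ only in their low-order bits.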

- 15. B+Δ Decompressor Design. Compressed cache line: B0, Δ0, Δ1, Δ2, Δ3; adders (+) produce V0, V1, V2, V3

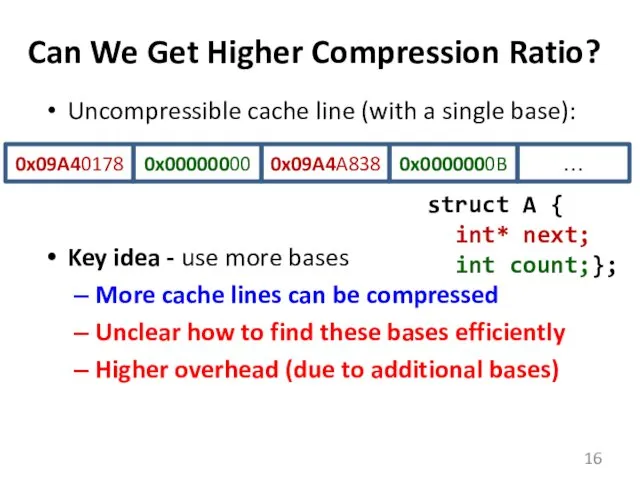
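Decompression as drawn on this slide is one addition per value, V_i = B0 + Δ_i. The sketch below mirrors that; `bdelta_decompress` and the 4-byte wrap-around mask are assumptions for illustration.

```python
def bdelta_decompress(base, deltas, value_size=4):
    """Rebuild the original values: V_i = base + delta_i, wrapped to value_size bytes.

    Hypothetical helper; each addition is independent of the others.
    """
    mask = (1 << (8 * value_size)) - 1
    return [(base + d) & mask for d in deltas]


values = bdelta_decompress(0xC04039C0, [0, 8, 16, 24])
print([hex(v) for v in values])
```

Because no addition depends on another, hardware can perform them all in parallel, which is consistent with the low decompression latency the deck asks for.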

- 16. Can We Get Higher Compression Ratio? Uncompressible cache line (with a single base): Key idea -

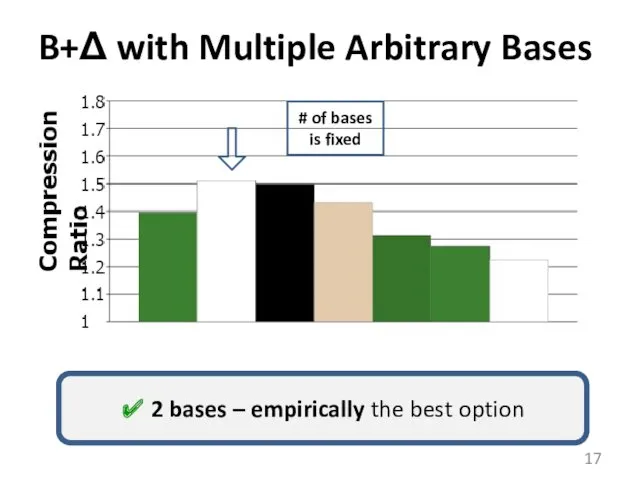

- 17. B+Δ with Multiple Arbitrary Bases: ✔ 2 bases are empirically the best option (chart axis: # of bases)

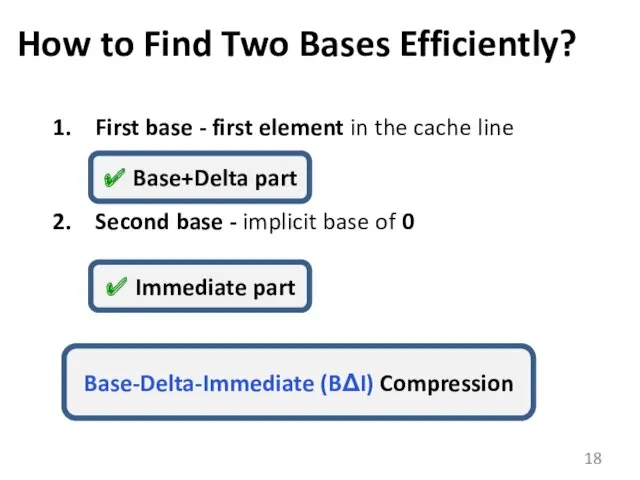

- 18. How to Find Two Bases Efficiently? First base - first element in the cache line Second

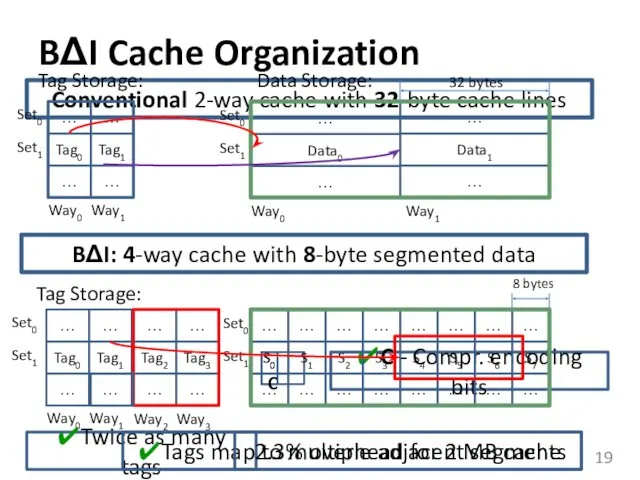

- 19. BΔI Cache Organization. Conventional 2-way cache with 32-byte cache lines: Tag0 Tag1 … … … …

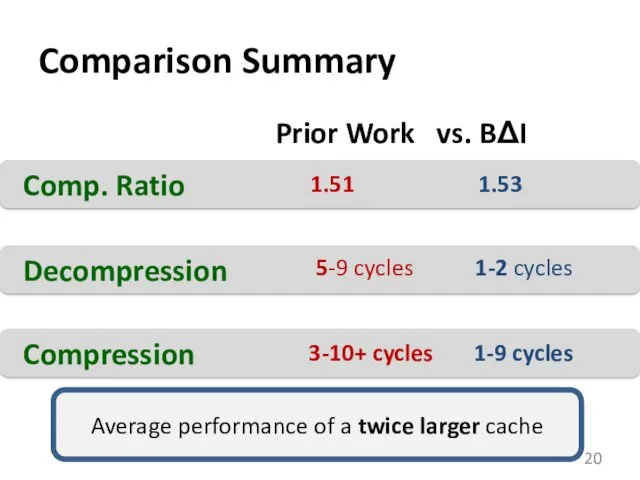

- 20. Comparison Summary: Comp. Ratio 1.53 vs. 1.51; Decompression 1-2 cycles vs. 5-9 cycles; Compression 1-9 cycles vs. 3-10+ cycles

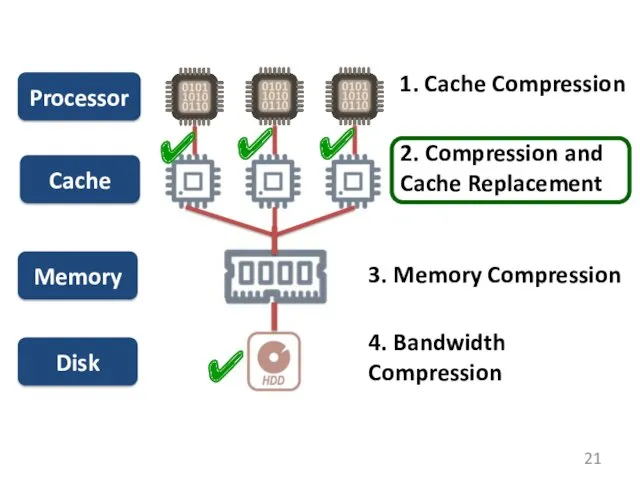

- 21. Processor Cache Memory Disk ✔ 1. Cache Compression 2. Compression and Cache Replacement 3. Memory Compression

- 22. 2. Compression and Cache Replacement

- 23. Cache Management Background: Not only about size. Cache management policies are important: insertion, promotion and eviction

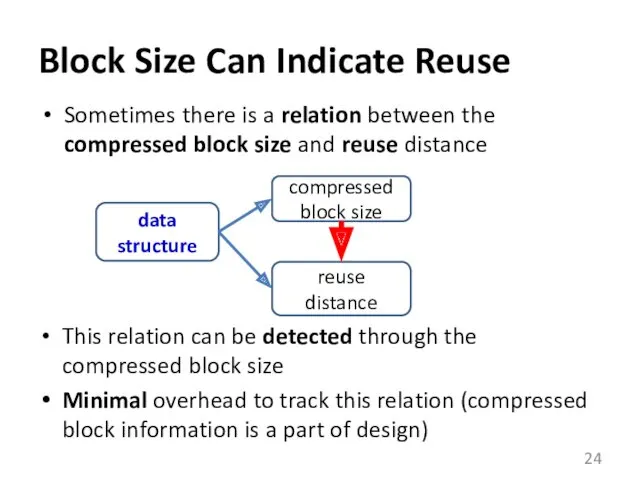

- 24. Block Size Can Indicate Reuse Sometimes there is a relation between the compressed block size and

- 25. Code Example to Support Intuition: `int A[N]; // small indices: compressible` `double B[16]; // FP coefficients:`

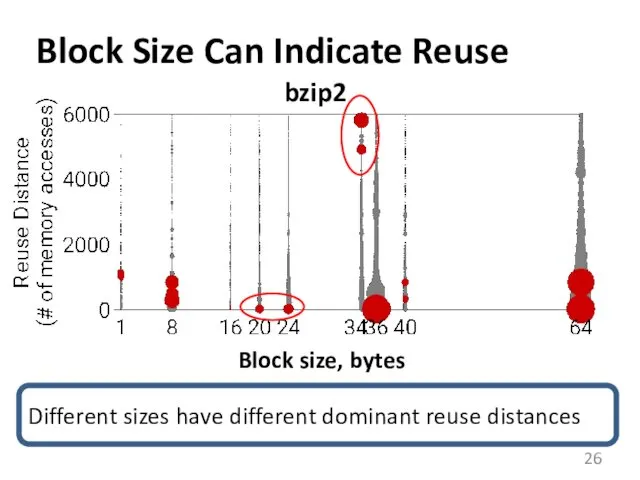

- 26. Block Size Can Indicate Reuse bzip2 Block size, bytes Different sizes have different dominant reuse distances

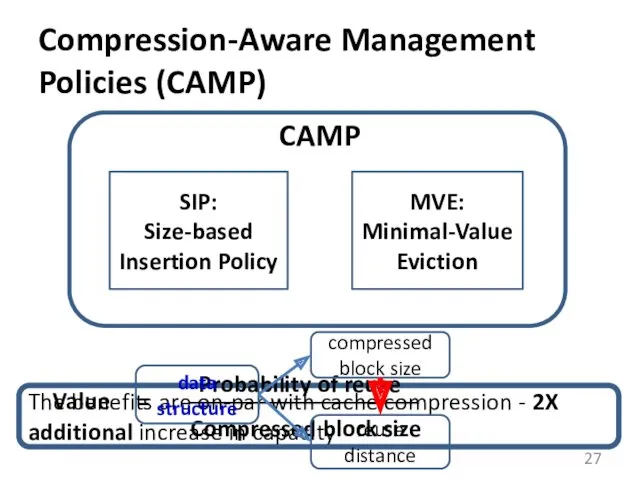

- 27. Compression-Aware Management Policies. The benefits are on par with cache compression: 2X additional increase in capacity

- 28. Processor Cache Memory Disk ✔ 1. Cache Compression 2. Compression and Cache Replacement 3. Memory Compression

- 29. 3. Main Memory Compression

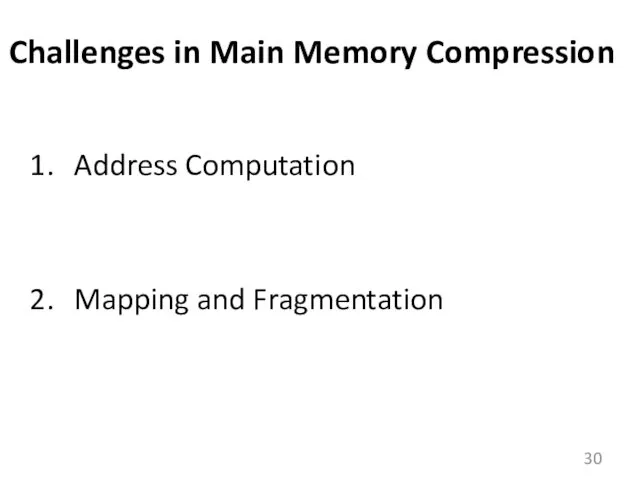

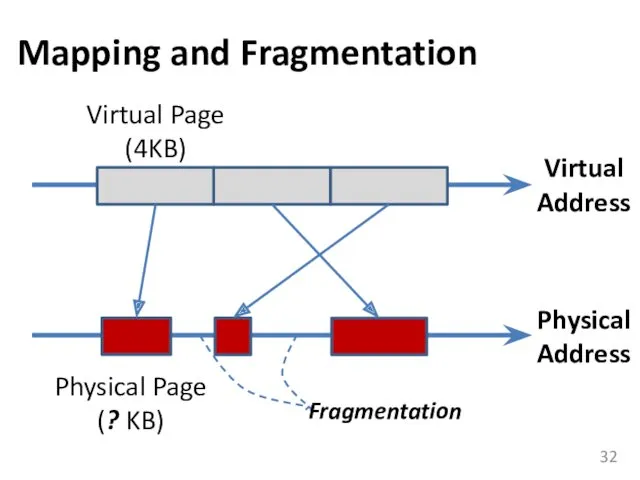

- 30. Challenges in Main Memory Compression: Address Computation; Mapping and Fragmentation

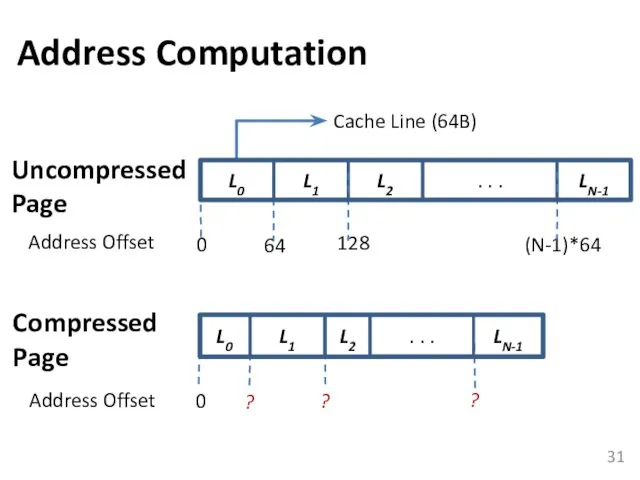

- 31. Cache lines (64B each) L0, L1, L2, …, LN-1 at address offsets 0, 64, 128, …, (N-1)*64
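The offsets on this slide follow from a single multiplication when every line is 64 bytes. Once lines are compressed to different sizes, finding line i requires adding up the sizes of all lines before it. The sketch below contrasts the two cases; the function names and the sample sizes are hypothetical.

```python
LINE_SIZE = 64  # bytes per uncompressed cache line, as on the slide


def uncompressed_offset(index: int) -> int:
    """Offset of line `index` in an uncompressed page: one multiplication."""
    return index * LINE_SIZE


def variable_size_offset(index: int, compressed_sizes) -> int:
    """Offset of line `index` when lines have different compressed sizes.

    Needs the sizes of all preceding lines (illustrative sizes, in bytes).
    """
    return sum(compressed_sizes[:index])


sizes = [8, 64, 20, 36]  # assumed compressed sizes for four lines
print(uncompressed_offset(2), variable_size_offset(3, sizes))
```

The running sum is what makes address computation a challenge for main memory compression: it adds work to the critical path of every access.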

- 32. Mapping and Fragmentation: Virtual Page (4KB), Physical Page (? KB), Fragmentation; Virtual Address, Physical Address

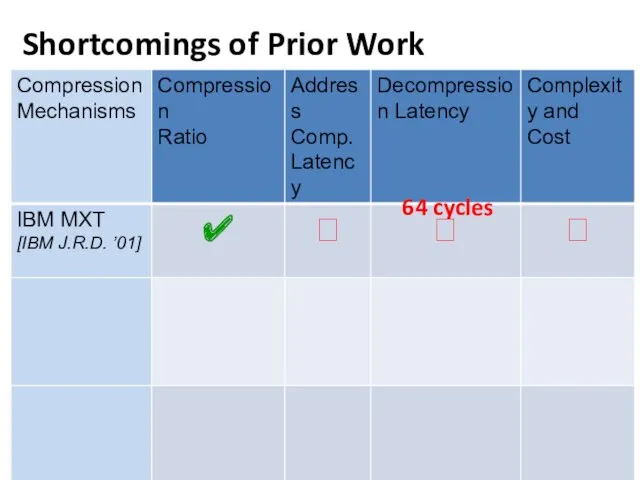

- 33. Shortcomings of Prior Work: 64 cycles

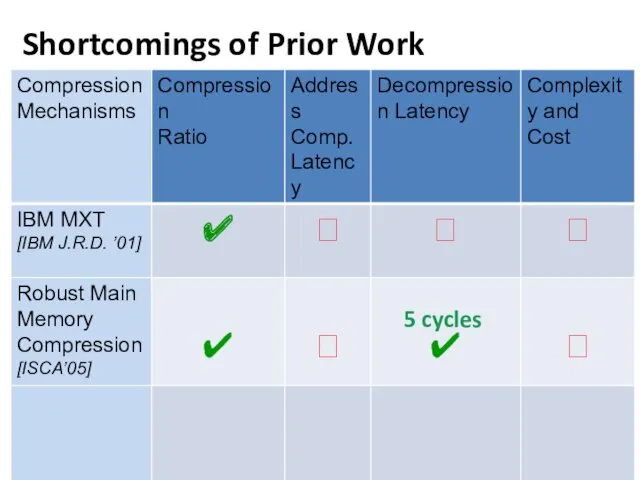

- 34. Shortcomings of Prior Work: 5 cycles

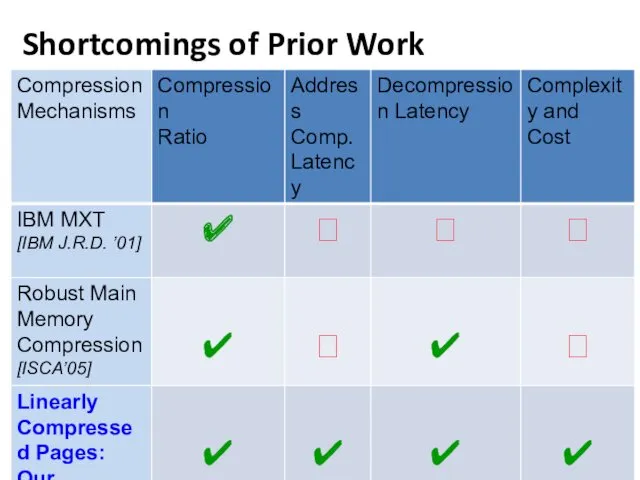

- 35. Shortcomings of Prior Work

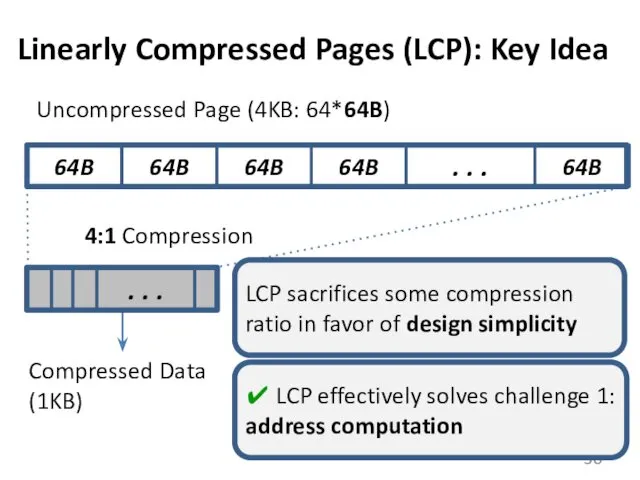

- 36. Linearly Compressed Pages (LCP): Key Idea 64B 64B 64B 64B . . . . . .

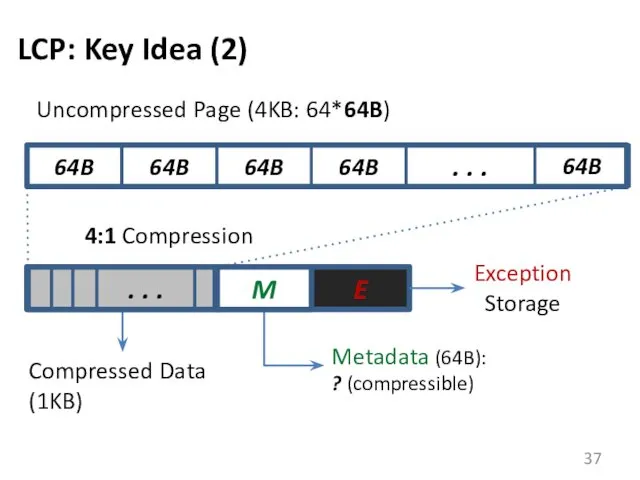

- 37. LCP: Key Idea (2) 64B 64B 64B 64B . . . . . . M E

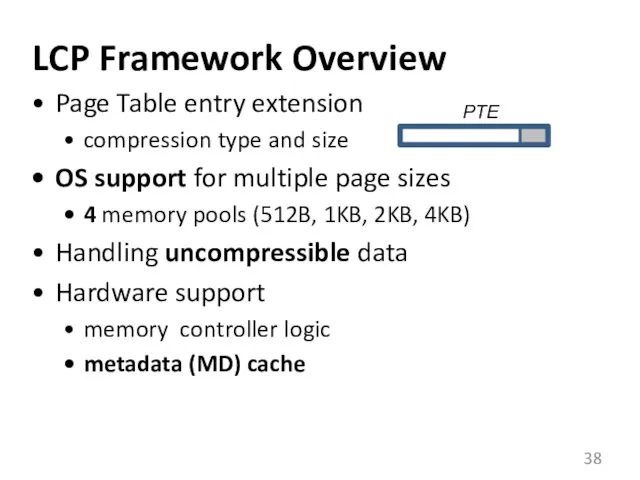
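As I understand the published LCP design, every cache line in a page is given a slot of the same compressed size, so the offset of a line is again a single multiplication. Lines that do not fit in a slot are kept uncompressed in a separate exception area. The sketch below illustrates that layout under those assumptions. The name `lcp_place_lines` is hypothetical, and reading "M" and "E" on the slide as metadata and exception storage is my interpretation.

```python
def lcp_place_lines(compressed_sizes, slot_size):
    """Assign each line a fixed-size slot and list the lines that overflow it.

    Hypothetical sketch: returns (slot_offsets, exception_indices).
    """
    # Every line gets a slot at index * slot_size, whether or not it fits.
    offsets = [i * slot_size for i in range(len(compressed_sizes))]
    # Lines larger than the slot would be stored in the exception area.
    exceptions = [i for i, size in enumerate(compressed_sizes) if size > slot_size]
    return offsets, exceptions


offsets, exceptions = lcp_place_lines([8, 16, 64, 12], slot_size=16)
print(offsets, exceptions)
```

The trade-off is between a smaller slot size (better compression, more exceptions) and a larger one (fewer exceptions, less saved space).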

- 38. LCP Framework Overview Page Table entry extension compression type and size OS support for multiple page

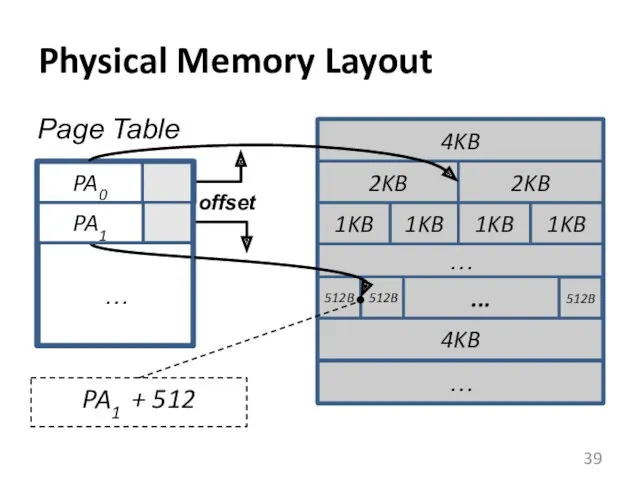

- 39. Physical Memory Layout 4KB 2KB 2KB 1KB 1KB 1KB 1KB 512B 512B ... 512B 4KB …

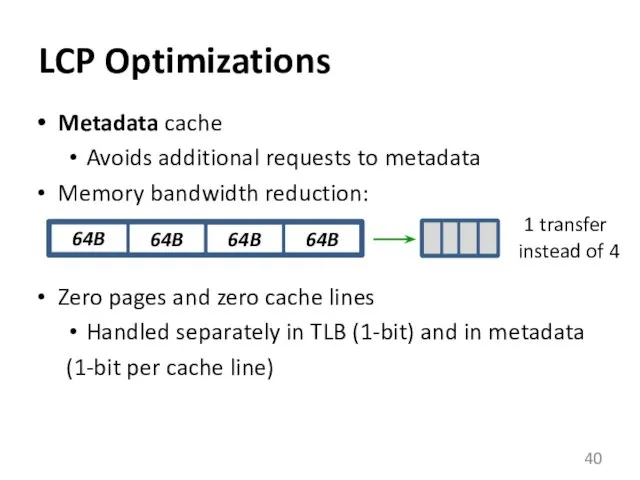
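The layout on this slide shows physical pages of 4KB, 2KB, 1KB, and 512B. One plausible way to use such size classes is to pick the smallest class that holds a compressed page. The selection rule and the name `pick_page_class` below are assumptions for illustration, not something the slide states.

```python
PAGE_CLASSES = (512, 1024, 2048, 4096)  # bytes; the sizes shown on the slide


def pick_page_class(compressed_bytes: int) -> int:
    """Return the smallest page class that can hold the compressed page.

    Hypothetical policy; raises if the data exceeds the largest class.
    """
    for size in PAGE_CLASSES:
        if compressed_bytes <= size:
            return size
    raise ValueError("compressed page does not fit in the largest class")


print(pick_page_class(300), pick_page_class(1500), pick_page_class(4096))
```

Restricting pages to a few sizes keeps allocation simple and limits fragmentation, at the cost of some wasted space inside each class.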

- 40. Metadata cache: avoids additional requests to metadata. Memory bandwidth reduction: Zero pages and zero cache lines

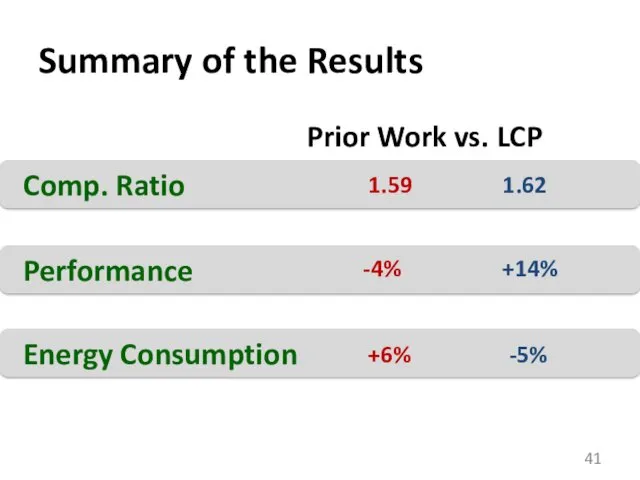

- 41. Summary of the Results: Comp. Ratio 1.62 vs. 1.59; Performance +14% vs. -4%; Energy Consumption -5% vs. +6%; Prior

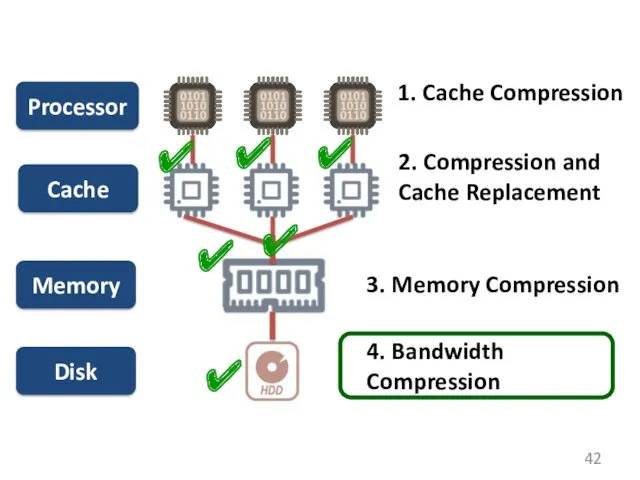

- 42. Processor Cache Memory Disk ✔ 1. Cache Compression 2. Compression and Cache Replacement 3. Memory Compression

- 43. 4. Energy-Efficient Bandwidth Compression CAL 2015

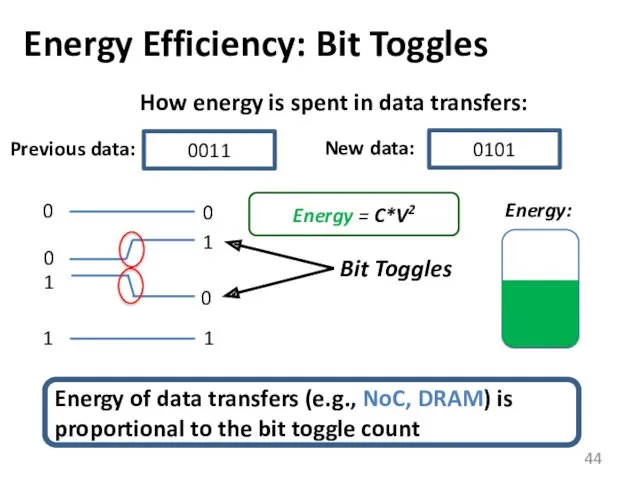

- 44. Energy Efficiency: Bit Toggles 0 1 0011 Previous data: Bit Toggles Energy = C*V² How energy

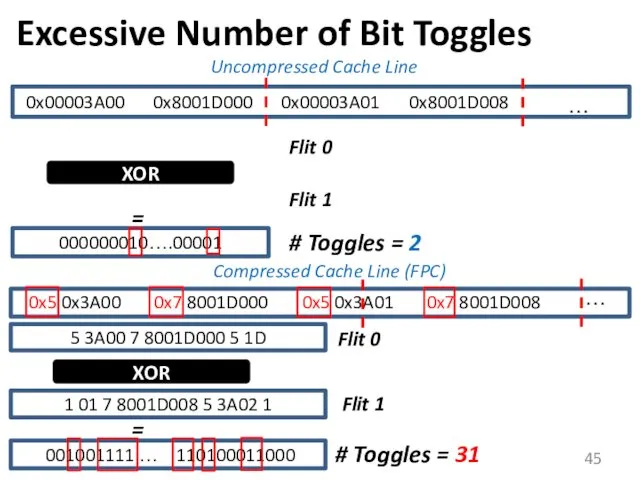

- 45. Excessive Number of Bit Toggles 0x00003A00 0x8001D000 0x00003A01 0x8001D008 … Flit 0 Flit 1 XOR 000000010….00001

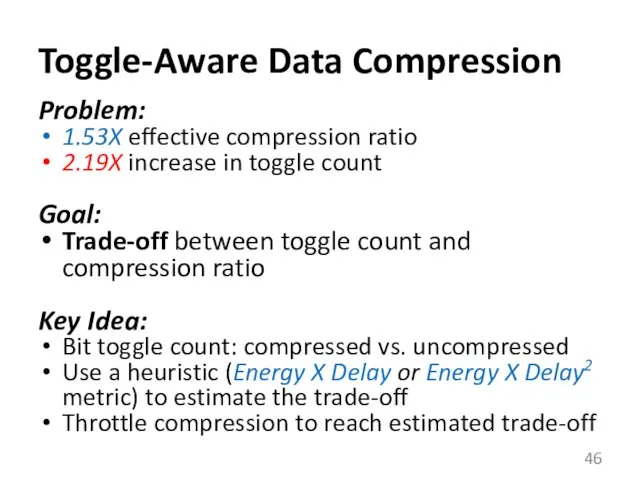
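A bit toggle occurs when a wire carries a different value in two consecutive transfers, so the toggle count between two flits is the number of set bits in their XOR. The sketch below counts toggles for the four words on this slide under two groupings. Both groupings (two 64-bit flits, or four 32-bit transfers) and the function names are assumptions, since the slide text does not state the flit width.

```python
def toggles(prev: int, cur: int) -> int:
    """Number of bit positions that differ between two consecutive transfers."""
    return bin(prev ^ cur).count("1")


def total_toggles(flits) -> int:
    """Sum of toggles over every pair of consecutive flits."""
    return sum(toggles(a, b) for a, b in zip(flits, flits[1:]))


words = [0x00003A00, 0x8001D000, 0x00003A01, 0x8001D008]

# Assumed grouping 1: two 64-bit flits, two words per flit.
flit0 = (words[0] << 32) | words[1]
flit1 = (words[2] << 32) | words[3]
wide = total_toggles([flit0, flit1])

# Assumed grouping 2: the same words sent as four 32-bit transfers.
narrow = total_toggles(words)

print(wide, narrow)
```

With these assumptions the aligned 64-bit grouping toggles 2 bits, while the word-by-word sequence toggles 24. This illustrates how changing data alignment, as compression does, can raise the toggle count even when fewer bits are sent.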

- 46. Toggle-Aware Data Compression. Problem: 1.53X effective compression ratio, 2.19X increase in toggle count. Goal: Trade-off between

- 48. Скачать презентацию

![Code Example to Support Intuition int A[N]; // small indices:](/_ipx/f_webp&q_80&fit_contain&s_1440x1080/imagesDir/jpg/100056/slide-24.jpg)

Основные инструменты продвижения в Instagram и YouTube

Основные инструменты продвижения в Instagram и YouTube Xsd - xml schema definition xslt- extensible stylesheet language transformations

Xsd - xml schema definition xslt- extensible stylesheet language transformations Периферийные устройства

Периферийные устройства Система электронного урегулирования претензий в Личном кабинете

Система электронного урегулирования претензий в Личном кабинете Презентация Ребенок и компьютер

Презентация Ребенок и компьютер Типология баз данных

Типология баз данных Електронна пошта

Електронна пошта Современные формы интернет-коммуникаций

Современные формы интернет-коммуникаций Доступ граждан к правовой информации

Доступ граждан к правовой информации Configuring for Recoverability (2)

Configuring for Recoverability (2) Индивидуальный практикум по информатике

Индивидуальный практикум по информатике Microsoft Office. Краткая характеристика программ

Microsoft Office. Краткая характеристика программ Первый взгляд на платформу .Net

Первый взгляд на платформу .Net Мультимедиа. Области применения мультимедиа. Создание анимации в презентациях

Мультимедиа. Области применения мультимедиа. Создание анимации в презентациях Test Documentation Overview

Test Documentation Overview Разработка игры Соображариум

Разработка игры Соображариум Электронные таблицы Microsoft Excel

Электронные таблицы Microsoft Excel Деревья принятия решения

Деревья принятия решения Шаблоны. Типы шаблонов

Шаблоны. Типы шаблонов Электронные таблицы. (7 класс)

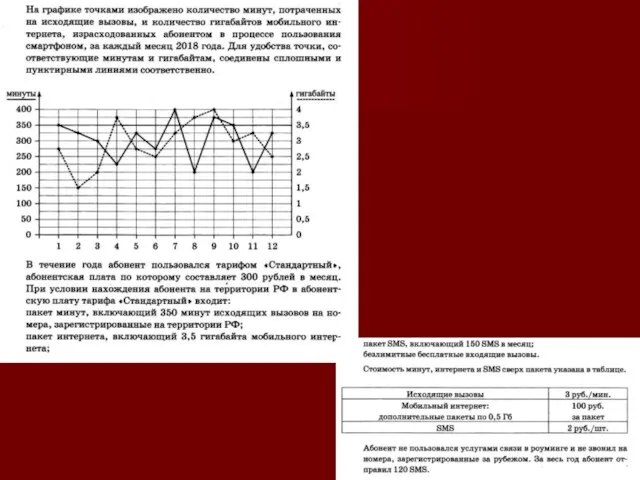

Электронные таблицы. (7 класс) Телефон. Задачи

Телефон. Задачи Обработка экономической и статистической информации. Тема 2.6

Обработка экономической и статистической информации. Тема 2.6 Технология хранения, поиска и сортировки информации

Технология хранения, поиска и сортировки информации Условный оператор. Операторы отношения

Условный оператор. Операторы отношения Типы сетевых угроз

Типы сетевых угроз Презентации

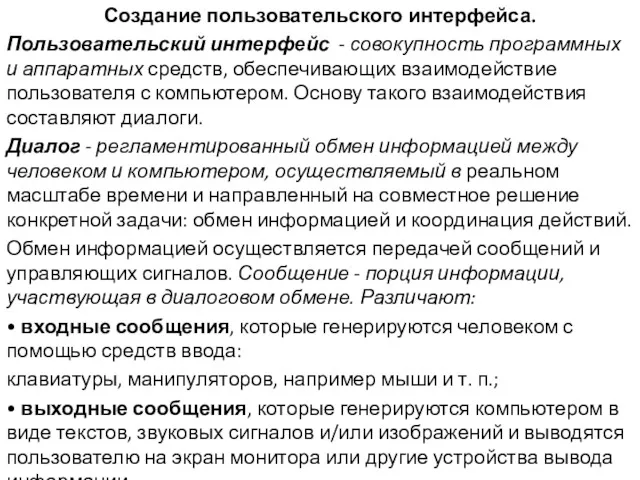

Презентации Создание пользовательского интерфейса

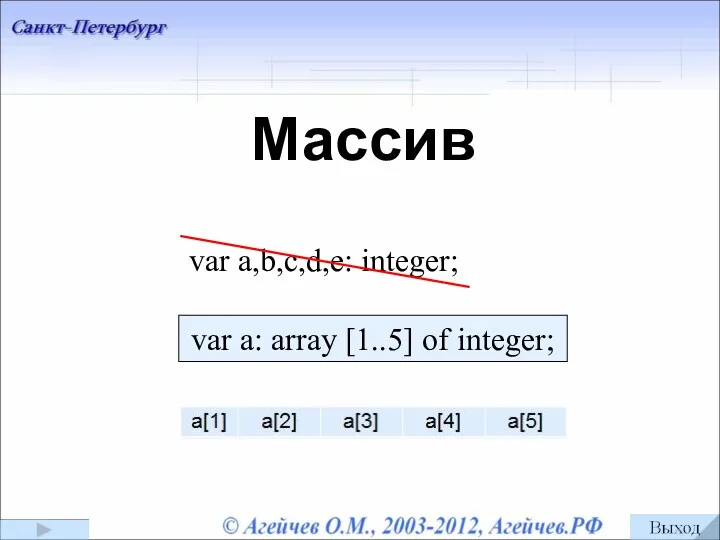

Создание пользовательского интерфейса Понятие массив. Объявление массивов. Обращение к элементам массива

Понятие массив. Объявление массивов. Обращение к элементам массива