Contents

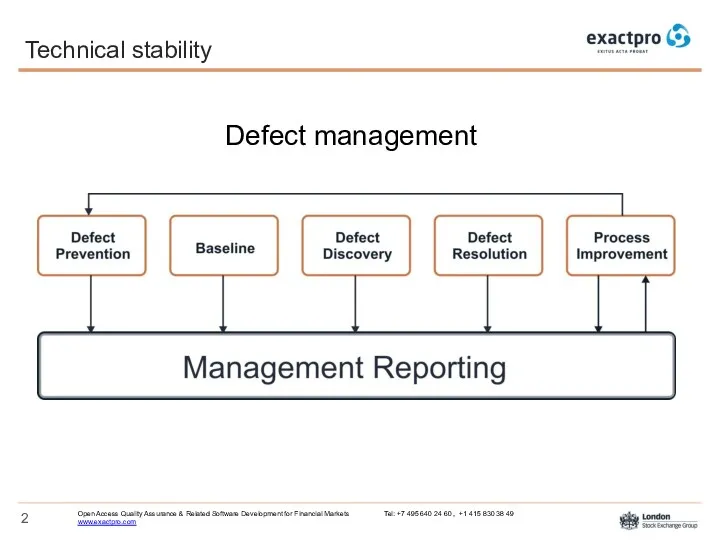

- 2. Defect management Technical stability

- 3. Areas of research in defect management [1]: automatic defect fixing; automatic defect detection; triaging defect reports

- 4. Automatic defect fixing Tasks: automatic fixing of unit tests; automatic fixing of found defects

- 5. Genetic programming Evolve both programs and test cases at the same time [1] Avoid defects and

- 6. Automatic defect fixing SBSE Searching code for possible defects [1] Adaptive bug isolation [2] [1] M.

- 7. Automatic defect fixing Tools: Co-evolutionary Automated Software Correction [1] AutoFix-E / AutoFixE2 [2] ReAssert [3] GenProg

- 8. Automatic defect detection Tasks: Search defects [1] Predict defects [2] Predict number of defects [3] Predict

- 9. Automatic defect detection Tools: Linkster [1] BugScout [2] [1] A. Bachmann, C. Bird, F. Rahman, P.

- 10. Triaging defect reports Tasks: Classify defect reports; Detecting duplicates; Automatic assignment

- 11. Triaging defect reports Classify defect reports: Defect or non-defect [1] Security risk [2] Crash-types [3] [1]

- 12. Triaging defect reports Reasons for duplicates [1]: inexperienced users, poor search features, multiple failures - one

- 13. Triaging defect reports Detecting duplicates: NLP + information extraction [1] Textual semantic + clustering [2] N-gram-based

- 14. Triaging defect reports Automatic assignment: Predict developer: text categorization [1], SVM [2], information retrieval [3]

- 15. Automatic defect fixing Tools: Bugzie [1] DREX [2] [1] A. Tamrawi, T. T. Nguyen, J. M. Al-Kofahi, and T. N. Nguyen, “Fuzzy set and cache-based approach for

- 16. Quality of defect-reports Tasks: Surveying Developers and Testers; Improving defect reports

- 17. Quality of defect-reports Results of survey [1]: [1] E. I. Laukkanen and M. V. Mantyla, “Survey

- 18. Improving defect reports: remove users' private information from bug reports [1]; measure comments [2]; eliminate invalid bug reports

- 19. Quality of defect-reports Tools: Cuezilla [1] N. Bettenburg, S. Just, A. Schröter, C. Weiss, R. Premraj,

- 20. Tasks: Analysis of defect data; Predict metrics of testing; Metrics and prediction of defect reports

- 21. Analysis of defect data: NLP [1] Visualization of defect databases [2] Automatically generating summaries [3]

- 22. Examples of metrics: time to fix / time to resolve [1] which defects get reopened [2] which

- 23. Time to resolve -> cheap/expensive bug Attributes: self-reported severity, readability, daily load, submitter reputation, bug severity

- 24. Metrics and prediction of defect reports Reasons for defect reopening: Bug report has insufficient information Developers

- 25. Metrics and prediction of defect reports Attributes (reopening of defect): Bug source Reputation of bug opener

- 26. Defect clustering Understand weaknesses of software Improve testing strategy Defect Management

- 27. Attributes for cluster analysis: priority, status, resolution, time to resolve, count of comments, area of testing
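
The N-gram-based duplicate detection listed on slide 13 could be sketched roughly as follows. This is only a minimal illustration, not the method of any paper cited in the slides: it assumes character trigrams compared with Jaccard similarity, and the names `char_ngrams`, `jaccard`, `find_duplicates` and the `threshold` value are hypothetical choices made for the example.

```python
def char_ngrams(text: str, n: int = 3) -> set[str]:
    """Return the set of character n-grams of a lower-cased, whitespace-normalized text."""
    normalized = " ".join(text.lower().split())
    return {normalized[i:i + n] for i in range(len(normalized) - n + 1)}


def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard similarity of two sets; defined here as 0.0 when both are empty."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def find_duplicates(reports: dict[str, str], threshold: float = 0.5):
    """Return (id_a, id_b, score) for every pair of reports scoring at or above threshold.

    `threshold` is an assumed cut-off; a real tracker would need to tune it.
    """
    ids = sorted(reports)
    grams = {rid: char_ngrams(reports[rid]) for rid in ids}
    pairs = []
    for i, left in enumerate(ids):
        for right in ids[i + 1:]:
            score = jaccard(grams[left], grams[right])
            if score >= threshold:
                pairs.append((left, right, score))
    return pairs


if __name__ == "__main__":
    sample = {
        "A": "App crashes when saving a file",
        "B": "app crashes when saving file",
        "C": "Login button has wrong color",
    }
    for left, right, score in find_duplicates(sample):
        print(left, right, round(score, 2))
```

Comparing every pair is quadratic in the number of reports, so a sketch like this only suits small defect databases; larger ones would likely need an index or a clustering step first.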

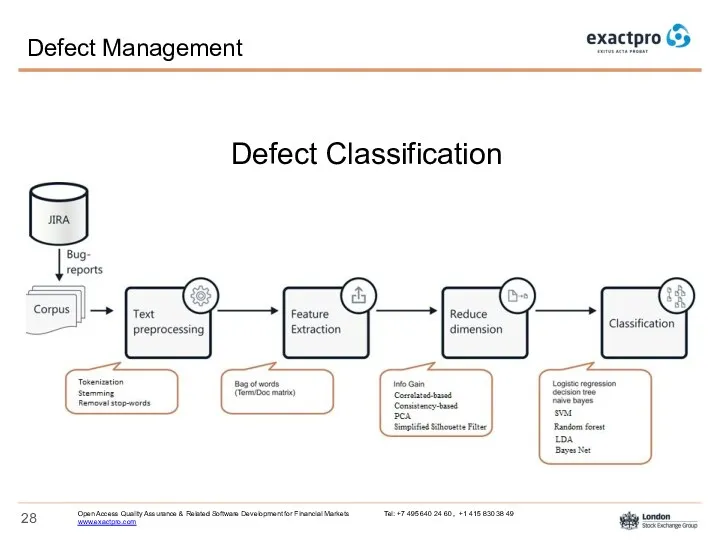

- 28. Defect Classification Defect Management

- 29. Analyse description utility: Stack trace (regular expressions); Steps to reproduce (classify); Expected/Observed behaviour (classify); Readability Defect

- 30. Attributes for prediction of metric “which defects get reopened”: Priority status resolution time to resolve count
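
Slide 29 suggests detecting stack traces in a defect description with regular expressions. A rough sketch of that idea is given below. The two patterns (Java-style `at pkg.Class.method(File.java:42)` frames and Python-style `File "x.py", line 3` frames), the function names, and the `min_frames` default are assumptions made for illustration; the slides do not say which trace formats or rules were actually used.

```python
import re

# Java-style frame, e.g. "    at com.example.Editor.save(Editor.java:42)"
JAVA_FRAME = re.compile(r"^[ \t]*at[ \t]+[\w.$<>]+\([^()]*\)[ \t]*$", re.MULTILINE)

# Python-style frame, e.g. '  File "app.py", line 3, in save'
PYTHON_FRAME = re.compile(r'^[ \t]*File[ \t]+"[^"]+",[ \t]+line[ \t]+\d+', re.MULTILINE)


def count_stack_frames(description: str) -> int:
    """Count lines that look like stack frames under the two assumed patterns."""
    return len(JAVA_FRAME.findall(description)) + len(PYTHON_FRAME.findall(description))


def has_stack_trace(description: str, min_frames: int = 2) -> bool:
    """Heuristic: treat the text as containing a trace if enough frame-like lines occur."""
    return count_stack_frames(description) >= min_frames


if __name__ == "__main__":
    report = (
        "Saving fails with an exception:\n"
        "java.lang.NullPointerException\n"
        "    at com.example.Editor.save(Editor.java:42)\n"
        "    at com.example.Main.main(Main.java:10)\n"
    )
    print(has_stack_trace(report))
    print(has_stack_trace("The app crashes at startup."))
```

Requiring at least two frame-like lines is a deliberate choice to reduce false positives from ordinary sentences that happen to start with "at"; the resulting boolean could then serve as one feature when scoring the usefulness of a description.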


![Areas of research in defect management [1]: automatic defect fixing](/_ipx/f_webp&q_80&fit_contain&s_1440x1080/imagesDir/jpg/341010/slide-2.jpg)

![Automatic defect fixing SBSE Searching code for possible defects [1]](/_ipx/f_webp&q_80&fit_contain&s_1440x1080/imagesDir/jpg/341010/slide-5.jpg)

![Automatic defect fixing Tools: Co-evolutionary Automated Software Correction [1] AutoFix-E](/_ipx/f_webp&q_80&fit_contain&s_1440x1080/imagesDir/jpg/341010/slide-6.jpg)

![Automatic defect detection Tasks: Search defects [1] Predict defects [2]](/_ipx/f_webp&q_80&fit_contain&s_1440x1080/imagesDir/jpg/341010/slide-7.jpg)

![Automatic defect detection Tools: Linkster [1] BugScout [2] [1] A.](/_ipx/f_webp&q_80&fit_contain&s_1440x1080/imagesDir/jpg/341010/slide-8.jpg)

![Triaging defect reports Classify defect reports: Defect or non-defect [1]](/_ipx/f_webp&q_80&fit_contain&s_1440x1080/imagesDir/jpg/341010/slide-10.jpg)

![Triaging defect reports Reasons for duplicates [1]: inexperienced users, poor](/_ipx/f_webp&q_80&fit_contain&s_1440x1080/imagesDir/jpg/341010/slide-11.jpg)

![Triaging defect reports Detecting duplicates: NLP + information extraction [1]](/_ipx/f_webp&q_80&fit_contain&s_1440x1080/imagesDir/jpg/341010/slide-12.jpg)

![Automatic defect fixing Tools: Bugzie [1] DREX [2] [1] A.Tamrawi,T.T.Nguyen,J.M.Al-Kofahi,and](/_ipx/f_webp&q_80&fit_contain&s_1440x1080/imagesDir/jpg/341010/slide-14.jpg)

![Quality of defect-reports Results of survey [1]: [1] E. I.](/_ipx/f_webp&q_80&fit_contain&s_1440x1080/imagesDir/jpg/341010/slide-16.jpg)

![Improving defect reports: eliminate user private information from bug-report [1]](/_ipx/f_webp&q_80&fit_contain&s_1440x1080/imagesDir/jpg/341010/slide-17.jpg)

![Tools: Cuezilla Quality of defect-reports [1] N. Bettenburg, S. Just,](/_ipx/f_webp&q_80&fit_contain&s_1440x1080/imagesDir/jpg/341010/slide-18.jpg)

![Analysis of defect data: NLP [1] Visualization of defect](/_ipx/f_webp&q_80&fit_contain&s_1440x1080/imagesDir/jpg/341010/slide-20.jpg)

![Examples of metrics: time to fix / time to resolve [1]](/_ipx/f_webp&q_80&fit_contain&s_1440x1080/imagesDir/jpg/341010/slide-21.jpg)
